Generative AI enables users to quickly generate new content based on a variety of inputs. Inputs and outputs to these models can include text, images, sounds, animation, 3D models, or other types of data.

NVIDIA Jetson can run large language models (LLMs), vision transformers, and Stable Diffusion locally. That includes the largest Llama-2-70B model on Jetson AGX Orin, and Llama-2-13B at interactive rates. Seeed is an Elite Partner of the NVIDIA Partner Network, offering Jetson platform hardware and ODM services. To accelerate generative AI deployment on edge devices, we offer interactive, hands-on experiences with the NVIDIA Jetson platform.

Here, you can learn how to deploy LLMs and CLIP models locally on NVIDIA Jetson Orin devices. You can also explore tutorials on vision language models (VLMs) and vision transformers (ViTs), which merge vision AI with natural language processing for deep, comprehensive scene understanding. Now you can focus on uncovering the untapped potential of generative AI in the physical world.

Explore Trending Large Models for Real-world AI Tasks

One of the breakthroughs with generative AI models is the ability to leverage different learning approaches, including unsupervised or semi-supervised learning for training. This has given organizations the ability to more easily and quickly leverage a large amount of unlabeled data to create foundation models. As the name suggests, foundation models can be used as a base for AI systems that can perform multiple tasks.

Model: Llama3

The latest release of Llama3 stands out as the most advanced and accessible large language model currently on the market. Featuring a robust design with 8 billion parameters, Llama3 showcases remarkable efficiency, achieving up to 40 tokens per second on the Jetson AGX Orin platform and 15 tokens per second on the Jetson Orin Nano.
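To put these rates in perspective, the short sketch below estimates how long a reply of a given length would take on each device. It assumes the quoted figures are sustained generation rates and ignores prompt processing time; the 300-token reply length is a hypothetical value chosen for illustration.

```python
# Generation rates quoted above, in tokens per second (upper bounds from the text).
RATES_TOK_PER_S = {
    "Jetson AGX Orin": 40.0,
    "Jetson Orin Nano": 15.0,
}


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate the time to generate num_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    response_tokens = 300  # hypothetical reply length, not a measured value
    for device, rate in RATES_TOK_PER_S.items():
        seconds = generation_seconds(response_tokens, rate)
        print(f"{device}: ~{seconds:.1f} s for {response_tokens} tokens")
```

Under these assumptions, a 300-token reply takes about 7.5 seconds on the Jetson AGX Orin and about 20 seconds on the Jetson Orin Nano.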

Model: Llama2 with RAG

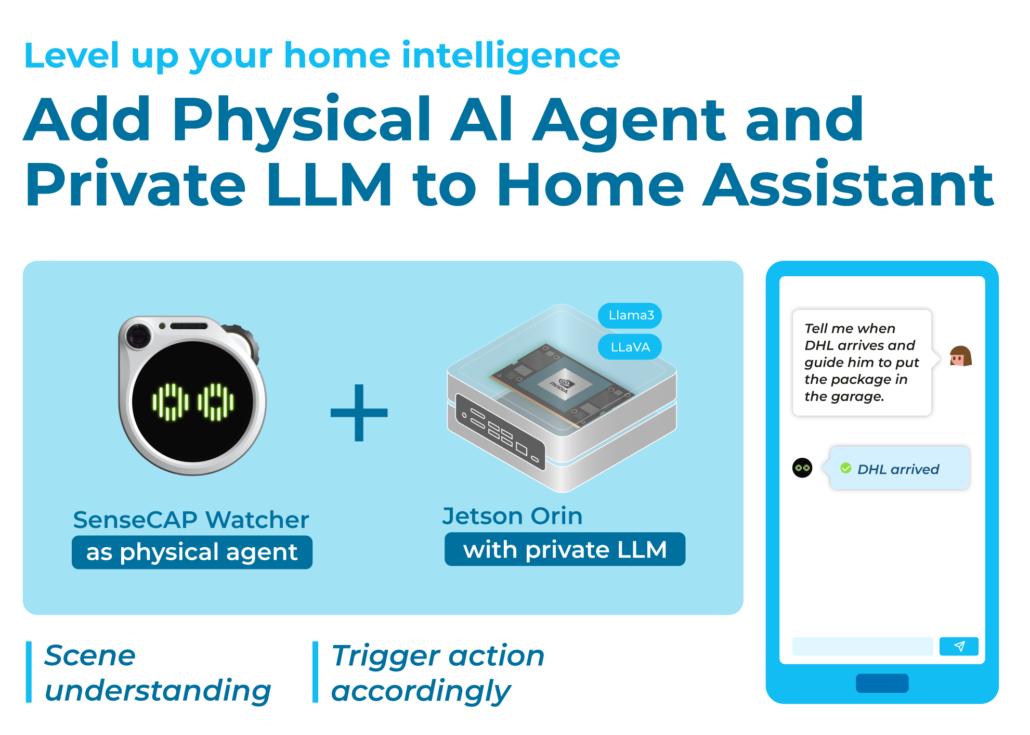

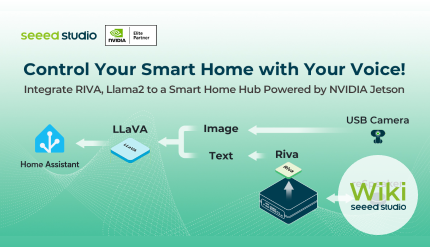

Llama2 is an open-source large language model that can be paired with a live NVIDIA Riva ASR/TTS service for specific language tasks or domains, enabling developers to translate verbal commands into actions for Home Assistant control. It is also well suited to building local Retrieval-Augmented Generation (RAG) systems, enhancing interactive robotics, and more.

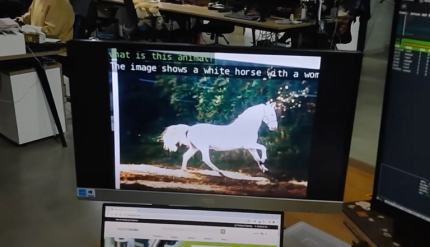
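The retrieval step of a local RAG system can be sketched in a few lines. This is a minimal illustration, not the pipeline used in the tutorials: bag-of-words cosine similarity stands in for an embedding model and vector database, the documents and entity names are hypothetical, and the final call to the LLM is omitted.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: list) -> str:
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base for a home-automation assistant.
DOCS = [
    "The living room lamp is controlled by the switch named light.living_room.",
    "The thermostat in the bedroom accepts temperatures between 16 and 28 degrees.",
    "The garage door reports its state as open or closed.",
]

if __name__ == "__main__":
    question = "How do I turn on the living room lamp?"
    passages = retrieve(question, DOCS, k=1)
    # The resulting prompt would be sent to a locally hosted LLM.
    print(build_prompt(question, passages))
```

In a real deployment, the similarity function would typically be replaced by embeddings stored in a vector database, while the retrieve-then-prompt structure stays the same.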

Model: LLaVA

LLaVA is a large multimodal model that combines language understanding with visual capabilities, enabling it to interpret images and text together and respond in text. By running LLaVA live on the Jetson AGX Orin, developers can achieve a deeper understanding of video content, extracting pivotal information for advanced content search and interpretation.

Get Started Building Generative AI Applications

Speech Recognition: Local Voice Chatbot

Model: Llama2-based LLM

Seeed Application Engineer Youjiang Yu built a local voice chatbot using NVIDIA Riva and Meta Llama2 on Jetson AGX Orin. NVIDIA Riva handles automatic speech recognition (ASR), converting spoken language into text, while Llama2 generates responses based on the context input. This system offers an efficient solution for real-time, private voice interactions and natural language understanding.
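The flow described above (speech to text, then text to a context-aware reply) can be sketched as a small pipeline. This is an illustrative outline only, not the project's implementation: the ASR and LLM stages are passed in as plain callables, and `fake_asr` and `fake_llm` are hypothetical stand-ins for a Riva client and a Llama2 runtime.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class VoiceChatbot:
    transcribe: Callable[[bytes], str]  # ASR stage: audio in, text out
    generate: Callable[[str], str]      # LLM stage: prompt in, reply out
    history: List[Tuple[str, str]] = field(default_factory=list)
    max_turns: int = 4                  # past turns kept as context

    def build_prompt(self, user_text: str) -> str:
        """Combine recent conversation turns with the new user input."""
        lines = []
        for user, bot in self.history[-self.max_turns:]:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {bot}")
        lines.append(f"User: {user_text}")
        lines.append("Assistant:")
        return "\n".join(lines)

    def respond(self, audio: bytes) -> str:
        """Run one turn: transcribe the audio, generate a reply, store the turn."""
        user_text = self.transcribe(audio)
        reply = self.generate(self.build_prompt(user_text))
        self.history.append((user_text, reply))
        return reply


def fake_asr(audio: bytes) -> str:
    """Hypothetical ASR stub: treats the audio bytes as UTF-8 text."""
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    """Hypothetical LLM stub: echoes the most recent user line."""
    user_lines = [l for l in prompt.splitlines() if l.startswith("User: ")]
    return "You said: " + user_lines[-1][len("User: "):]


if __name__ == "__main__":
    bot = VoiceChatbot(transcribe=fake_asr, generate=fake_llm)
    print(bot.respond(b"turn on the lights"))
```

Keeping the stages as injected callables means the stubs can be swapped for real services without changing the turn-handling logic.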

Vision2Audio - Visually Impaired Assistant

Model: MLC LLM, LLaVA, multimodal VLM

Nurgaliyev Shakhizat built a locally hosted assistive device for blind users, powered by Jetson AGX Orin 64GB and designed for real-time image-to-speech translation. The device aids outdoor navigation by helping users avoid obstacles and excels at object recognition, significantly enhancing daily life for visually impaired users.

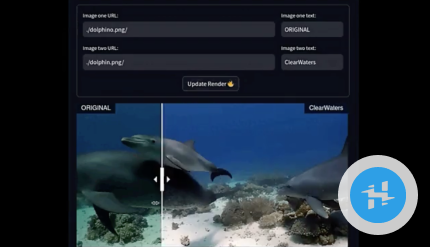

Underwater Image Enhancement

Model: Denoising diffusion probabilistic model

Vy Pham created a denoising pipeline that uses a Transformer-based diffusion model with a GAN upscaler for enhanced image clarity, running on Jetson AGX Orin with a Streamlit web UI. The system processes photos and videos in real time, enhancing color richness and reducing artifacts, pushing the boundaries of image enhancement technology.

Accelerate Generative AI Application Building

NVIDIA Jetson Generative AI Lab

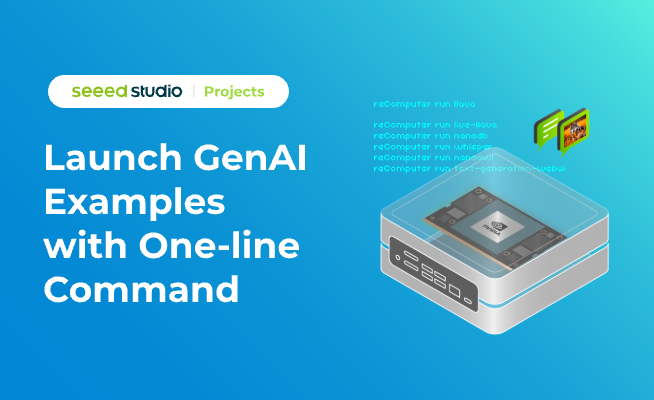

Jetson Examples GitHub Library

This repository provides examples for running AI models and applications on NVIDIA Jetson devices with a one-line command, making it easy to deploy state-of-the-art AI models for tasks such as language understanding, computer vision, and multimodal processing. It supports a variety of examples based on generative AI models, including text generation, image generation, vision transformers, vector databases, and audio models.

A Glimpse of Seeed's NVIDIA Jetson Series

Contact Us at edgeai@seeed.cc