ARMOR: Egocentric Perception for Humanoid Robot Powered by XIAO ESP32S3

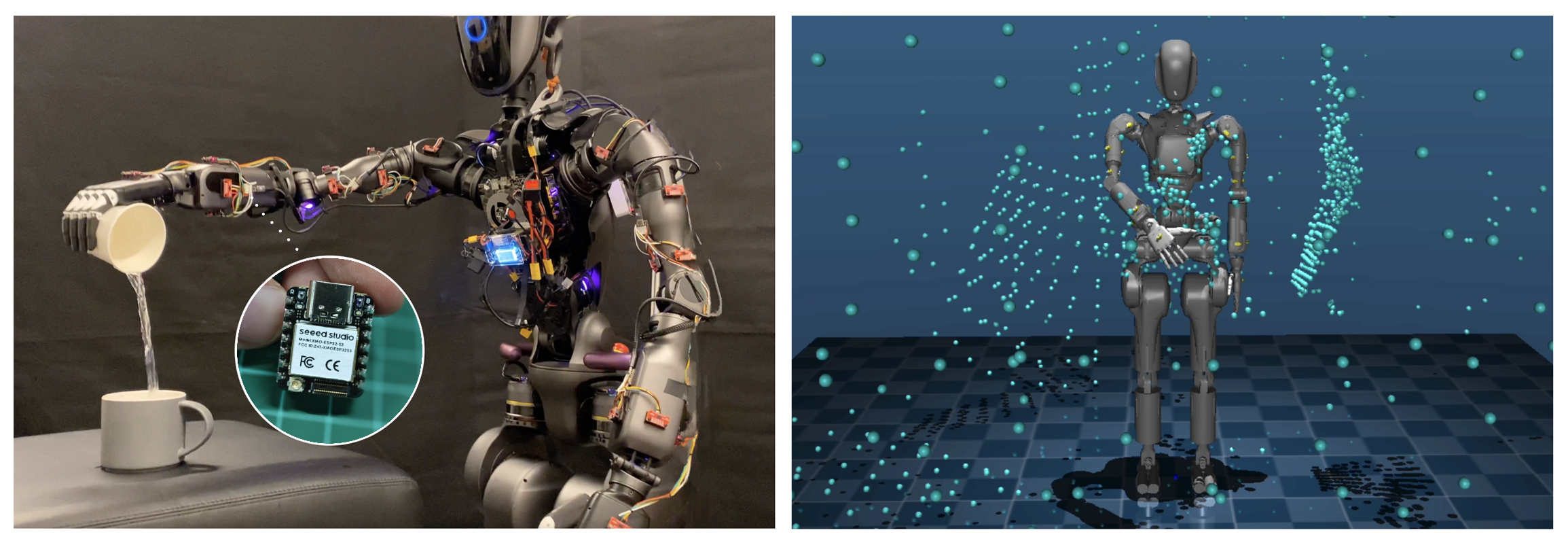

By Kezang Loday 3 months ago

Daehwa Kim (Carnegie Mellon University), Mario Srouji, Chen Chen, and Jian Zhang (Apple) have developed ARMOR, an innovative egocentric perception hardware and software system for humanoid robots. By combining Seeed Studio XIAO ESP32S3-based wearable depth sensor networks and transformer-based policies, ARMOR tackles the challenges of collision avoidance and motion planning in dense environments. This system enhances spatial awareness and enables nimble and safe motion planning, outperforming traditional perception setups. ARMOR was deployed on the GR1 humanoid robot from Fourier Intelligence, showcasing its real-world applications.

Hardware Used

ARMOR uses the following hardware components:

- XIAO ESP32S3 microcontrollers: Efficiently collect data from the ToF sensors over I2C and stream it to the robot’s onboard computer via USB.

- Onboard Computer: NVIDIA Jetson Xavier NX processes sensor inputs.

- GPU (NVIDIA GeForce RTX 4090): Handles ARMOR-Policy’s inference-time optimization for motion planning.

- SparkFun VL53L5CX Time-of-Flight (ToF) lidar sensors: Distributed across the robot’s body for comprehensive point cloud perception.

How ARMOR Works

ARMOR’s egocentric perception hardware is a distributed network of ToF lidar sensors. Groups of four ToF sensors are connected to Seeed Studio XIAO ESP32S3 microcontrollers, capturing high-precision depth information from the environment. The XIAO ESP32S3 serves as the intermediary controller, managing real-time sensor data transmission. It streams the collected depth data via USB to the robot’s onboard computer, the NVIDIA Jetson Xavier NX, which then wirelessly transmits the data to a Linux machine equipped with an NVIDIA GeForce RTX 4090 GPU for processing.

This data pipeline enables the creation of an occlusion-free point cloud around the humanoid robot, which is the environmental input for the ARMOR neural motion planning algorithm. The distributed, lightweight hardware setup also improves spatial awareness and overcomes the limitations of head-mounted or external cameras, which often fail in cluttered or occluded environments.
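To make the point cloud step more concrete, here is a minimal sketch of how depth frames from several body-mounted ToF sensors could be merged into one cloud in the robot frame. It is not the ARMOR team's code. The 8x8 zone grid, the 45° per-axis field of view, the 4 m range limit, the treatment of each reading as a distance along the zone's central ray, and all function names (`zone_directions`, `frame_to_points`, `merge_sensors`) are assumptions chosen for illustration.

```python
import math

GRID = 8          # assumed: sensor configured for an 8x8 zone grid
FOV_DEG = 45.0    # assumed: field of view per axis, in degrees
MAX_MM = 4000     # assumed: readings beyond this are treated as invalid


def zone_directions(grid=GRID, fov_deg=FOV_DEG):
    """Unit ray through the centre of each zone, sensor frame, z pointing forward."""
    half = math.radians(fov_deg) / 2.0
    dirs = []
    for row in range(grid):
        for col in range(grid):
            # Angle of the zone centre, spread from -half to +half across the grid.
            ax = ((col + 0.5) / grid * 2.0 - 1.0) * half
            ay = ((row + 0.5) / grid * 2.0 - 1.0) * half
            x, y, z = math.tan(ax), math.tan(ay), 1.0
            norm = math.sqrt(x * x + y * y + z * z)
            dirs.append((x / norm, y / norm, z / norm))
    return dirs


def frame_to_points(depth_mm, rotation, translation):
    """Turn one flat list of zone distances (mm) into robot-frame points (m).

    rotation is a 3x3 nested list and translation a 3-tuple; together they
    describe where the sensor sits on the robot body (hypothetical calibration).
    """
    points = []
    for d, (dx, dy, dz) in zip(depth_mm, zone_directions()):
        if d <= 0 or d > MAX_MM:
            continue  # drop missing or out-of-range readings
        r = d / 1000.0
        p = (dx * r, dy * r, dz * r)
        points.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ))
    return points


def merge_sensors(frames, poses):
    """Concatenate the points of every sensor into a single cloud."""
    cloud = []
    for depth_mm, (rotation, translation) in zip(frames, poses):
        cloud.extend(frame_to_points(depth_mm, rotation, translation))
    return cloud
```

Because every sensor contributes points from its own mounting position, an obstacle hidden from one sensor can still appear in the merged cloud through another, which is the intuition behind the occlusion-free coverage described above.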

Daehwa Kim, one of the core developers of this project, explains why the team selected the Seeed Studio XIAO:

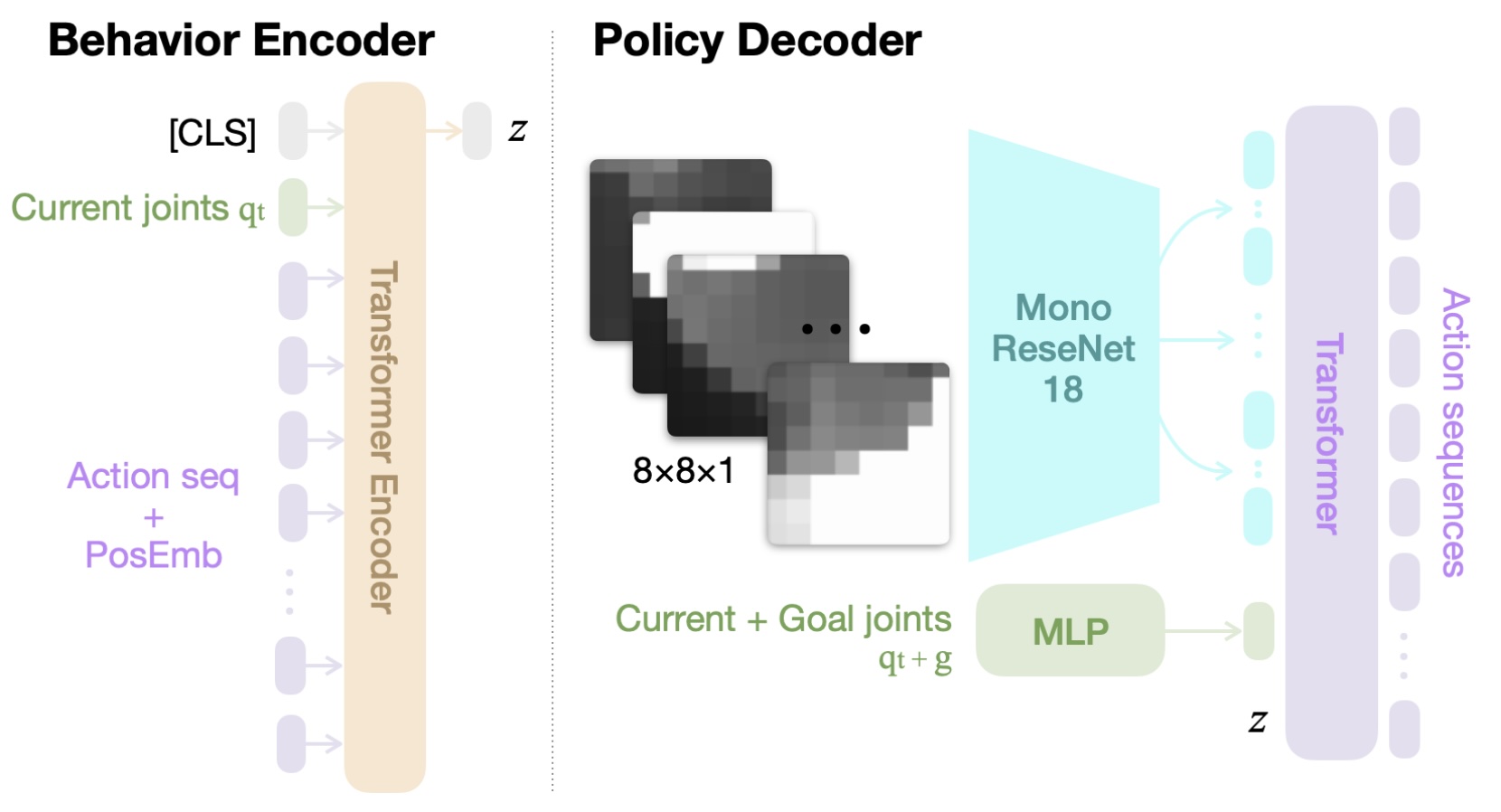

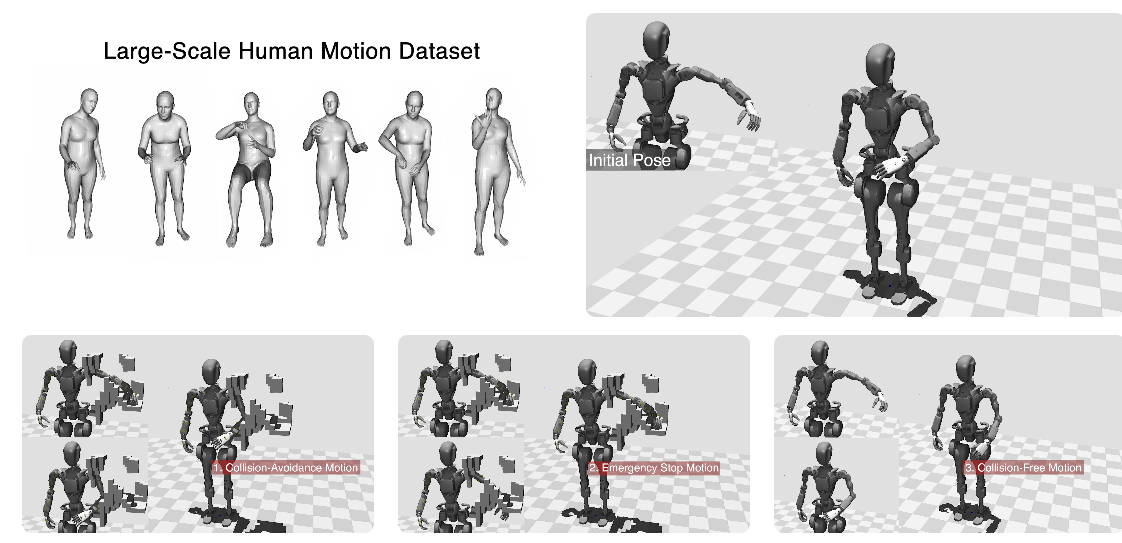

The neural motion planning system, ARMOR-Policy, is built on a transformer-based architecture called the Action Chunking Transformer. This policy was trained on 86 hours of human motion data from the AMASS dataset using imitation learning. ARMOR-Policy processes the robot’s current state, goal positions, and sensor inputs to predict safe and efficient trajectories in real-time. The system leverages latent variables to explore multiple trajectory solutions during inference, ensuring flexibility and robustness.
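The idea of exploring several trajectory solutions through latent variables can be illustrated with a toy sketch. Everything here is a stand-in: `decode_trajectory` is a hypothetical placeholder for the trained transformer decoder (it simply bends a straight line sideways by the latent), and the scoring rule, safety margin, and sample count are assumptions, not values from the ARMOR paper. The pattern it shows is only the general one: sample several latents, decode one candidate trajectory per latent, and keep the candidate that best clears the sensed point cloud.

```python
import math
import random


def decode_trajectory(start, goal, latent, steps=10):
    """Hypothetical decoder stand-in: a straight line bent by the latent vector."""
    traj = []
    for k in range(1, steps + 1):
        t = k / steps
        bulge = 4.0 * t * (1.0 - t)  # 0 at both ends, 1 at the midpoint
        traj.append(tuple(
            s + t * (g - s) + bulge * z
            for s, g, z in zip(start, goal, latent)
        ))
    return traj


def min_clearance(traj, cloud):
    """Smallest distance between any waypoint and any point-cloud point."""
    return min(math.dist(p, q) for p in traj for q in cloud)


def plan(start, goal, cloud, n_samples=32, safety=0.10, seed=0):
    """Sample latents, decode candidates, return the best (trajectory, clearance)."""
    rng = random.Random(seed)
    best = None
    best_score = None
    for _ in range(n_samples):
        latent = tuple(rng.gauss(0.0, 0.2) for _ in start)
        traj = decode_trajectory(start, goal, latent)
        clearance = min_clearance(traj, cloud)
        length = sum(math.dist(a, b) for a, b in zip([start] + traj, traj))
        # Prefer candidates that reach the safety margin, then shorter paths.
        score = (min(clearance, safety), -length)
        if best_score is None or score > best_score:
            best, best_score = (traj, clearance), score
    return best
```

With an obstacle point placed directly on the straight line between start and goal, for example `plan((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), [(0.5, 0.0, 0.0)])`, the zero-latent path passes through the obstacle, while the sampled candidates detour around it.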

ARMOR was tested in both simulated and real-world scenarios. Compared to perception setups that rely on dense head-mounted or external cameras, it reduced collisions by 63.7% and increased success rates by 78.7%. Additionally, the transformer-based ARMOR-Policy achieved a 26× reduction in computational latency compared to sampling-based motion planners like cuRobo, enabling efficient and nimble collision avoidance.

Real World Hardware Deployment [Source: Daehwa Kim]

Discover more about ARMOR

Want to explore ARMOR’s capabilities? The research team will soon release the source code, hardware details, and 3D CAD files on their GitHub repository. Dive deeper into this cutting-edge project by reading their paper on arXiv. Stay tuned for updates to replicate and innovate on this revolutionary approach to humanoid robot motion planning! To see ARMOR in action, check out their demonstration video on YouTube.
