Deploy YOLOv10 on NVIDIA Jetson Orin with a One-Line Command: Real-Time End-to-End Object Detection

- YOLOv10 is a state-of-the-art real-time object detection model.

- NVIDIA Jetson Orin is a powerful embedded platform for real-time object detection.

- Jetson-examples is a toolkit designed to deploy containerized applications including computer vision and generative AI on NVIDIA Jetson devices.

Introduction to YOLOv10

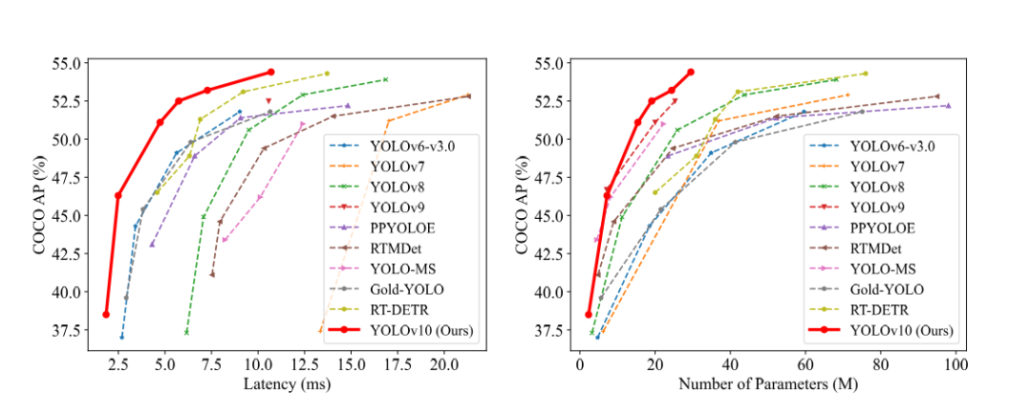

Developed by researchers at Tsinghua University, YOLOv10 takes a new approach to real-time object detection, addressing the limitations of previous YOLO versions in both post-processing and model architecture. By eliminating non-maximum suppression (NMS) and optimizing various model components, YOLOv10 achieves state-of-the-art performance and efficiency across different model scales. For instance, on COCO, YOLOv10-S is 1.8 times faster than RT-DETR-R18 at similar accuracy, with 2.8 times fewer parameters and FLOPs. Compared to YOLOv9-C, YOLOv10-B has 46% lower latency and 25% fewer parameters at the same performance.

Model Architecture and Performance

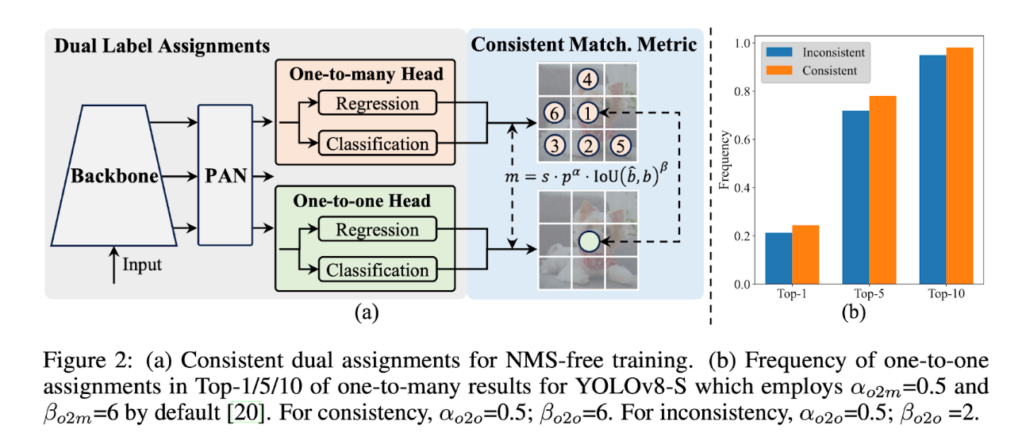

YOLOv10 builds on YOLOv8, which was selected as the baseline for its commendable latency-accuracy balance and its availability in various model sizes. On top of that baseline, YOLOv10 uses consistent dual assignments for NMS-free training and a holistic efficiency-accuracy-driven model design. Together, these improve both the efficiency and the accuracy of YOLOv10 models.
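The idea behind consistent dual assignments can be sketched in a few lines. The snippet below is a simplified illustration, not the training code: it assumes a matching score of the form `s * p**alpha * IoU**beta`, as we read it from the YOLOv10 paper, and the function names, the `alpha`/`beta` values, and the candidate numbers are all made up for the example. A one-to-many branch keeps the top few candidates per object for rich supervision during training, while a one-to-one branch keeps only the best one, which is what makes NMS unnecessary at inference time.

```python
def matching_score(cls_prob, iou, in_box=1.0, alpha=0.5, beta=6.0):
    """Score of the assumed form s * p**alpha * IoU**beta (illustrative defaults)."""
    return in_box * (cls_prob ** alpha) * (iou ** beta)


def assign(candidates, top_k):
    """Return indices of the top_k candidates ranked by matching score."""
    ranked = sorted(
        range(len(candidates)),
        key=lambda i: matching_score(*candidates[i]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical (class probability, IoU) pairs for predictions near one object.
candidates = [(0.90, 0.60), (0.70, 0.85), (0.80, 0.75), (0.30, 0.90)]

one_to_many = assign(candidates, top_k=3)  # several positives per object
one_to_one = assign(candidates, top_k=1)   # a single positive per object

print(one_to_many)
print(one_to_one)
```

Because both branches rank candidates with the same score in this sketch, the single prediction chosen by the one-to-one branch is always the best positive of the one-to-many branch, so the two branches do not pull the model in different directions.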

Key Comparisons:

- YOLOv10: Designed as a real-time end-to-end object detection model, YOLOv10 is significantly faster than its predecessors. It achieves high performance on benchmarks like the COCO dataset while maintaining a lower number of parameters, contributing to its efficiency.

- YOLOv8: Developed by Ultralytics, YOLOv8 is renowned for its state-of-the-art object detection and image segmentation capabilities. It excels in accuracy and robustness across various computer vision tasks but does not match the speed of YOLOv10.

Speed and Efficiency:

- YOLOv10: Its optimizations enable it to process images more quickly, making it ideal for real-time applications. This speed advantage is achieved without a significant increase in parameters, ensuring the model remains lightweight and efficient.

- YOLOv8: While efficient, it focuses more on high accuracy and robustness, sometimes at the cost of processing speed.

How Does YOLOv10 Achieve Its Fast Speed?

Key Features:

- NMS-Free Training: Utilizes consistent dual assignments to eliminate the need for NMS, reducing inference latency.

- Holistic Model Design: Comprehensive optimization of components from both efficiency and accuracy perspectives, including lightweight classification heads, spatial-channel decoupled downsampling, and rank-guided block design.

- Enhanced Model Capabilities: Incorporates large-kernel convolutions and partial self-attention modules to improve performance without significant computational cost.
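To see what NMS-free inference saves, it helps to look at the step being removed. The following is a minimal pure-Python sketch of greedy NMS, the post-processing pass that detectors relying on NMS run after every forward pass. The function names, the box values, and the 0.5 threshold are illustrative assumptions, not code from YOLOv10 or any other YOLO release.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate detections of one object plus one separate detection.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores)
print(kept)  # [0, 2]
```

The cost of this pass grows with the number of candidate boxes and depends on the chosen threshold. A model trained to emit one prediction per object can skip it entirely, which is where the latency reduction comes from.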

Deploying YOLOv10 at the Edge Made Easy, in Just One Minute!

Jetson-examples offers one-line deployment projects and edge AI applications, including generative AI applications such as Ollama and Llama3, as well as computer vision models like YOLOv8 and YOLOv10. We have pre-configured all environments for you so that each project can be deployed with a single command.

Deploy with jetson-examples: https://github.com/Seeed-Projects/jetson-examples/blob/main/reComputer/scripts/yolov10/README.md

Steps to Get Started:

- Get a Jetson Orin device from Seeed; our full-system devices come with JetPack pre-installed.

- Install the jetson-examples toolkit using the command:

pip3 install jetson-examples

- Run the YOLOv10 model.

- Check real-time inferencing results through the web UI.
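For anyone scripting device setup, the install and run steps above can be sketched as a small Python helper. The install command is the one quoted in the steps. The run command is an assumption: it follows the `reComputer run <example>` launcher pattern described in the linked README, so check it there before relying on it. The `deploy` function and its `dry_run` flag are hypothetical names introduced only for this example.

```python
import shlex
import subprocess

# Step commands. The first is quoted in the steps above; the second assumes
# the `reComputer run <example>` launcher syntax from the linked README.
STEPS = [
    ["pip3", "install", "jetson-examples"],
    ["reComputer", "run", "yolov10"],
]


def deploy(dry_run=True):
    """Run each step in order; with dry_run=True only return the command lines."""
    planned = [shlex.join(cmd) for cmd in STEPS]
    if not dry_run:
        for cmd in STEPS:
            subprocess.run(cmd, check=True)  # stop at the first failing step
    return planned


# Print the commands without executing anything.
for line in deploy(dry_run=True):
    print(line)
```

Calling `deploy(dry_run=False)` on the Jetson would execute the steps; typing the same two commands in a terminal achieves the same result.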

Deploy YOLOv10 on NVIDIA Jetson Orin easily and leverage its real-time, end-to-end object detection capabilities to power your edge AI applications efficiently!