Local Voice Chatbot – Deploy Riva and Llama2 on reComputer

Introducing our Local Voice Chatbot: a solution that runs entirely on local hardware, built on Nvidia Riva and Meta Llama2. Because it does not depend on cloud services, it keeps voice data on the device and avoids network latency.

In a world where artificial intelligence is evolving at an unprecedented pace, human-computer interaction has taken a decisive turn towards voice. This shift is particularly evident in smart homes, personal assistants, and customer service, where demand for seamless, responsive voice chatbots is rising. However, reliance on cloud-based solutions has raised concerns about data privacy and network latency. In response, we present a Local Voice Chatbot that runs locally, addressing privacy concerns and ensuring fast responses.

Traditional voice chatbots send user speech and text to remote servers, so conversations leave the device and every reply waits on a network round-trip. This has made the need for a more secure and efficient, locally operated voice interaction system increasingly apparent.

Our project deploys a voice chatbot that runs entirely on local hardware, mitigating privacy concerns and offering faster response times. To achieve this, we combine Nvidia Riva for speech recognition and synthesis with Meta Llama2 for language understanding, creating a robust and private voice interaction system that keeps user data on the device.

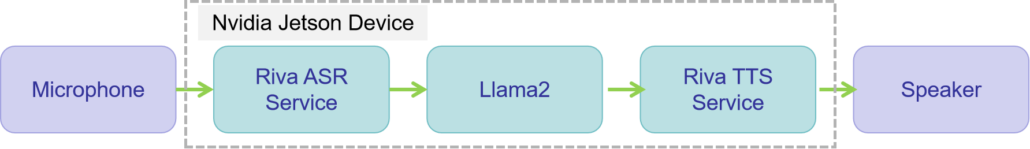

Now, let's break down the system architecture step by step:

- The user speaks into the microphone, and the Riva automatic speech recognition (ASR) service, running locally on the Jetson device, processes the voice input.

- The ASR service converts the spoken words into text, which is then sent to the local Llama2 model for intent recognition and dialogue management.

- Llama2 processes the text, determines the appropriate response, and generates a text response.

- The text response is sent to the Riva text-to-speech (TTS) service, which converts it into synthesized speech.

- The synthesized speech is played through the connected speaker, allowing the user to hear the chatbot’s response.
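The five steps above can be sketched as a single conversational turn. This is a minimal sketch, not the project's implementation: `fake_asr`, `fake_llm`, `fake_tts`, and `VoicePipeline` are hypothetical names, and the three functions are stand-ins for calls to the Riva ASR service, the local Llama2 model, and the Riva TTS service, so the example runs without any of them installed.

```python
from dataclasses import dataclass, field
from typing import Callable, List


# Hypothetical stand-ins for the three local services. In the real deployment
# these would call Riva ASR, the local Llama2 model, and Riva TTS.
def fake_asr(audio: bytes) -> str:
    """Pretend speech recognition: treat the 'audio' bytes as UTF-8 text."""
    return audio.decode("utf-8")


def fake_llm(prompt: str) -> str:
    """Pretend language model: echo the recognized text back as a reply."""
    return f"You said: {prompt}"


def fake_tts(text: str) -> bytes:
    """Pretend speech synthesis: encode the reply text as bytes."""
    return text.encode("utf-8")


@dataclass
class VoicePipeline:
    """Chains ASR -> LLM -> TTS for one user turn (hypothetical helper)."""
    asr: Callable[[bytes], str]
    llm: Callable[[str], str]
    tts: Callable[[str], bytes]
    history: List[str] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.asr(audio)      # steps 1-2: speech -> text
        reply = self.llm(text)      # step 3: text -> response text
        self.history.extend([text, reply])  # keep the dialogue so far
        return self.tts(reply)      # step 4: response text -> audio to play (step 5)


pipeline = VoicePipeline(asr=fake_asr, llm=fake_llm, tts=fake_tts)
speech = pipeline.handle_turn(b"hello")
print(speech)  # b'You said: hello'
```

Keeping each stage behind a plain function means a stub can later be swapped for a real client without changing the turn logic.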

One more thing: the Riva server and Llama2 each run in a separate terminal, and both must stay running for the chatbot to work. Together, this architecture provides a secure, private, and fast-responding voice interaction system without relying on cloud services, addressing data privacy and network latency concerns.
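Because the two services run as separate processes, it can help to confirm both are reachable before starting a conversation. The sketch below is an assumption-laden illustration, not part of the project: it only tests whether a TCP port accepts connections. Port 50051 is Riva's default gRPC port, while the Llama2 port and the names `SERVICES`, `service_is_up`, and `check_services` are hypothetical.

```python
import socket

# Riva's gRPC server listens on port 50051 by default. The Llama2 port is a
# hypothetical placeholder; it depends on how the model is served.
SERVICES = {
    "riva": ("localhost", 50051),
    "llama2": ("localhost", 8080),  # hypothetical port
}


def service_is_up(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections and timeouts
        return False


def check_services(services=SERVICES) -> dict:
    """Map each service name to whether its port is currently reachable."""
    return {name: service_is_up(host, port) for name, (host, port) in services.items()}


if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name}: {'up' if up else 'down'}")
```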

For more detailed setup information and advanced configurations, please refer to our wiki page.

Demonstration: