Generative AI at the Edge Fundamentals: What Is It and How Does It Work?

The next significant breakthrough in modern AI came with the advent of GPT (Generative Pre-trained Transformer). Leveraging vast amounts of data from the internet, the core technology behind GPTs has enabled AI models to become more generalized. They are free from the limitations of conventional AI approaches, such as relying heavily on predefined rules and patterns, or having machines make predictions solely from the relationships between data and labels they have learned. In the generative wave, results become flexible and virtually unlimited: we only need to type or speak a prompt to the machine, making life smarter and more convenient. Now, let’s dive in to understand generative AI at the edge and what we can do with it!

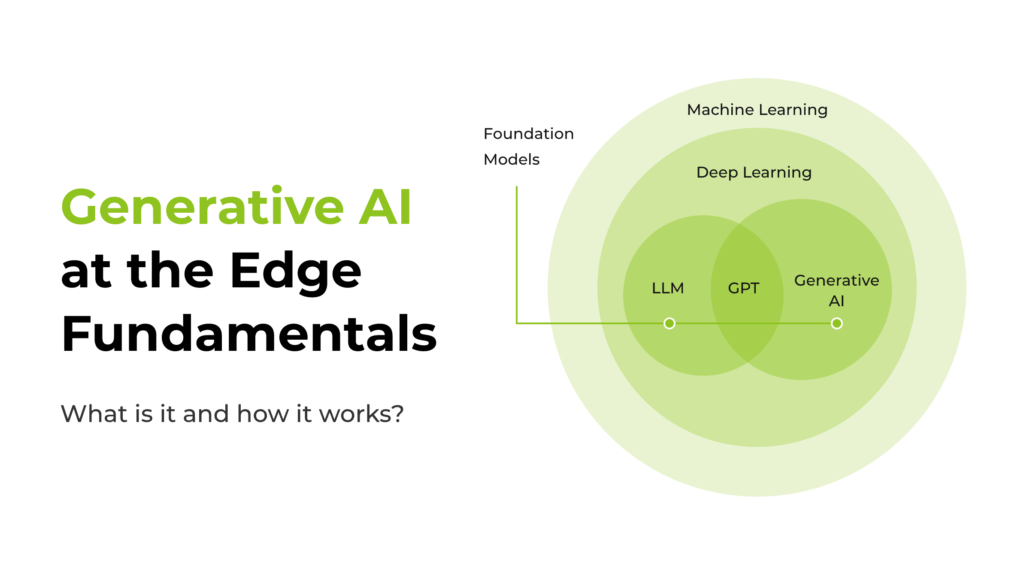

What is Generative AI?

Generative AI uses deep-learning neural networks to learn patterns from unstructured data. Its initial training blends unsupervised and semi-supervised methods, and fine-tuning then adds supervised techniques. The resulting model can autonomously create new, original content across various domains, including text, images, audio, and synthetic data. Visit our Generative AI website for more insights.

Generative AI Architecture Underneath

Generative AI builds on the concept of the foundation model: a model pre-trained on a huge amount of data that serves as the starting point for various downstream tasks, with the Transformer as its underlying architecture. Once pre-trained, the model can be fine-tuned for specific tasks or used as is for various creative applications, such as language generation, text completion, or even image synthesis.

Transformer

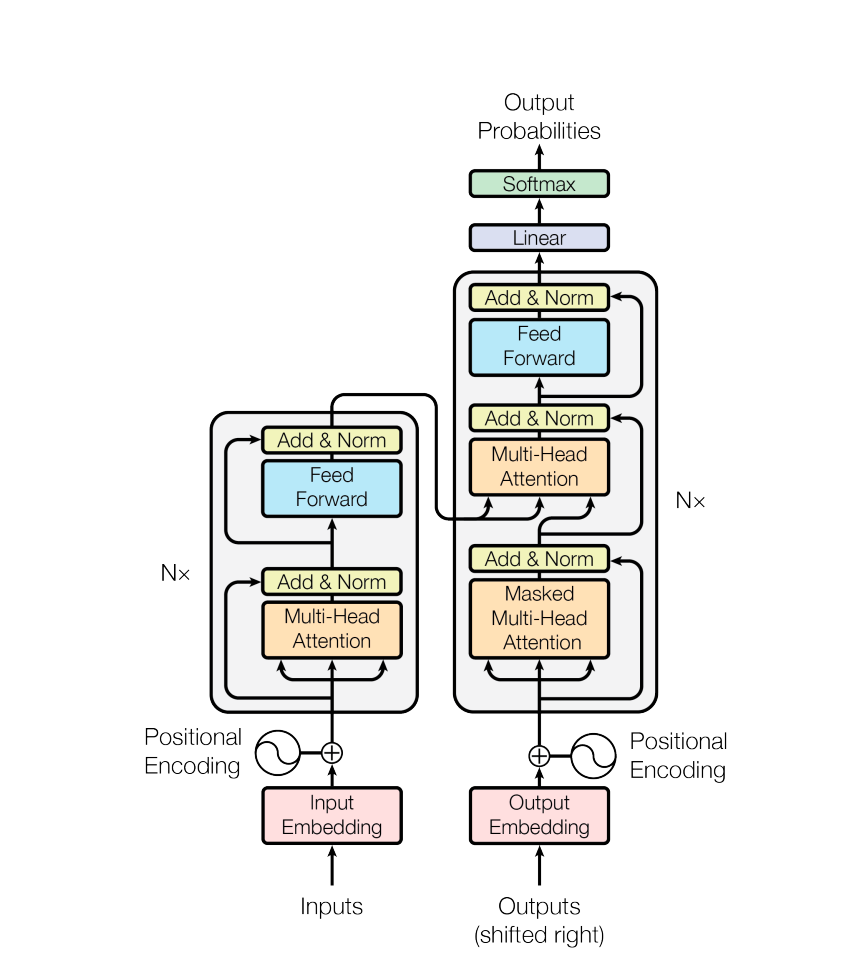

The Transformer is a groundbreaking architecture in natural language processing, characterized by its encoder-decoder structure and a self-attention mechanism that attends to the whole input at once rather than processing vectors one by one in order.

In the encoder, the input sequence undergoes multi-head self-attention, allowing the model to focus on different parts of the sequence simultaneously. The decoder, responsible for generating the output sequence, uses a similar mechanism, with masked self-attention so that each position only sees earlier outputs, plus an additional attention layer over the encoder’s output. Because attention by itself ignores word order, positional encodings are added to the inputs of both the encoder and the decoder to maintain the order of words. Feedforward neural networks within each layer process the information derived from the attention mechanisms, enabling the model to capture complex relationships in the data. Additionally, layer normalization and residual connections contribute to stable and efficient training, allowing the model to learn intricate patterns in sequential data.
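To make the attention step more concrete, here is a minimal single-head sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not a production implementation: a real Transformer first applies learned linear projections to obtain the queries, keys, and values, whereas this sketch feeds the token vectors in directly. The function names and toy vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head.

    Each argument is a list of equal-length vectors, one per token.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this token against every token in the sequence at once.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy 3-token sequence with 2-dimensional vectors (values are made up).
# Assumption: no learned projections, so Q = K = V = the token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Each output vector mixes information from every token in the sequence, which is what lets the model consider the whole input rather than stepping through it in order.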

Foundation Model

Understanding the framework of a foundation model involves recognizing its role as a pre-trained model that serves as a starting point for various downstream tasks.

Take language models like GPT as an example: the foundation model is trained on an extensive and diverse dataset using unsupervised learning (more precisely, self-supervised learning, since the training signal comes from the text itself). During this pre-training phase, the model learns the nuances of language, capturing contextual relationships and patterns. The resulting foundation model is then fine-tuned for specific tasks or applications, adapting its pre-learned knowledge to address more specialized challenges.
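As a rough illustration of why pre-training needs no human labels, the sketch below builds next-word training pairs directly from raw text. It is a simplification under stated assumptions: real language models operate on subword tokens and much longer contexts, and the `next_token_pairs` helper and its `context_size` parameter are hypothetical names used only for this example.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) training pairs from raw text.

    No human labels are needed: the target is simply the next word.
    Assumption: whitespace splitting stands in for a real tokenizer.
    """
    words = text.split()
    pairs = []
    for i in range(1, len(words)):
        # Keep at most `context_size` preceding words as the context.
        context = words[max(0, i - context_size):i]
        pairs.append((context, words[i]))
    return pairs


pairs = next_token_pairs("the model learns the nuances of language")
```

Every position in the text yields one training example, which is how a large corpus turns into a very large training set without manual annotation.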

This pre-training followed by fine-tuning approach empowers the foundation model to excel in a wide range of language-related tasks, from text completion to sentiment analysis. Let’s take a look at some classic foundation models that can be deployed at the Edge:

- LLM: A Large Language Model (LLM) is a powerful AI model for natural language processing and machine learning. Trained extensively on diverse textual data, it excels at comprehending and generating human-like language, and it can be fine-tuned for specific language tasks or domains. One notable application of LLMs is Llamaspeak, an interactive chat application that uses live NVIDIA Riva ASR/TTS technology to let users hold spoken conversations with a locally running LLM. Check out our wiki to learn how to build a local chatbot by deploying Riva and Llama2 on reComputer.

- CLIP: Contrastive Language-Image Pretraining (CLIP) is a computer vision model developed by OpenAI. It excels at understanding the relationship between images and their corresponding textual descriptions, building on a large body of work on zero-shot transfer, natural language supervision, and multimodal learning. With CLIP, you only need to define the candidate prompts or descriptions for the objects in a scene; CLIP then predicts the most probable class for the given image or video based on its extensive pre-training. One illustrative application is automated labeling that pairs the Vision Transformer-based OWL-ViT detector with CLIP. This enables zero-shot object detection and classification, which not only streamlines the labeling workflow but also lets the model classify objects it has never encountered before.
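The zero-shot step described for CLIP can be sketched as follows: compare one image embedding against the embeddings of several candidate prompts and pick the closest match. This is a minimal sketch with made-up 3-dimensional embeddings. In practice the embeddings come from CLIP's image and text encoders and are much larger, and the `zero_shot_classify` helper and its `temperature` scale factor are assumptions for illustration, not CLIP's actual API.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, prompt_embeddings, temperature=100.0):
    """Return the best-matching prompt and a probability per prompt."""
    prompts = list(prompt_embeddings)
    # Scaled similarity between the image and each candidate prompt.
    logits = [
        temperature * cosine(image_embedding, prompt_embeddings[p])
        for p in prompts
    ]
    # Softmax over the prompts (max subtracted for numerical stability).
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    probs = {p: e / total for p, e in zip(prompts, exps)}
    best = max(probs, key=probs.get)
    return best, probs


# Hypothetical embeddings; a real pipeline would obtain these from CLIP.
prompt_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.2, 0.1]

label, probs = zero_shot_classify(image_embedding, prompt_embeddings)
```

Adding a new class only requires adding a new prompt to the dictionary, with no retraining, which is what makes the approach attractive for labeling workflows.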

Generative AI Model Types

Text-to-Text

Text-to-text models transform natural language inputs into corresponding text outputs. Specifically trained to understand and generate mappings between text pairs, these models offer a versatile toolkit for a spectrum of tasks, such as multilingual translation, document summarization, information extraction, search, content clustering, and even nuanced content editing and rewriting.

Text-to-Image

Text-to-image models represent a novel frontier in AI. They are trained on extensive datasets of images paired with concise text descriptions. Using methods such as diffusion, they progressively refine their understanding of the intricate relationship between textual prompts and the corresponding visual features, which lets them translate prompts into vibrant visual outputs. A prime application lies in image generation and editing, where the model’s capacity to comprehend and render visual concepts from textual cues enhances creativity and efficiency.

Text-to-Task

Text-to-task models are meticulously trained to execute precise actions or tasks in response to textual input. These tasks encompass a broad spectrum, including answering queries, conducting searches, making predictions, and undertaking various other actions. These models find versatile applications in virtual assistants, where their capabilities streamline interactions, and in automation, where their proficiency in understanding and responding to text prompts enhances operational efficiency.

What’s Next? Ready for Practical Applications?

To perform these GenAI tasks smoothly at the edge, NVIDIA Jetson can run large language models (LLMs), vision transformers, and Stable Diffusion locally, efficiently and stably. That includes the largest Llama-2-70B model on Jetson AGX Orin, and Llama-2-13B at interactive rates. Powered by the Jetson Orin NX with up to 100 TOPS of AI performance, our reServer Industrial J4012 edge device can perform on-site video analytics tasks seamlessly. It offers expandable local storage through 2 drive bays for 2.5″ SATA HDD/SSD, along with 5 GbE RJ45 ports, 4 of which support 802.3af PSE, making it an ideal choice for deploying local large language models with real-time processing across multiple streams.
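A quick back-of-the-envelope calculation helps show which models can fit on which device. The sketch below estimates the memory needed for model weights alone. The 16-bit and 4-bit precisions are illustrative assumptions rather than figures from any specific deployment, and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params_billion, bits_per_weight):
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    return num_params_billion * bits_per_weight / 8


# Assumption: 16-bit is unquantized half precision, 4-bit is a common
# quantized format; both are illustrative choices.
for name, params_b in [("Llama-2-13B", 13), ("Llama-2-70B", 70)]:
    for bits in (16, 4):
        size = weight_memory_gb(params_b, bits)
        print(f"{name} at {bits}-bit: ~{size:.1f} GB")
```

Under these assumptions, Llama-2-70B needs roughly 35 GB at 4-bit, which is why a module with 64 GB of memory such as the larger Jetson AGX Orin variant is a plausible target, while Llama-2-13B at about 6.5 GB is within reach of a 16 GB module.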

You can use miniGPT-4 to write content, generate image descriptions, deploy virtual conversational agents, and even generate websites from handwritten drafts.

For environmental perception, Vision2Audio, based on the LLaVA model, bridges the gap between vision and sound, allowing visually impaired individuals to perceive their surroundings through verbal instructions.

To comprehend multimodal input, you can use CLIP to generalize across diverse classes through zero-shot learning. It performs well in a spectrum of tasks, from classifying images based on textual descriptions to retrieving images with specific textual queries. Its capabilities extend to object detection, text-based image generation, and various natural language processing tasks such as sentiment analysis and visual question answering, making it a powerful tool for AI applications that require a nuanced comprehension of both image and text data. Check out the demos of how to deploy CLIP on reComputer Jetson Orin NX.

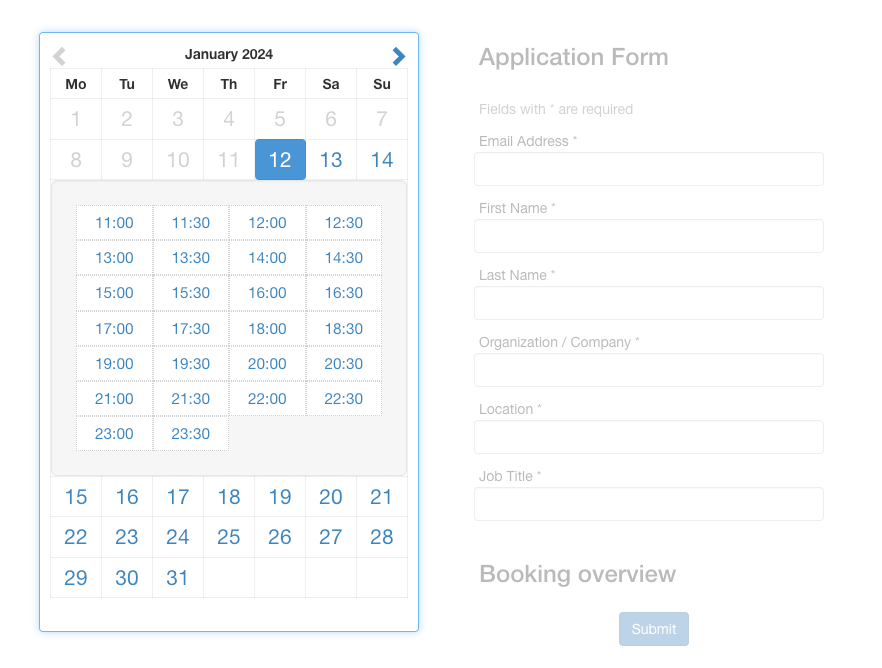

Free Virtual Trial of the NVIDIA Jetson AGX Orin Developer Kit!

Ready to dive into your first generative AI application? Schedule a complimentary virtual trial with our NVIDIA Jetson AGX Orin Developer Kit. Unleash your creative ideas into the physical world with cutting-edge GenAI technology!

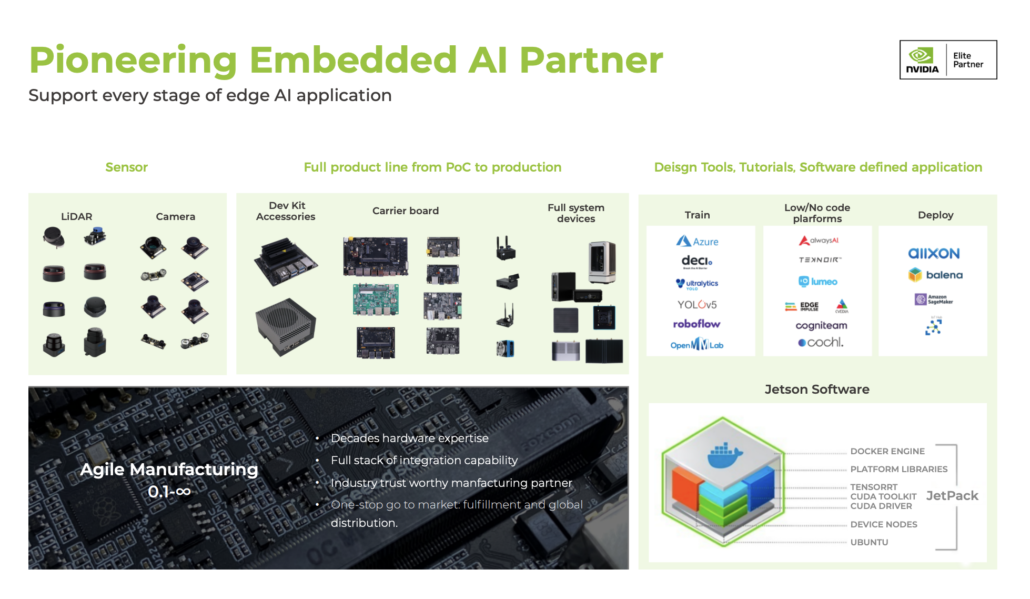

Seeed: NVIDIA Jetson Ecosystem Partner

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Take the first step and send us an email at edgeai@seeed.cc to become a part of this exciting journey!

Download our latest Jetson Catalog to find one option that suits you well. If you can’t find the off-the-shelf Jetson hardware solution for your needs, please check out our customization services, and submit a new product inquiry to us at odm@seeed.cc for evaluation.