Snake Recognition System: Harnessing LoRaWAN and XIAO ESP32S3 Sense for TinyML

Updated on Feb 28th, 2024

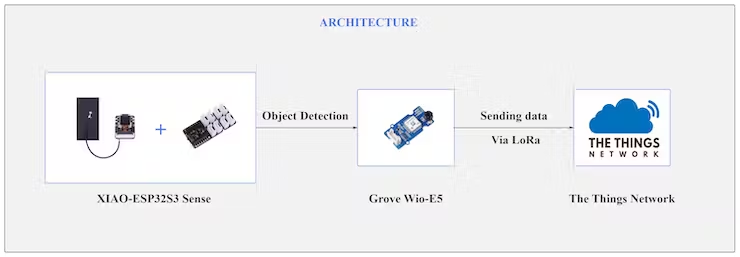

Snakes pose a real danger on farms, and bites from venomous species can cause serious medical emergencies. This Snake Recognition System, built around the XIAO ESP32S3 Sense, detects snakes in farm fields and alerts people over LoRaWAN.

Seeed Hardware: Seeed Studio XIAO ESP32S3 Sense, Grove – Wio-E5 (STM32WLE5JC), Grove Base for XIAO, SenseCAP M2 Multi-Platform LoRaWAN Indoor Gateway(SX1302) – EU868

Software: Arduino IDE, Edge Impulse

Industry: Agriculture, Urban Wildlife Monitoring, Zoos and Aquariums, Conservation Biology, Tourism and Nature Conservation, Research and Education

The Background

Snakes often enter farms, threatening human safety. Chloe Zhang’s research highlights snakebite as a neglected problem in many countries, especially in Africa, Asia, and Latin America. The numbers are alarming: Asia sees around 2 million snakebite cases each year, and in Africa an estimated 435,000 to 580,000 people require treatment annually. Bites from venomous snakes can lead to severe medical emergencies, including paralysis, respiratory problems, and bleeding disorders.

The Challenge

Chloe’s project faced three significant challenges. First, Wi-Fi and cellular networks are ill-suited to the vast, remote expanse of agricultural fields. Second, the equipment had to be compact so that outdoor deployment stays practical and affordable. Third, the system needed to transmit over long distances while keeping power consumption low.

The Solution

Hardware Selection

- Seeed Studio XIAO ESP32S3 Sense: a cost-effective board with an OV2640 camera sensor, a digital microphone, and SD card support. Its camera and ESP32-S3 processor let it run an image recognition model directly on the device, so only the recognition results need to be sent over the network.

- Grove – Wio-E5 (STM32WLE5JC): LoRaWAN’s wide coverage and low power consumption make this LoRa module a good choice for sending the XIAO’s image recognition results to the IoT platform. Its plug-and-play design, built-in AT commands, and Arduino compatibility make it easy to integrate.

- Grove Base for XIAO: connects the XIAO ESP32S3 Sense to the Grove – Wio-E5.

- SenseCAP M2 Multi-Platform LoRaWAN Indoor Gateway(SX1302) – EU868: Chloe needed an affordable gateway that still performs well, and the M2 meets both requirements.

Training an object detection model with Edge Impulse

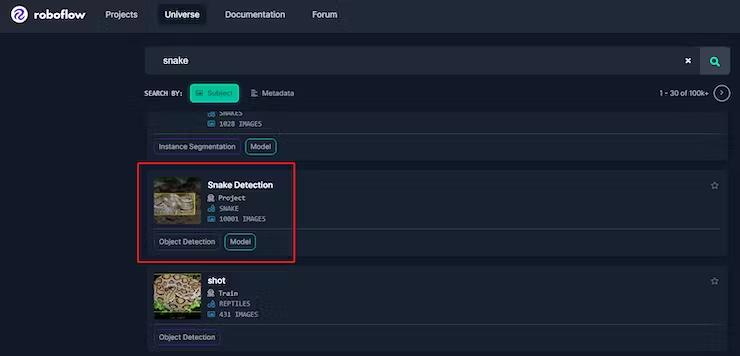

1. Gathering a dataset

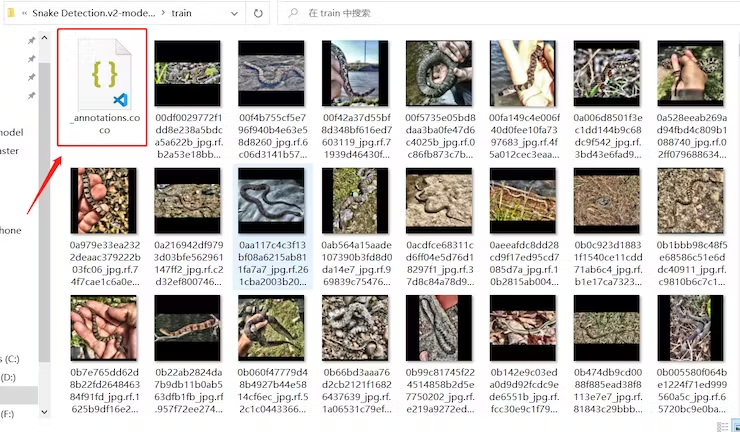

Training an object detection model begins with acquiring a high-quality dataset of target images. You can source relevant datasets from platforms such as Kaggle, Roboflow, and OpenDataLab. Download your chosen dataset in COCO format; it arrives as a compressed package.

Once decompressed, it contains “train” and “test” folders holding the data for model training and validation. Each folder includes a JSON file with the image labels, so no manual labeling is needed. If you do need to label images yourself, consider the labelme annotation tool.
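COCO annotations store each box as `bbox: [x, y, width, height]` in pixels, with (x, y) at the box’s top-left corner. If you want to sanity-check the labels or convert them for another tool, the minimal C++ sketch below shows that arithmetic. The struct and function names (`CocoBox`, `toCorners`, `toNormalizedCenter`, `isValid`) are hypothetical helpers, not part of any library.

```cpp
#include <cassert>

// A COCO box: top-left corner (x, y) plus width and height, in pixels.
struct CocoBox {
    double x, y, width, height;
};

// The same box as top-left and bottom-right corners, in pixels.
struct CornerBox {
    double x_min, y_min, x_max, y_max;
};

// Center-based box normalized to [0, 1] by the image size.
struct NormalizedCenterBox {
    double cx, cy, w, h;
};

CornerBox toCorners(const CocoBox& b) {
    return {b.x, b.y, b.x + b.width, b.y + b.height};
}

NormalizedCenterBox toNormalizedCenter(const CocoBox& b, double imgW, double imgH) {
    assert(imgW > 0 && imgH > 0);
    return {(b.x + b.width / 2.0) / imgW,
            (b.y + b.height / 2.0) / imgH,
            b.width / imgW,
            b.height / imgH};
}

// Quick label check: the box has a positive size and lies inside the image.
bool isValid(const CocoBox& b, double imgW, double imgH) {
    return b.width > 0 && b.height > 0 && b.x >= 0 && b.y >= 0 &&
           b.x + b.width <= imgW && b.y + b.height <= imgH;
}
```

For example, a box `[100, 50, 200, 100]` in a 400×300 image spans (100, 50) to (300, 150) and has a normalized center of (0.5, 0.333).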

2. Build a new project on Edge Impulse

- Create a new project.

- Click on “Data acquisition” and then the “upload” button. Select a folder, choose files, and specify “train” or “test” depending on the folder’s content. Then, upload the data.

- After uploading, review the whole dataset in Edge Impulse and check that the labels are correct.

- Click “Create Impulse” and change the resize mode to “Fit longest axis.” Save the impulse.

- Click “Image” and generate features.

- Start model training; the trained model is then deployed for use in the Arduino IDE.

- Once model training is complete, click on “Deployment,” choose the Arduino library, disable the EON Compiler, select “Quantized (int8),” and then build.
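As we understand Edge Impulse’s “Fit longest axis” resize mode, the image is scaled so that it fits inside the model input without distortion, and the leftover area is padded (letterboxing). The C++ sketch below computes that scale and padding and maps a point from model-input coordinates back to the camera frame. It assumes the padding is split evenly on both sides; the helper names are hypothetical, and the 320×240 frame and 96×96 input in the example are illustrative values, not settings from the original project.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Scale and padding for a "fit longest axis" (letterbox) resize.
struct Letterbox {
    double scale;  // factor applied to both axes, so the aspect ratio is kept
    int scaledW;   // image width after scaling
    int scaledH;   // image height after scaling
    int padX;      // left padding (assumes padding is split evenly)
    int padY;      // top padding (assumes padding is split evenly)
};

// Compute how a srcW x srcH frame fits into a dstW x dstH model input.
Letterbox fitLongestAxis(int srcW, int srcH, int dstW, int dstH) {
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    // The smaller ratio makes the limiting axis fill the input exactly
    // while the other axis fits inside it.
    double scale = std::min(static_cast<double>(dstW) / srcW,
                            static_cast<double>(dstH) / srcH);
    int w = static_cast<int>(std::lround(srcW * scale));
    int h = static_cast<int>(std::lround(srcH * scale));
    return {scale, w, h, (dstW - w) / 2, (dstH - h) / 2};
}

// Map a point from model-input coordinates back to the original frame.
void toSourceCoords(const Letterbox& lb, double x, double y,
                    double& srcX, double& srcY) {
    srcX = (x - lb.padX) / lb.scale;
    srcY = (y - lb.padY) / lb.scale;
}
```

For a 320×240 frame and a 96×96 input this gives a 96×72 image with 12 pixels of padding above and below, so the point (48, 48) in the model input maps back to (160, 120) in the frame.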

Note:

- Edge Impulse’s Developer plan limits dataset size and training time. Assess your dataset and model complexity beforehand to make sure the project stays within these limits.

- Training parameters should be adjusted to the dataset size and model structure; this project used the default parameters.
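Selecting “Quantized (int8)” stores the model’s weights and activations as 8-bit integers instead of 32-bit floats, which typically makes the model smaller and faster on a microcontroller. To our understanding, Edge Impulse’s int8 models follow TensorFlow Lite’s affine scheme, where each value is represented as `real = scale * (q - zero_point)`. The C++ sketch below shows that mapping; `QuantParams`, `quantize`, and `dequantize` are hypothetical names, and the scale and zero point in the example are illustrative, since the real ones are stored in the exported model.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Parameters of an affine int8 quantization: real = scale * (q - zeroPoint).
struct QuantParams {
    float scale;
    int32_t zeroPoint;
};

// Float -> int8: divide by the scale, round, shift by the zero point,
// then saturate to the int8 range [-128, 127].
int8_t quantize(float x, const QuantParams& p) {
    assert(p.scale > 0.0f);
    int32_t q = static_cast<int32_t>(std::lround(x / p.scale)) + p.zeroPoint;
    q = std::max<int32_t>(-128, std::min<int32_t>(127, q));
    return static_cast<int8_t>(q);
}

// int8 -> float: the inverse mapping (exact only up to rounding error).
float dequantize(int8_t q, const QuantParams& p) {
    return p.scale * static_cast<float>(static_cast<int32_t>(q) - p.zeroPoint);
}
```

With the illustrative parameters scale = 1/255 and zero point = -128, the inputs 0.0 and 1.0 map to -128 and 127, and anything outside that range saturates.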

3. Compiling the code in the Arduino IDE

- Installation of Object Detection Libraries

Completing the deployment step above produces a compressed package containing the trained model and the library files required to run it in Arduino.

To install this library in the Arduino IDE, refer to “Installing Libraries” in the Arduino Documentation.

- Implementing the Trained Model on XIAO

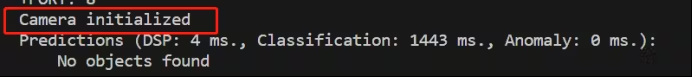

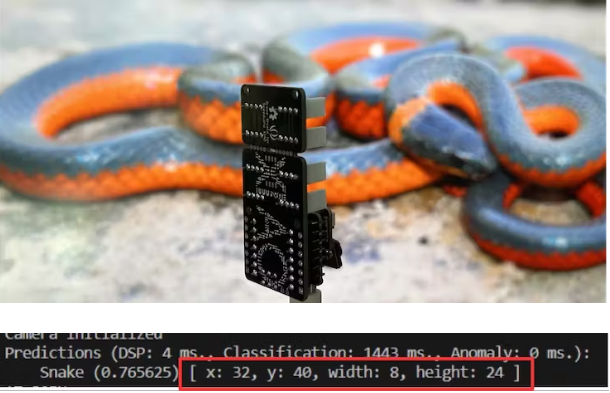

With the deep learning libraries from the previous step installed, the trained model can now be used for inference. First, initialize the camera to capture real-time image data. On successful initialization, the serial monitor displays “camera initialized,” as shown in the figure.

Next, capture image data from the camera and run a forward pass through the loaded model to determine the image’s category. Each category is represented by a sequential number; for snake detection, “1” means a snake was detected and “0” means no snake was detected. Once this classification result is available, it is transmitted over LoRa.
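The step that turns the model’s detections into the 1/0 value could look like the C++ sketch below. `Detection` is a hypothetical stand-in for one bounding box reported by the model (label, confidence, and box), and the `"snake"` label and 0.5 confidence threshold are assumptions to tune for your own model. On the device, the resulting byte is what gets handed to the LoRa module.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-in for one bounding box reported by the model.
struct Detection {
    const char* label;             // class name, e.g. "snake"
    float value;                   // confidence in [0, 1]
    uint32_t x, y, width, height;  // box in model-input pixels
};

// Returns 1 if any box labeled `target` reaches the confidence threshold, else 0.
uint8_t snakeFlag(const std::vector<Detection>& detections,
                  const char* target = "snake", float threshold = 0.5f) {
    for (const Detection& d : detections) {
        if (std::strcmp(d.label, target) == 0 && d.value >= threshold) {
            return 1;
        }
    }
    return 0;
}
```

Thresholding before transmission keeps the uplink to a single byte and filters out low-confidence boxes on the device rather than in the dashboard.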

4. Transmit data to TTN and visualize it on Datacake

- Data Transmission to The Things Network

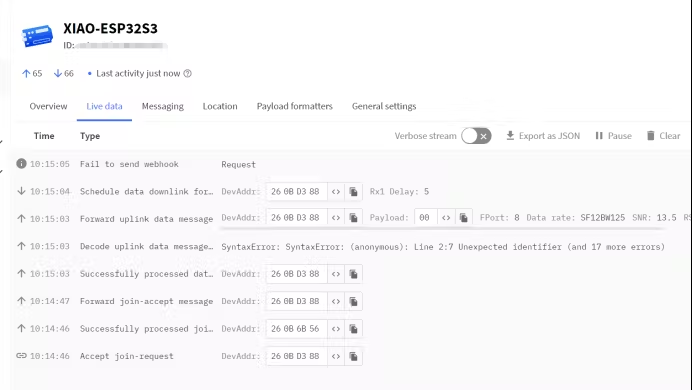

Consult the guide “Sending Wio-E5 Data to Datacake via TTN – Hackster.io” for instructions on creating an application, adding a gateway, and registering devices. To use The Things Network (TTN), a LoRaWAN gateway must be within range of the device; on a remote farm this usually means deploying your own. As shown in the image below, the XIAO successfully uploads recognition results to TTN via the Grove – Wio-E5.

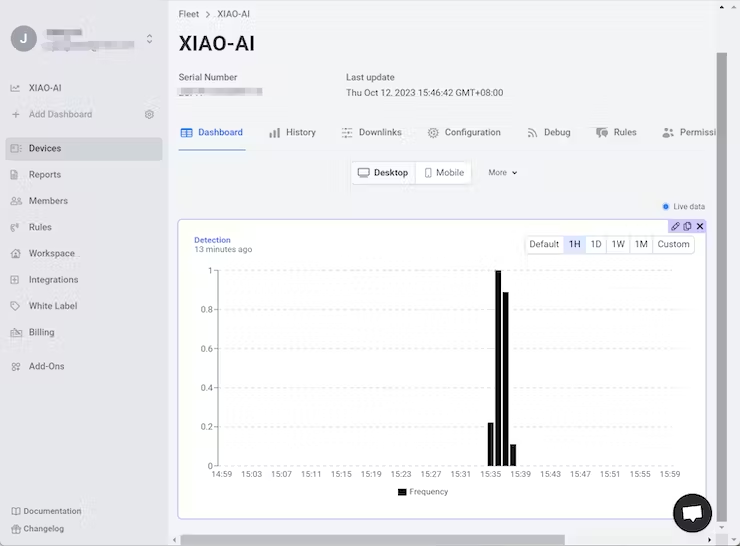
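The XIAO drives the Grove – Wio-E5 through AT commands over UART. The source does not show which command Chloe’s sketch uses; the C++ sketch below assumes the Wio-E5’s `AT+MSGHEX` command (an unconfirmed uplink with a hex payload) and builds the command string for a one-byte detection result. It also assumes the module has already joined the network (for example via OTAA with `AT+JOIN`). `toHex` and `buildUplinkCommand` are hypothetical helper names.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Encode bytes as an uppercase hex string, e.g. {0x01} -> "01".
std::string toHex(const std::vector<uint8_t>& bytes) {
    std::string out;
    char buf[3];  // two hex digits plus the terminating null
    for (uint8_t b : bytes) {
        std::snprintf(buf, sizeof buf, "%02X", b);
        out += buf;
    }
    return out;
}

// Build the (assumed) Wio-E5 command for an unconfirmed hex uplink.
// On the XIAO, this string would be written to the UART wired to the Wio-E5.
std::string buildUplinkCommand(const std::vector<uint8_t>& payload) {
    return "AT+MSGHEX=\"" + toHex(payload) + "\"\r\n";
}
```

For a detected snake, `buildUplinkCommand({0x01})` yields `AT+MSGHEX="01"` followed by a CR/LF line ending.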

- Data Visualization Using Datacake

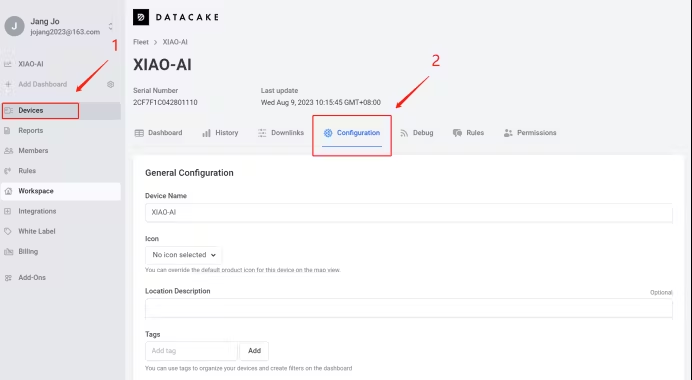

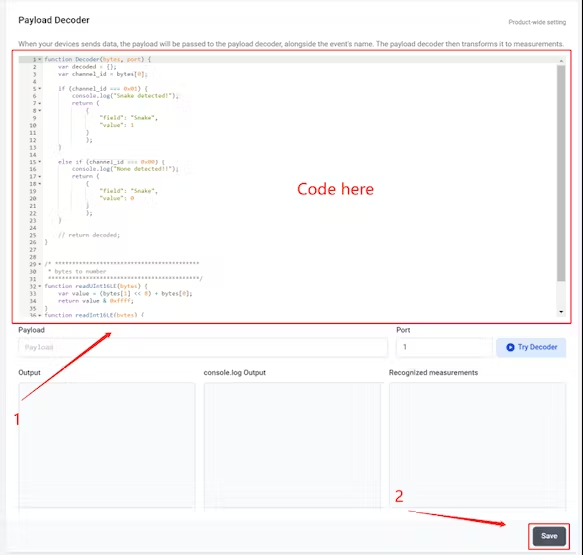

For data visualization, refer to the same guide, “Sending Wio-E5 Data to Datacake via TTN – Hackster.io”. Before the data can be visualized, it must be decoded to extract the necessary information. This is done on the device’s Configuration -> Payload Decoder page, as illustrated in the figure.

Then replace the code in the Payload Decoder with the code Chloe provided. Once these steps are complete, the data from the XIAO can be visualized on the dashboard.

The value stays at 0 while no snake is present and changes to 1 when a snake is detected during that time period.
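Datacake payload decoders are written in JavaScript, and Chloe’s decoder is not reproduced here. To keep all examples in one language, the C++ sketch below expresses the same decoding idea under one assumption: the uplink carries a single byte, 0x00 for no snake and 0x01 for a snake. It parses a hex payload as displayed in the TTN console and returns -1 for anything else, so the caller can skip malformed uplinks instead of plotting them. All function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Value of one hex digit, or -1 if the character is not a hex digit.
int hexDigit(char c) {
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

// Parse a hex payload such as "01" into bytes; false on malformed input.
bool hexToBytes(const std::string& hex, std::vector<uint8_t>& out) {
    if (hex.size() % 2 != 0) return false;
    out.clear();
    for (std::size_t i = 0; i < hex.size(); i += 2) {
        int hi = hexDigit(hex[i]);
        int lo = hexDigit(hex[i + 1]);
        if (hi < 0 || lo < 0) return false;
        out.push_back(static_cast<uint8_t>(hi * 16 + lo));
    }
    return true;
}

// Assumed payload layout: exactly one byte, 0 = no snake, 1 = snake.
// Returns -1 for any other payload.
int decodeSnakeFlag(const std::vector<uint8_t>& bytes) {
    if (bytes.size() != 1 || bytes[0] > 1) return -1;
    return bytes[0];
}
```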

Once the program is uploaded to the XIAO, the camera captures images for snake detection. The x, y, width, and height values of each detection give the target’s position within the image. If needed, additional hardware such as a servo-driven pan-tilt head can be added to track the target by keeping it in the center of the frame. LoRaWAN gives the project ample coverage for farm areas, with a range that can extend to several kilometers.
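For the optional pan-tilt tracking idea, a simple proportional controller is one way to get started. The C++ sketch below assumes x and y mark the box’s top-left corner, handles only the horizontal (pan) axis, and uses a gain, deadband, and 0 to 180 degree servo range that are illustrative assumptions; the servo driver call itself is left out, and `Box`, `horizontalError`, and `nextPanAngle` are hypothetical names. Whether a positive error should increase or decrease the angle depends on how the servo is mounted.

```cpp
#include <algorithm>
#include <cassert>

// Detection box: top-left corner plus size, in pixels (assumed convention).
struct Box {
    int x, y, width, height;
};

// Horizontal error of the box center, normalized to [-1, 1]
// (negative = left of the frame center, positive = right of it).
double horizontalError(const Box& b, int frameWidth) {
    double center = b.x + b.width / 2.0;
    double half = frameWidth / 2.0;
    return (center - half) / half;
}

// One proportional control step: move the pan servo by gainDeg * error
// degrees, ignore small errors inside the deadband, and clamp to [0, 180].
double nextPanAngle(double currentAngle, double error,
                    double gainDeg = 10.0, double deadband = 0.05) {
    if (error > -deadband && error < deadband) return currentAngle;
    double next = currentAngle + gainDeg * error;
    return std::max(0.0, std::min(180.0, next));
}
```

A proportional step like this can oscillate if the gain is too high relative to the inference rate; lowering the gain or widening the deadband is a reasonable first adjustment.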

The Conclusion

In conclusion, Chloe Zhang has been working on a snake detection project and plans to extend it to count snakes and identify species using more advanced AI and computer vision techniques. This work has significant implications for wildlife conservation, ecological research, and snakebite prevention. She is committed to advancing the project and encourages others to explore AI and LoRaWAN technology in their own work.

More Information

Learn more about the project on Hackster: LoRaWAN Based TinyML Snake Recognition System

Explore more TinyML stories:

- Empowering Travel Safety with XIAO ESP32S3 Sense, Round Display for XIAO, and TinyML: AI-Driven Keychain Detection for Instant Alerts and Location Request

- NMCS: Empowering Your Coffee Experience with Sound and Vision Classification

- IoT-Enabled Tree Disease Detection: Harnessing Vision AI, Wio Terminal, and TinyML

- Revolutionizing Wildlife Monitoring: TinyML, IoT, and LoRa Technologies with XIAO ESP32S3 Sense and Wio E5 Module

- Innovative Community Projects That Utilized Grove-Vision AI Module: 11 Inspiring Stories

Choose the best tool for your TinyML projects:

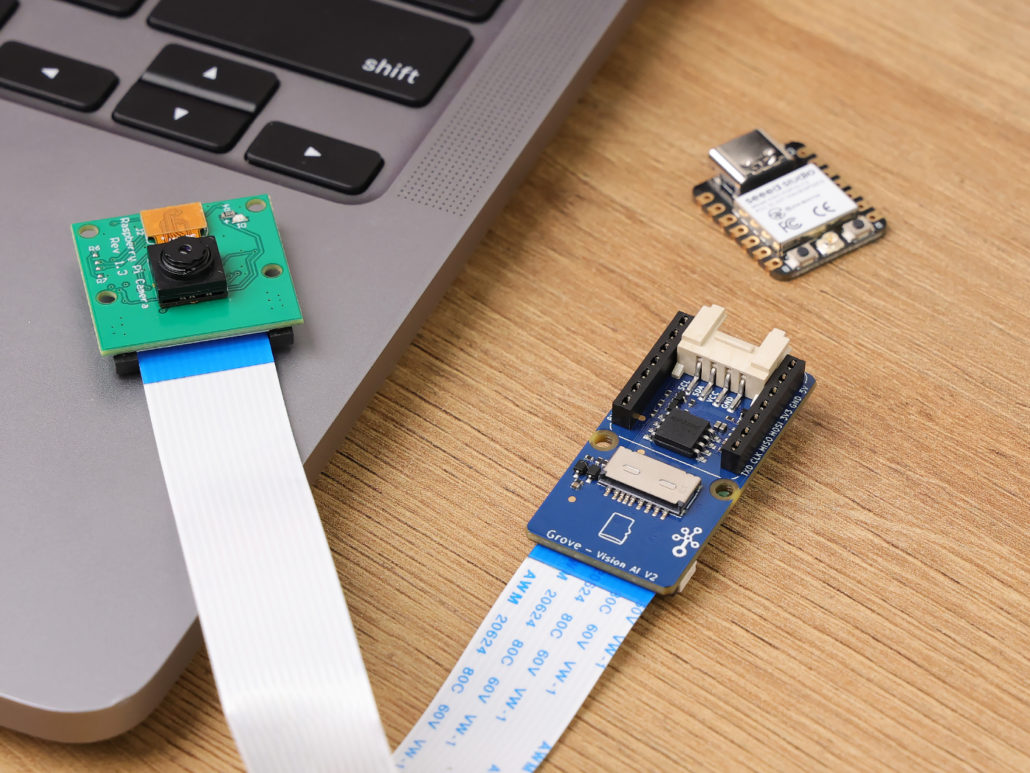

Grove – Vision AI Module V2

It’s an MCU-based vision AI module powered by the Himax WiseEye2 HX6538 processor, featuring an Arm Cortex-M55 and an Arm Ethos-U55. It integrates Arm Helium technology, which is optimized for vector data processing. Highlights include:

- Award-winning low power consumption

- Significant uplift in DSP and ML capabilities

- Designed for battery-powered endpoint AI applications

With support for the TensorFlow and PyTorch frameworks, it lets users deploy both off-the-shelf and custom AI models from Seeed Studio SenseCraft AI. The module also offers a range of interfaces, including IIC, UART, SPI, and Type-C, for easy integration with popular products such as Seeed Studio XIAO, Grove, Raspberry Pi, BeagleBoard, and ESP-based boards for further development.

Seeed Studio XIAO ESP32S3 Sense & Seeed Studio XIAO nRF52840 Sense

The Seeed Studio XIAO Series are thumb-sized development boards that share a similar hardware structure. The name “XIAO” stands for half of their character, “Tiny”; the other half is “Puissant”.

Seeed Studio XIAO ESP32S3 Sense integrates an OV2640 camera sensor, a digital microphone, and SD card support. Combining embedded ML computing power with imaging capability, this board is a great tool for getting started with intelligent voice and vision AI.

Seeed Studio XIAO nRF52840 Sense offers Bluetooth 5.0 wireless connectivity and low power consumption. With an onboard IMU and PDM microphone, it is well suited to embedded machine learning projects.


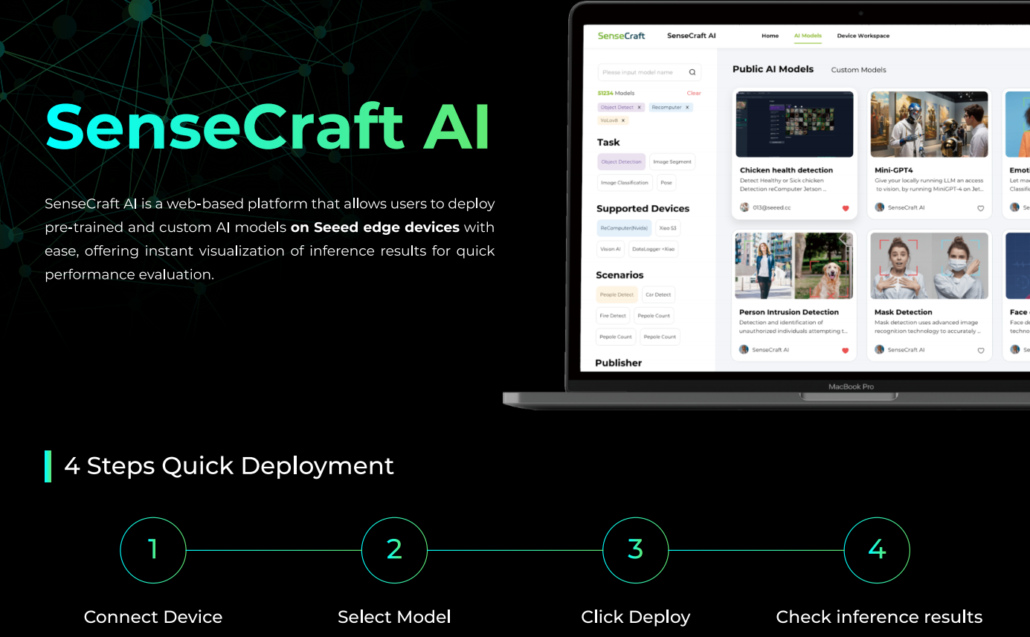

SenseCraft AI

SenseCraft AI is a no-code/low-code platform for easy AI model training and deployment. It natively supports Seeed products, so trained models are fully compatible with Seeed hardware. Deploying models through the platform also shows identification results on the website immediately, enabling a quick assessment of model performance.

Built for TinyML applications, it lets you deploy off-the-shelf or custom AI models in a few steps: connect the device, select a model, and view the identification results.

Please feel free to reach out to iot@seeed.cc with any inquiries or to discuss projects further. Your questions and interest are welcome.