IoT-Enabled Tree Disease Detection: Harnessing Vision AI, Wio Terminal, and TinyML

Discover a powerful tool to detect and prevent tree diseases from harming our environment by utilizing IoT and TinyML technologies.

Seeed Hardware: SenseCAP K1100 – The Sensor Prototype Kit with LoRa® and AI, Grove – CO2 & Temperature & Humidity Sensor (SCD30)

Software: Edge Impulse, Arduino, Thonny, Autodesk Fusion 360, Ultimaker Cura

Industry: Smart Agriculture, Forestry

The Background

With climate change and extensive deforestation posing serious threats to trees and plants, the susceptibility of these crucial life forms to contagious diseases has escalated. These diseases, primarily fungal in nature, are triggered by factors such as drought, elevated carbon dioxide levels, overcrowding, and damage to stems or roots. They can rapidly spread over long distances, jeopardizing ecosystems. Given the vital role trees play in pollination, the spread of tree diseases can lead to devastating consequences, including crop yield losses, animal fatalities, infectious epidemics, and even land degradation due to soil erosion. To mitigate these challenges, early disease detection is imperative, but it’s equally crucial to consider environmental stressors that can render trees more susceptible to disease. Therefore, a holistic approach that integrates disease detection and environmental monitoring is needed to protect forests, farms, and arable lands from these hazards.

The Challenge

To address this issue, Kutluhan Aktar has developed a project called “IoT AI-Driven Tree Disease Identifier w/ Edge Impulse & MMS.” The project uses the Grove Vision AI module to capture images of infected trees and build a comprehensive dataset. An object detection model is then trained and deployed with Edge Impulse to identify tree diseases at an early stage. The detection results are sent via MMS, enabling swift action to prevent further spread and harm to forests, farms, and arable lands.

The Solution

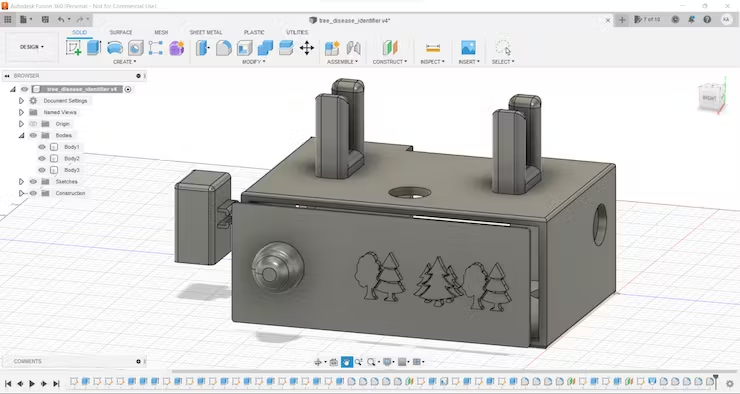

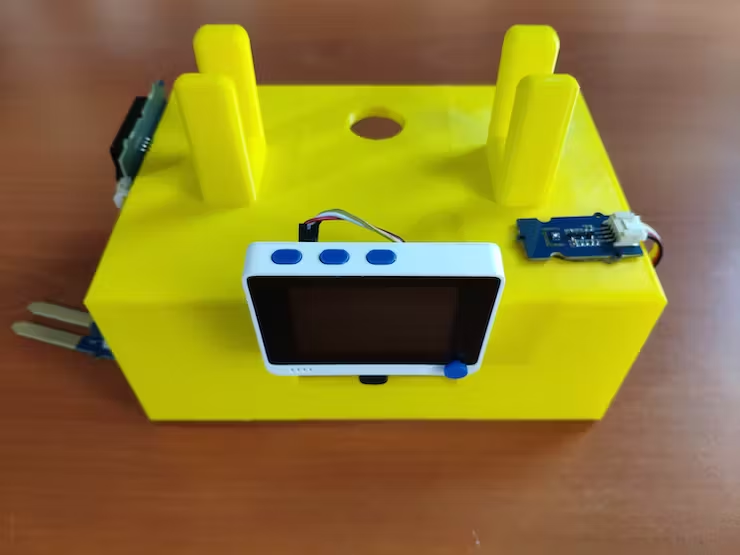

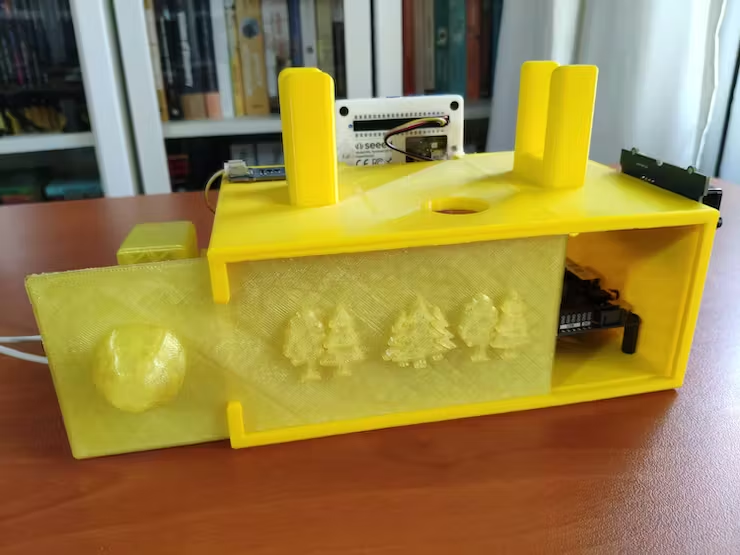

Step 1: Designing and printing a forest-themed case

Kutluhan designed and 3D printed a durable outdoor case for the device, aiming for an affordable and user-friendly solution. The case includes a sliding cover with a handle to protect the device from dust and secure its wires, plus a movable part for attaching the Vision AI module. To give it a forest-themed look, he added tree icons. Two stands on top of the case hold an HDMI screen for monitoring. He designed the 3D models in Autodesk Fusion 360 and sliced them in Ultimaker Cura, then printed the case with autumn-themed PLA filaments. After printing, he assembled the case, connected the sensors, and attached components such as the Vision AI module and the HDMI display to LattePanda 3 Delta, making the device ready for outdoor use.

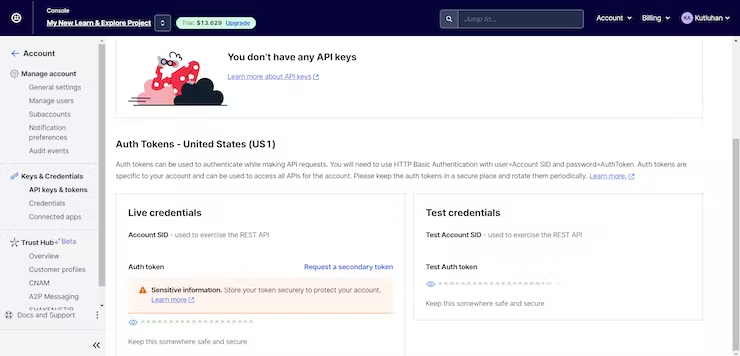

Step 2: Creating a Twilio account to send MMS

Kutluhan set up Twilio for sending MMS messages. Twilio offers a straightforward way to send image messages globally, and it’s free for trial accounts. The process involved signing up for Twilio, verifying a phone number, and configuring MMS settings in Python. He also activated a virtual phone number, added it to a Messaging Service, and obtained the necessary credentials for sending MMS messages via Twilio’s API.
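As a rough illustration of what an MMS request looks like, the sketch below builds (but does not send) a POST request to Twilio’s Messages resource using only the Python standard library. The project itself may well use Twilio’s Python helper library instead; the function name, credentials, phone number, and image URL here are placeholders, not values from the project.

```python
import base64
import urllib.parse
import urllib.request

TWILIO_API = "https://api.twilio.com/2010-04-01"


def build_mms_request(account_sid, auth_token, messaging_service_sid,
                      to_number, body, media_url):
    """Build (but do not send) a POST request for Twilio's Messages resource."""
    url = f"{TWILIO_API}/Accounts/{account_sid}/Messages.json"
    form = urllib.parse.urlencode({
        "To": to_number,
        "MessagingServiceSid": messaging_service_sid,
        "Body": body,
        "MediaUrl": media_url,  # must be a publicly reachable image URL
    }).encode("ascii")
    # Twilio uses HTTP Basic auth with the account SID and auth token.
    token = base64.b64encode(f"{account_sid}:{auth_token}".encode()).decode()
    return urllib.request.Request(
        url,
        data=form,
        headers={"Authorization": f"Basic {token}"},
        method="POST",
    )


# Placeholder credentials and numbers, for illustration only.
req = build_mms_request(
    "ACxxxxxxxx", "auth_token", "MGxxxxxxxx",
    "+15550100", "Tree disease detected",
    "https://example.com/detections/latest.jpg",
)
print(req.full_url)
# Sending would be: urllib.request.urlopen(req)
```

Because `MediaUrl` has to point at an image Twilio can fetch, the detection image needs to be hosted somewhere public before the message is sent.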

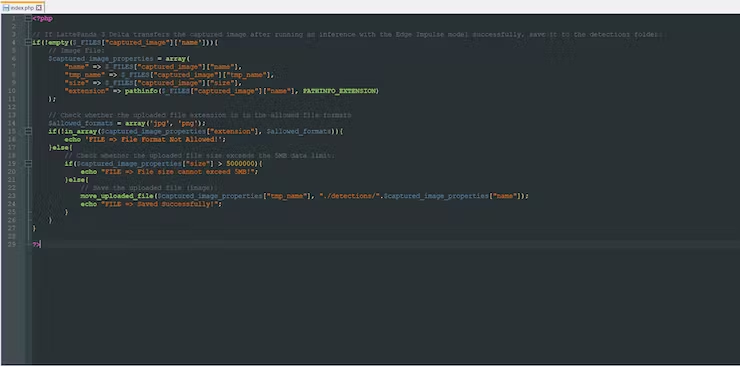

Step 3: Developing a web application in PHP to save the transferred detection results (images)

In this step, he created a PHP-based web application to facilitate the sending of modified images with bounding boxes via MMS using Twilio. LattePanda 3 Delta, after adding bounding boxes to the detected objects, sends the modified image to this web application. The application checks for successful image transfers, file format compatibility, and file size within a 5MB limit, and then stores the received image in a “detections” folder. The web application is hosted on his website, theamplituhedron.com, but could also be set up with a localhost tunneling service like ngrok for direct image transfer from a local environment via Twilio.
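The checks described above can be sketched as follows. This is a Python illustration of the validation logic only, not the author’s PHP code: the function name, the accepted extensions, and the reading of “5MB” as 5 × 1024 × 1024 bytes are all assumptions.

```python
import os

MAX_BYTES = 5 * 1024 * 1024                     # assumed reading of the 5MB limit
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}  # assumed; the source lists no formats


def check_upload(filename, size_bytes, transfer_ok=True):
    """Return (accepted, detail) for an uploaded detection image.

    On success, detail is the path the file would be stored under;
    on failure, it is a short reason string.
    """
    if not transfer_ok:
        return False, "upload failed"
    ext = os.path.splitext(filename)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        return False, "unsupported file format"
    if size_bytes > MAX_BYTES:
        return False, "file larger than 5MB"
    # basename() drops any directory components sent by the client.
    return True, "detections/" + os.path.basename(filename)


print(check_upload("detection_01.jpg", 120_000))
print(check_upload("detection_01.exe", 120_000))
```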

Step 4: Setting up Wio Terminal on the Arduino IDE

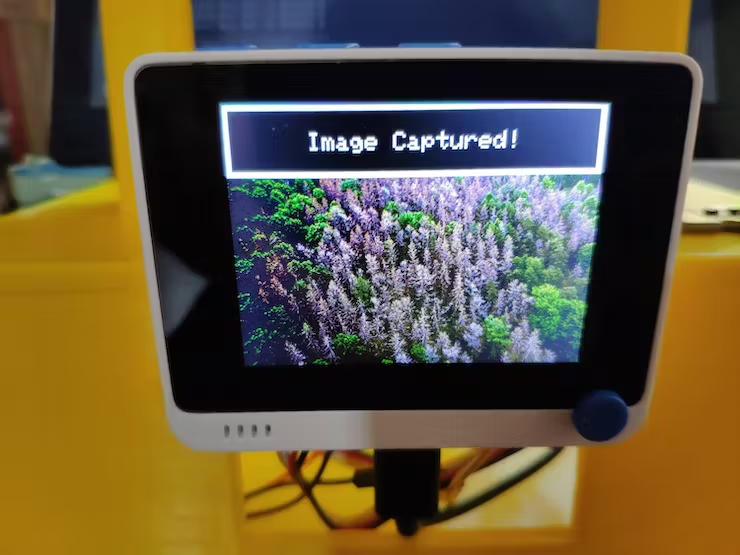

In this step, he set up Wio Terminal to log environmental data and display images on its TFT LCD screen. He began by configuring Wio Terminal on the Arduino IDE and installing necessary libraries for sensors. To display images correctly, he resized them in Microsoft Paint and converted them to a compatible BMP format using a Python script. The script also allowed for choosing between 8-bit and 16-bit BMP formats. Finally, he transferred the converted BMP files to the SD card and copied the RawImage.h file to the Arduino sketch folder for image display.
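To make the 8-bit versus 16-bit choice concrete, here is a minimal sketch of the per-pixel conversion such a script typically performs, assuming the two options correspond to the common RGB332 and RGB565 packings. The source does not show the converter, so the function names and the packing assumption are illustrative.

```python
def rgb888_to_rgb565(r, g, b):
    """Pack 8-bit R, G, B into 16 bits: 5 bits red, 6 green, 5 blue."""
    return ((r & 0xF8) << 8) | ((g & 0xFC) << 3) | (b >> 3)


def rgb888_to_rgb332(r, g, b):
    """Pack 8-bit R, G, B into 8 bits: 3 bits red, 3 green, 2 blue."""
    return (r & 0xE0) | ((g & 0xE0) >> 3) | (b >> 6)


def convert_pixels(pixels, bits=16):
    """Convert a list of (r, g, b) tuples to packed integers."""
    if bits == 16:
        return [rgb888_to_rgb565(*p) for p in pixels]
    if bits == 8:
        return [rgb888_to_rgb332(*p) for p in pixels]
    raise ValueError("bits must be 8 or 16")


red_green_blue = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]
print([hex(v) for v in convert_pixels(red_green_blue, bits=16)])
print([hex(v) for v in convert_pixels(red_green_blue, bits=8)])
```

The 16-bit form keeps more color detail, while the 8-bit form halves the file size, which matters when images are read from an SD card by a microcontroller.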

Step 5: Collecting environmental factors and sending commands to LattePanda 3 Delta w/ Wio Terminal

In this step, after setting up Wio Terminal and installing the necessary libraries and modules, Kutluhan programmed Wio Terminal to collect environmental data and save it to a CSV file on the SD card. Each record includes the date, CO2 level, temperature, humidity, moisture, tVOC (total volatile organic compounds), and CO2eq (carbon dioxide equivalent). To control LattePanda 3 Delta, he used the configurable buttons on Wio Terminal: Button A sends the image capture command (‘A’), and Button B sends the model run command (‘B’). Wio Terminal also sends the model run command (‘B’) to LattePanda 3 Delta automatically every 5 minutes. He adjusted the Histogram library to resize the histograms and change their colors. The code records the environmental factors, displays them on the TFT screen, checks them against thresholds, and raises notifications through the built-in buzzer. Commands reach LattePanda 3 Delta over serial communication.
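The command logic and CSV record described above can be sketched as follows. On the device this runs as an Arduino sketch; the Python below only illustrates the decision logic, and the function names, field names, and the choice not to reset the timer on a manual run are assumptions.

```python
import csv
import io

AUTO_RUN_INTERVAL_S = 5 * 60  # automatic model run every 5 minutes

# Column order follows the list in the text; the names themselves are assumed.
FIELDS = ["date", "co2", "temperature", "humidity", "moisture", "tvoc", "co2eq"]


def next_command(button_a, button_b, now_s, last_auto_run_s):
    """Return (command, new_last_auto_run_s); command is 'A', 'B', or None."""
    if button_a:
        return "A", last_auto_run_s          # manual image capture
    if button_b:
        return "B", last_auto_run_s          # manual model run
    if now_s - last_auto_run_s >= AUTO_RUN_INTERVAL_S:
        return "B", now_s                    # scheduled model run
    return None, last_auto_run_s


def csv_row(reading):
    """Format one sensor reading (a dict keyed by FIELDS) as a CSV line."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow([reading[k] for k in FIELDS])
    return buf.getvalue()


sample = {"date": "2023-01-01", "co2": 400, "temperature": 21.5,
          "humidity": 40, "moisture": 300, "tvoc": 10, "co2eq": 410}
print(csv_row(sample), end="")
print(next_command(False, False, now_s=300, last_auto_run_s=0))
```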

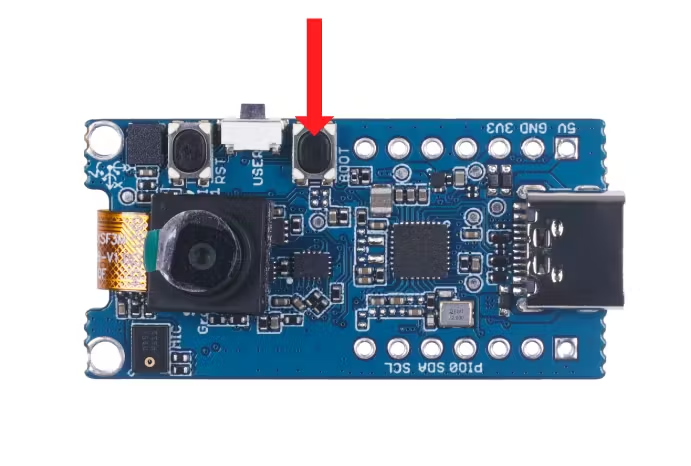

Step 6: Capturing and storing images of diseased trees w/ the Grove Vision AI module

In this step, he programmed LattePanda 3 Delta to capture tree images using the Vision AI module upon receiving the capture command (‘A’) from Wio Terminal via serial communication. Before proceeding, he had to configure the correct settings for Wio Terminal and the Vision AI module on LattePanda 3 Delta. This involved uploading the data collection firmware to the Vision AI module, setting up rules for USB recognition in Ubuntu (Linux), and adjusting permissions for the Wio Terminal serial port.
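A minimal sketch of the receiving side might look like the following. It reads single bytes from any object with a `read(size)` method, which is the interface a pySerial port exposes, and maps ‘A’ and ‘B’ to actions. The action names are hypothetical, and an in-memory stream stands in for the real serial port so the example runs without hardware.

```python
import io

# Command bytes come from the text; the action names are illustrative.
COMMANDS = {b"A": "capture", b"B": "run_model"}


def read_commands(port):
    """Yield an action name for each recognized command byte until the stream ends."""
    while True:
        byte = port.read(1)
        if not byte:          # end of stream (or a read timeout on a real port)
            break
        action = COMMANDS.get(byte)
        if action is not None:
            yield action      # newlines and unknown bytes are skipped


# Stand-in for a serial port: two commands with line endings and one stray byte.
fake_port = io.BytesIO(b"A\nB\r\nxA")
actions = list(read_commands(fake_port))
print(actions)
```

With a real port, a read timeout also returns an empty result, so a long-running listener would keep polling instead of stopping at the first empty read.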

He used a single code file for all functions, which is detailed in Step 8. To obtain the image buffer from the Vision AI module, he modified functions provided by Seeed Studio. The code defines the module’s image descriptions, vendor ID, and product ID, and includes functions for receiving commands from Wio Terminal, searching for the Vision AI module, establishing communication, reading data from the module, and saving captured images with timestamps.
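Saving a captured buffer under a timestamped name can be sketched like this. The file name pattern, the `.jpg` extension, and the function name are assumptions for illustration; the source only says that images are saved with timestamps.

```python
import os
import tempfile
from datetime import datetime


def save_capture(image_bytes, folder, now=None):
    """Write an image buffer to folder using a timestamped file name."""
    now = now or datetime.now()
    os.makedirs(folder, exist_ok=True)
    name = "IMG_" + now.strftime("%Y%m%d_%H%M%S") + ".jpg"  # assumed pattern
    path = os.path.join(folder, name)
    with open(path, "wb") as f:
        f.write(image_bytes)
    return path


# Demonstration with a fake buffer and a fixed timestamp.
with tempfile.TemporaryDirectory() as tmp:
    saved = save_capture(b"\xff\xd8fake-jpeg\xff\xd9", tmp,
                         datetime(2023, 5, 1, 12, 30, 0))
    saved_name = os.path.basename(saved)
    saved_size = os.path.getsize(saved)

print(saved_name, saved_size)
```

Timestamped names keep samples from overwriting each other during a long field session and preserve the capture order.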

To create a comprehensive dataset for his object detection model, he ventured into a forest near his hometown to capture images of trees infected with various foliar and bark diseases. These included leaf rusts, leaf spots, leaf blisters, powdery mildew, needle rusts, needle casts, tar spots, rusts, and anthracnose. The device successfully captured these images, forming the dataset for training and testing his object detection model.

Step 7: Building an object detection (FOMO) model with Edge Impulse

After successfully capturing infected tree images and storing them on LattePanda 3 Delta, the next step involved working on an object detection (FOMO) model to detect potential tree diseases and prevent their spread. To build this model, he chose to use Edge Impulse, a platform known for its model deployment options and the FOMO machine learning algorithm designed for constrained devices like LattePanda 3 Delta.

Edge Impulse FOMO (Faster Objects, More Objects) is an innovative algorithm capable of object detection, object counting, and real-time object tracking with significantly lower processing power and memory requirements compared to other models.
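One reason FOMO is light is that it predicts object centers on a coarse grid instead of regressing full bounding boxes. The sketch below shows the idea with a toy heat map, assuming the default of one output cell per 8×8 pixel block. The threshold and function name are hypothetical, and real FOMO post-processing also merges neighboring cells, which this sketch omits.

```python
CELL_SIZE = 8  # assumed default: one output cell per 8x8 pixel block


def heatmap_to_centroids(heatmap, threshold=0.5, cell_size=CELL_SIZE):
    """Return (x, y, score) pixel centroids for cells at or above threshold."""
    centroids = []
    for row, scores in enumerate(heatmap):
        for col, score in enumerate(scores):
            if score >= threshold:
                x = col * cell_size + cell_size // 2  # center of the cell
                y = row * cell_size + cell_size // 2
                centroids.append((x, y, score))
    return centroids


# Toy 3x3 heat map for a 24x24 input with one confident cell.
heatmap = [
    [0.0, 0.1, 0.0],
    [0.0, 0.2, 0.9],
    [0.0, 0.0, 0.0],
]
centroids = heatmap_to_centroids(heatmap)
print(centroids)
```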

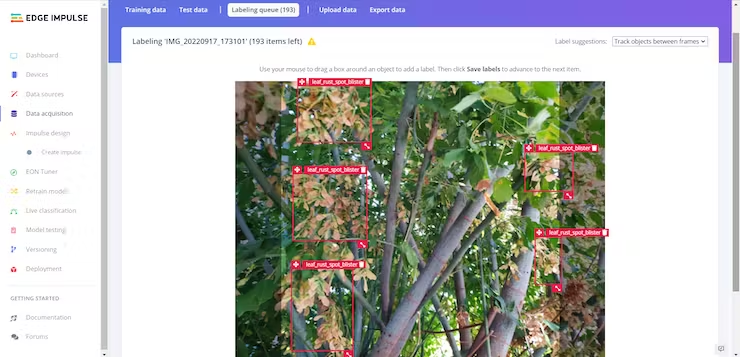

Before creating the model, he needed to format his dataset to include data scaling (resizing) and data labeling. He categorized tree diseases based on whether they affected the foliar or bark parts of the tree and used these categories to label each image sample in Edge Impulse.

He signed up for Edge Impulse, created a new project, and selected the “Bounding boxes (object detection)” labeling method. After uploading his data set, he manually labeled different tree diseases based on the two categories, taking advantage of Edge Impulse’s intuitive labeling interface.

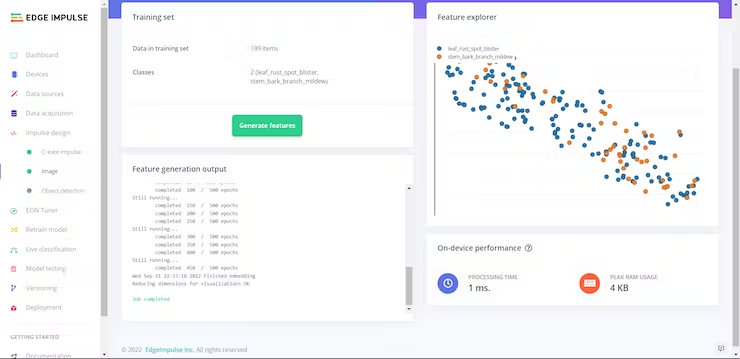

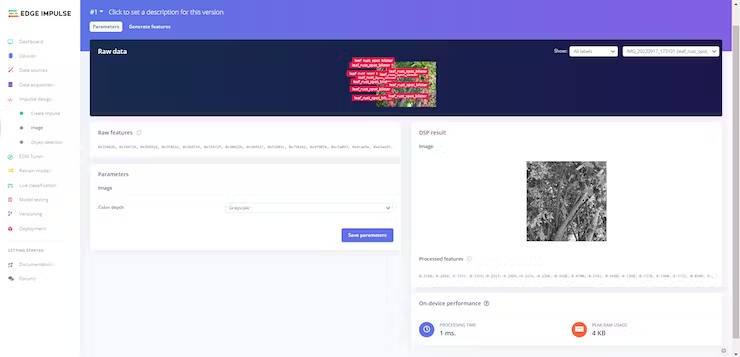

Once the labeling was complete, he designed an impulse for his model using the Image processing block and the Object Detection (Images) learning block. He configured the model’s parameters, set the image dimensions, selected the grayscale color depth, and generated features for the object detection model. He then started training and adjusted the neural network settings and architecture to improve accuracy.

After he trained the model with his data set, Edge Impulse evaluated the F1 score, a combined measure of precision and recall, at approximately 54%. Detection was more accurate for foliar (leaf) tree diseases than for bark (stem) tree diseases, because the training samples for bark diseases were limited in number and highly varied.
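For readers unfamiliar with the metric, the F1 score is the harmonic mean of precision and recall. The short sketch below computes it from detection counts; the counts are made up for illustration and are not results from this project.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from true/false positives and false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 8 correct detections, 2 false alarms, 8 missed objects.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=8)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

A model can score well on precision yet have a modest F1 if it misses many objects, which is one way limited samples for a class can pull the score down.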

He then tested the model, and Edge Impulse evaluated its accuracy at 90%. Finally, he deployed the model as a fully optimized Linux x86_64 application to be used on LattePanda 3 Delta.

Step 8: Setting up the Edge Impulse FOMO model on LattePanda 3 Delta

After deploying the FOMO object detection model as a Linux x86_64 application, he uploaded it to LattePanda 3 Delta, enabling real-time tree disease detection with bounding boxes. He ran the model in Python using Thonny, which required installing several libraries. After he adjusted permissions, LattePanda 3 Delta could capture tree images via the Vision AI module and send the detected labels and modified images as MMS messages through Twilio’s API. The main.py file contains the code for these operations and uses Python threads to run them concurrently.
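One common way to structure such concurrent work is a listener thread that feeds a queue and a worker thread that processes it. The sketch below shows that pattern with stand-in tasks; it is an assumption about the general approach, not the contents of main.py, and all names are hypothetical.

```python
import queue
import threading


def command_listener(incoming, jobs):
    """Forward each received command to the job queue, then signal completion."""
    for cmd in incoming:
        jobs.put(cmd)
    jobs.put(None)  # sentinel: no more commands


def job_worker(jobs, log):
    """Process queued commands in order until the sentinel arrives."""
    while True:
        cmd = jobs.get()
        if cmd is None:
            break
        # Stand-ins for the real capture and inference routines.
        log.append("captured image" if cmd == "A" else "ran model")


jobs = queue.Queue()
log = []
listener = threading.Thread(target=command_listener, args=(["A", "B", "A"], jobs))
worker = threading.Thread(target=job_worker, args=(jobs, log))
listener.start()
worker.start()
listener.join()
worker.join()
print(log)
```

The queue lets the listener keep accepting commands while a slow job, such as model inference, is still running.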

Step 9: Running the FOMO model on LattePanda 3 Delta to detect tree diseases

The Edge Impulse object detection model analyzes each image and assigns confidence scores to two labels: “leaf_rust_spot_blister” and “stem_bark_branch_mildew.” When triggered automatically or manually, the device captures an image and draws bounding boxes around the detected objects. It then saves the modified image, sends it to the web application, and sends an MMS to a verified phone number via Twilio’s API. If no objects are detected, it sends a “Not Detected!” message and logs the results for debugging. The experiments confirm precise detection of foliar tree diseases with accurate bounding boxes.
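The post-processing step can be sketched as follows. The input dictionaries use the `label`, `value`, `x`, `y`, `width`, and `height` keys that Edge Impulse’s Linux Python SDK returns for bounding boxes. The confidence threshold, the message wording other than “Not Detected!”, and the function name are assumptions.

```python
def summarize_detections(bounding_boxes, min_confidence=0.5):
    """Return (message, rectangles) for a list of detection dictionaries.

    Each rectangle is ((x1, y1), (x2, y2)), the corner format that drawing
    calls such as OpenCV's cv2.rectangle expect.
    """
    hits = [b for b in bounding_boxes if b["value"] >= min_confidence]
    if not hits:
        return "Not Detected!", []
    labels = sorted({b["label"] for b in hits})
    rects = [((b["x"], b["y"]), (b["x"] + b["width"], b["y"] + b["height"]))
             for b in hits]
    return "Detected: " + ", ".join(labels), rects


# Made-up detections for illustration.
boxes = [
    {"label": "leaf_rust_spot_blister", "value": 0.82,
     "x": 24, "y": 40, "width": 8, "height": 8},
    {"label": "stem_bark_branch_mildew", "value": 0.31,
     "x": 64, "y": 16, "width": 8, "height": 8},
]
message, rects = summarize_detections(boxes)
print(message, rects)
print(summarize_detections([]))
```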

The Conclusion

This project used an Edge Impulse object detection model to identify foliar tree diseases from captured images. The system added bounding boxes to highlight detected objects and shared the results through both a web application and MMS notifications. It demonstrated reliable detection of foliar diseases and showed the potential for real-world applications in agriculture and plant health monitoring.

More Information

Learn More Project Details on Hackster: IoT AI-driven Tree Disease Identifier w/ Edge Impulse & MMS

Seeed Studio TinyML Case Studies

Please feel free to reach out to maker.team@seeed.cc for any inquiries or if you’d like to engage in further project discussions. Your questions and interest are welcomed.