Developing Vision AI Applications with NVIDIA DeepStream Graph Composer

Picture the usual workflow for developing a video analytics application. You probably have a programming pipeline in mind: take the input stream, decode it, send it to inference, check how many frames per second (FPS) you get, and finally display the resulting video. It works, but it is exhausting.

A bit about Graph Composer

NVIDIA Graph Composer is a visual programming tool for building AI application pipelines through an easy-to-use graphical interface. This low-code tool suite helps you generate DeepStream-compatible video analytics pipelines by dragging and dropping components. Currently, NVIDIA provides several pre-trained models that are ready to drop into your pipeline:

For people/objects (recognition & behavior analysis):

- ClassDetectorModel

- AudioClassifier

- Facial Landmark

- Gesture Recognition

- ActionRecognitionNet

For traffic status tracking/parking management:

- VehicleMakeClassifier

- VehicleTypeClassifier

- CarColorClassifier

- CarDetector360d

Build Video Analytics Application on the Edge

Now, let’s dive into a real demonstration of people traffic detection to see how Graph Composer benefits developers!

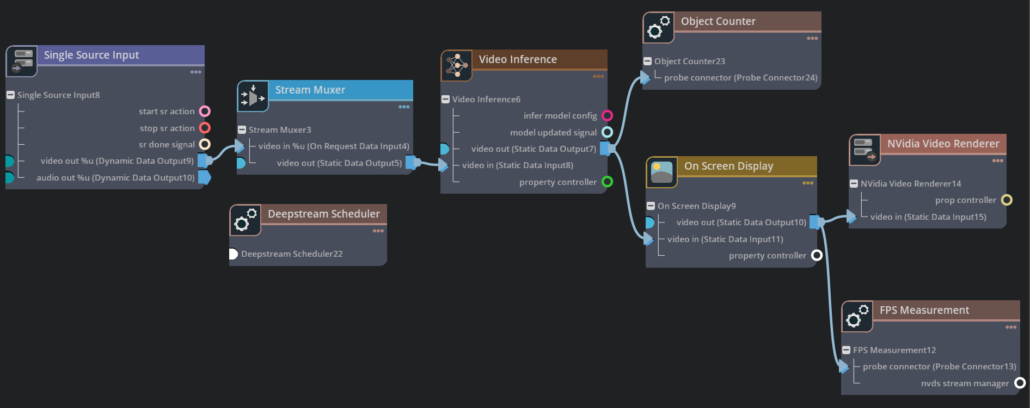

We start with a single source input, which can be either a video file or an RTSP stream, and connect it to the stream muxer, which can handle multiple video inputs simultaneously (even the smallest Jetson Nano edge device can take up to 8 video stream inputs at the same time).
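To make the muxer's role more concrete, here is a minimal Python sketch of the batching idea: frames from several sources are interleaved and grouped into fixed-size batches before inference. This is only a toy illustration under our own assumptions, not how the DeepStream muxer is implemented; the function name `mux_batches` and the `(source_id, frame)` tuples are hypothetical.

```python
from itertools import zip_longest

# Hypothetical helper for illustration only; not part of DeepStream.
_DONE = object()  # sentinel marking a source that has run out of frames


def mux_batches(sources, batch_size):
    """Interleave frames from several sources and yield fixed-size batches.

    Each batch is a list of (source_id, frame) pairs so that downstream
    steps can still tell which stream a frame came from.
    """
    batch = []
    # zip_longest walks all sources in lockstep; finished sources yield _DONE.
    for frames in zip_longest(*sources, fillvalue=_DONE):
        for source_id, frame in enumerate(frames):
            if frame is _DONE:
                continue  # this source has ended, skip it
            batch.append((source_id, frame))
            if len(batch) == batch_size:
                yield batch
                batch = []
    if batch:
        # Flush the final, possibly smaller, batch.
        yield batch


if __name__ == "__main__":
    camera_a = ["a0", "a1"]
    camera_b = ["b0"]
    for b in mux_batches([camera_a, camera_b], batch_size=2):
        print(b)
```

Keeping the source index alongside each frame is a design choice of this sketch: it lets later stages, such as a counter or an on-screen display, attribute results to the right stream.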

The muxer passes these streams to the video inference step, where we apply an object detection algorithm using the PeopleNet model from the NGC model registry. The output of this step branches to the object counter component, which accumulates a running total of each kind of object. Meanwhile, the On Screen Display component draws bounding boxes over the detected objects. Finally, everything goes to the NVIDIA Video Renderer, which renders the results for display. You can check the analysis performance at any time in the execution output from the FPS Measurement component.
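The counting and FPS steps described above can be sketched in plain Python. This is a rough illustration under our own assumptions, not the code behind the Graph Composer components: the class names `ObjectCounter` and `FpsMeter` are hypothetical, and detections are assumed to arrive as a list of label strings per frame. Note that this sketch counts detections per frame, so an object visible in several frames is counted several times.

```python
import time
from collections import Counter


class ObjectCounter:
    """Hypothetical counter: keeps a running total of detections per label."""

    def __init__(self):
        self.totals = Counter()

    def update(self, labels):
        # labels: iterable of class names detected in one frame (assumed format)
        self.totals.update(labels)
        return dict(self.totals)


class FpsMeter:
    """Hypothetical FPS meter: frames processed divided by elapsed time."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock          # injectable clock, handy for testing
        self._start = clock()        # measurement starts at construction
        self._frames = 0

    def tick(self):
        # Call once per processed frame.
        self._frames += 1

    def fps(self):
        elapsed = self._clock() - self._start
        return self._frames / elapsed if elapsed > 0 else 0.0


def run(detections_per_frame):
    """Feed per-frame detections through the counter and the FPS meter."""
    counter, meter = ObjectCounter(), FpsMeter()
    for labels in detections_per_frame:
        counter.update(labels)
        meter.tick()
    return dict(counter.totals), meter.fps()


if __name__ == "__main__":
    frames = [["person", "person", "bag"], ["person"], []]
    totals, fps = run(frames)
    print(totals, f"{fps:.1f} FPS")
```

The clock is passed in as a parameter so the meter can be checked with a fake clock instead of depending on real timing.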

After going through the whole workflow, you’ll see how flexible and easy to use it is. The IDE-like development environment lets you build the application in a very tight loop, and you can watch the execution to iterate quickly.

Stay tuned for our next step: we plan to explore how to import a custom-built detection model into Graph Composer to enrich the application scenarios for video analytics!

Seeed NVIDIA Jetson Ecosystem

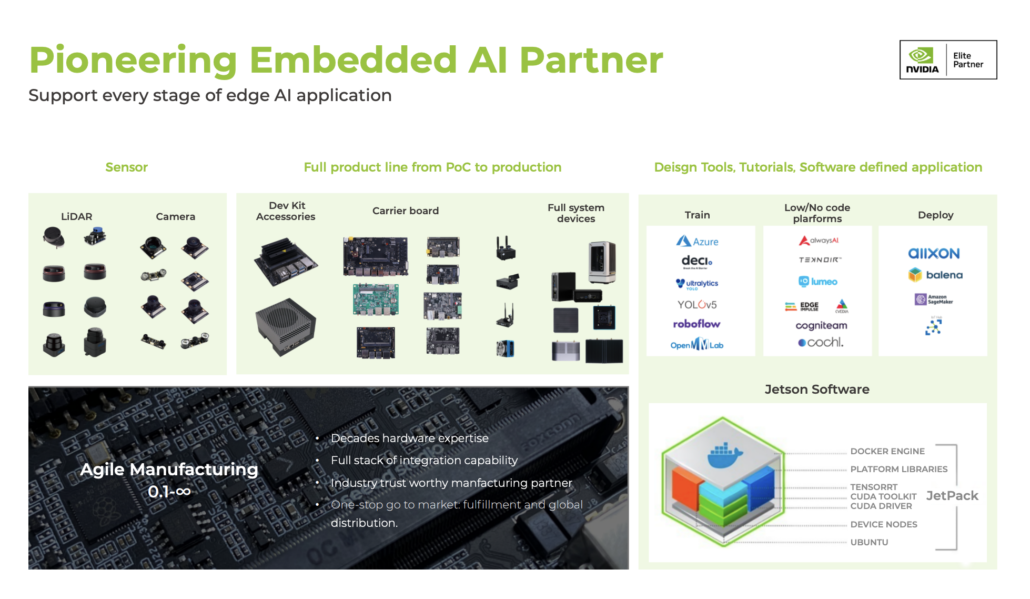

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Take the first step and send us an email at edgeai@seeed.cc to become a part of this exciting journey!

Download our latest Jetson Catalog to find the option that suits you best. If you can’t find an off-the-shelf Jetson hardware solution for your needs, please check out our customization services and submit a new product inquiry to us at odm@seeed.cc for evaluation.