Deploy YOLOv8 models on NVIDIA Jetson using TensorRT

Ultralytics YOLOv8 is a cutting-edge, state-of-the-art (SOTA) model that builds upon the success of previous YOLO versions and introduces new features and improvements to further boost performance and flexibility. YOLOv8 is designed and recognized as fast, accurate, and easy to use, making it an excellent choice for a wide range of object detection and tracking, instance segmentation, image classification, and pose estimation tasks.

Bring the SOTA model to the compact edge device

Previously, in 2022 and this year, we showed how to deploy YOLOv5 and YOLOv8 on NVIDIA Jetson devices using DeepStream-Yolo (kudos to the project!). Following that guide, you can reach around 60 FPS at 640×640 on a Jetson Xavier NX. We have also run performance benchmarks for all computer vision tasks supported by YOLOv8 on the reComputer J4012, powered by the NVIDIA Jetson Orin NX 16GB module. (Did you know we just released the new reComputer Industrial Orin series, with a fanless design and more interfaces for talking to factory machines?)

Now we are excited to share that you can deploy any YOLOv8 model directly using TensorRT.
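As a rough illustration of what "directly using TensorRT" can look like in practice, here is a minimal sketch based on the export mode of the `ultralytics` Python package. It assumes the package is installed on the Jetson together with TensorRT from JetPack; the helper names `build_export_kwargs` and `export_to_tensorrt`, the default weights file `yolov8n.pt`, and the chosen settings (FP16, 640 input size, GPU 0) are illustrative assumptions, not the exact procedure from our wiki.

```python
def build_export_kwargs(half: bool = True, imgsz: int = 640) -> dict:
    """Collect keyword arguments for an Ultralytics TensorRT export.

    format="engine" selects the TensorRT backend; half=True requests FP16.
    These defaults are assumptions for illustration, tune them per device.
    """
    return {"format": "engine", "half": half, "imgsz": imgsz, "device": 0}


def export_to_tensorrt(weights: str = "yolov8n.pt", **overrides) -> str:
    """Export YOLOv8 weights to a TensorRT engine and return the engine path."""
    # Imported lazily so build_export_kwargs works without the package installed.
    from ultralytics import YOLO

    kwargs = {**build_export_kwargs(), **overrides}
    model = YOLO(weights)  # Detect, Segment, and Pose weights load the same way
    return str(model.export(**kwargs))
```

Calling `export_to_tensorrt("yolov8n-seg.pt")` on the device should produce a `.engine` file next to the weights, which can then be loaded back with `YOLO("yolov8n-seg.engine")` for inference. The engine is built for the GPU it was exported on, so run the export on the target Jetson rather than on a desktop machine.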

Beyond YOLOv8 Detect, we also tested the Segment and Pose models, which deliver impressive inference performance on Seeed’s NVIDIA Jetson edge devices. We hope this wiki helps you get the most out of YOLOv8. You can browse the YOLOv8 Docs for details, find trained YOLOv8 models on Roboflow Universe, and annotate and train quickly with Roboflow and Ultralytics HUB!

YOLOv8 models

- Object Detection

- Image Segmentation

- Image Classification

- Pose Estimation

- Object Tracking

Why TensorRT?

NVIDIA® TensorRT™ is an SDK for high-performance deep learning inference, developed by NVIDIA to make inference faster on NVIDIA GPUs. It includes a deep learning inference optimizer and a runtime that together deliver low latency and high throughput for inference applications. For many real-time services and embedded applications, TensorRT can make inference 2 to 3 times faster, or more, compared with running the model natively in PyTorch or as ONNX without TensorRT.
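If you want to check the speedup on your own device, a small timing harness is usually enough. The sketch below is an assumption-laden example rather than our benchmark method: `measure_latency_ms`, `speedup`, and `fps` are hypothetical helper names, and `infer` stands for any zero-argument callable that runs one inference and blocks until the result is ready.

```python
import time
from statistics import mean


def measure_latency_ms(infer, warmup: int = 5, runs: int = 20) -> float:
    """Return the mean wall-clock latency of infer() in milliseconds."""
    for _ in range(warmup):
        infer()  # warm-up calls are not timed (lazy init, caches, clocks)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean(samples)


def speedup(baseline_ms: float, optimized_ms: float) -> float:
    """How many times faster the optimized model is than the baseline."""
    return baseline_ms / optimized_ms


def fps(latency_ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / latency_ms
```

Measure the PyTorch model and the TensorRT engine on the same image and input size, then pass the two latencies to `speedup`. Keeping the warm-up runs out of the average matters on Jetson, where the first few inferences are typically slower than steady state.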

How to train your first YOLOv8 model?

Here we have three recommended methods for training a model:

Ultralytics HUB

You can easily integrate Roboflow into Ultralytics HUB so that all your Roboflow projects are readily available for training. The HUB provides a Google Colab notebook that lets you start training quickly and follow the training progress in real time.

Use a Google Colab workspace created by us.

Here we use the Roboflow API to download the dataset from the Roboflow project.

Use a local PC for the training process.

For this option you need a sufficiently powerful GPU, and you have to download the dataset manually.
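For the local option, the manual steps roughly come down to describing the downloaded dataset in a YAML file and starting a training run. The following is a minimal sketch, assuming the dataset is already unpacked in the usual YOLO layout (`images/train`, `images/val`) and that the `ultralytics` package is installed; `write_data_yaml` and `train_local` are hypothetical helper names, and the epoch count and image size are placeholder values.

```python
from pathlib import Path
from typing import List


def write_data_yaml(dataset_root: str, class_names: List[str],
                    out_file: str = "data.yaml") -> Path:
    """Write a dataset description in the YAML layout Ultralytics expects."""
    root = Path(dataset_root).resolve()
    lines = [
        f"path: {root}",        # dataset root directory
        "train: images/train",  # assumed layout, relative to path
        "val: images/val",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    out = Path(out_file)
    out.write_text("\n".join(lines) + "\n")
    return out


def train_local(data_yaml, weights: str = "yolov8n.pt", epochs: int = 100,
                imgsz: int = 640, device=0):
    """Fine-tune pretrained YOLOv8 weights on the dataset in data_yaml."""
    # Imported lazily so write_data_yaml works without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=str(data_yaml), epochs=epochs, imgsz=imgsz, device=device)
```

A typical call would be `train_local(write_data_yaml("datasets/my_project", ["cat", "dog"]))`, where the dataset path and class names are made up for this example. If you export a dataset from Roboflow in YOLOv8 format it already ships with its own `data.yaml`, so you can pass that file to `train_local` directly.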

Reference

- Seeed NVIDIA Jetson Ecosystem: seeedstudio.com/tag/nvidia.html

- YOLOv8 documentation: docs.ultralytics.com

- Roboflow documentation: docs.roboflow.com

- TensorRT documentation: docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html

- Deploy YOLOv8 at NVIDIA Jetson using TensorRT and DeepStream

- YOLOv8 Performance Benchmarks on NVIDIA Jetson Devices

- Deploy YOLOv5 at NVIDIA Jetson

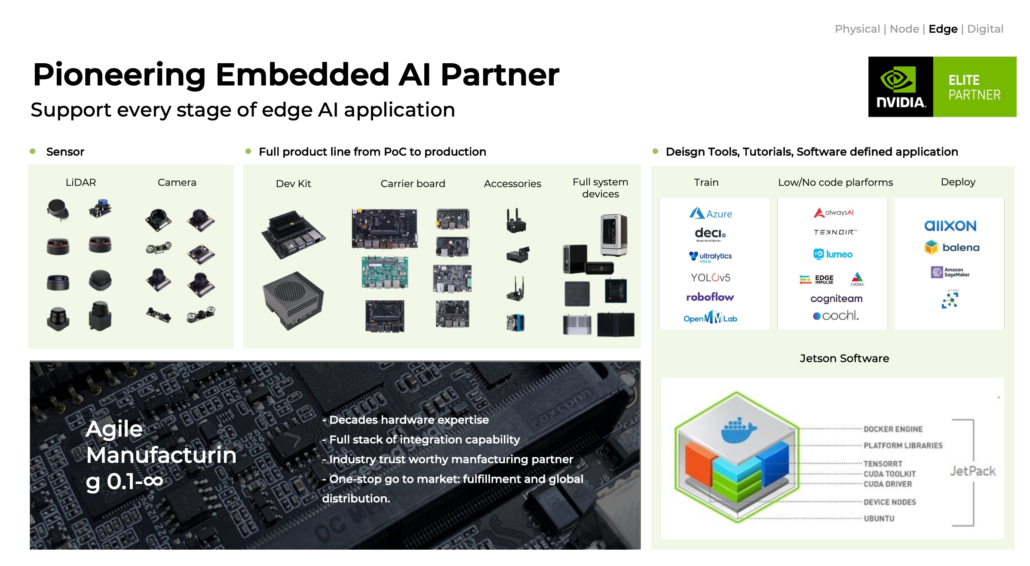

Seeed and NVIDIA Jetson Ecosystem

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available.