EdgeLab: PFLD Algorithm Achieves 99.56% Accuracy in Metering Systems

Remember we released EdgeLab a couple of days ago? Now we are excited to introduce an application built with EdgeLab: reading pointer-type meters with an accuracy of about 99.56%! To achieve this, we use an algorithm called PFLD, so let's learn more about it in this blog.

Since the second industrial revolution in the second half of the 19th century, industrial meters have spread into every corner of the industrial world. These meters have a long working life and adapt well to harsh environments, so they still support most industrial monitoring applications in the 21st century. However, reading data from pointer-type meters and setting up early warnings based on those readings remain major obstacles to modern digitalization.

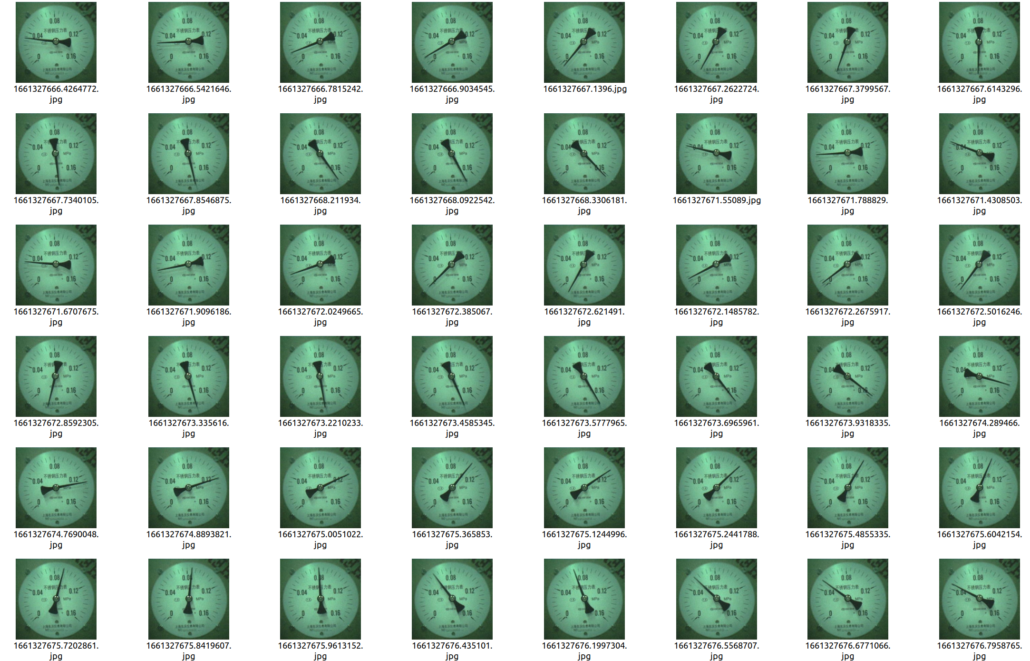

The picture above shows a meter in the real world, where meters are affected by water mist, deformation, blur, scratches, light spots, stains, and more. Under these conditions, traditional pattern recognition methods, or classification algorithms built on simple deep learning models, cannot meet real-world requirements.

Algorithm Introduction

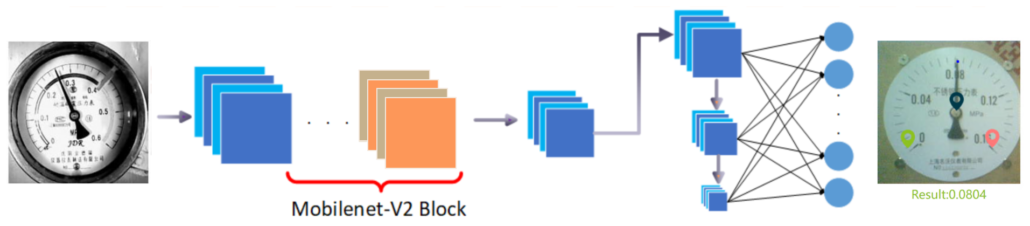

PFLD (Practical Facial Landmark Detector) is an algorithm that is accurate, efficient, and lightweight at the same time. Its lightweight models run in real time on mobile devices and maintain good detection accuracy under difficult conditions such as unconstrained pose, deformation, lighting, and occlusion.

EdgeLab optimizes this algorithm by removing the auxiliary modules for face orientation and expression and keeping only the feature information marked by key points, such as the three points representing the head, middle, and tail, from which the meter's correct reading is obtained. The result is an end-to-end keypoint detection algorithm. Figure (1) below shows the architecture of the modified algorithm. Because the reading relies on multiple key points, the correct reading can still be obtained as long as information about one or two of these points is available on the face of the instrument. This meets real-world requirements, even in the various complex and changeable situations depicted in Figure (2).
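The last step, turning detected key points into a reading, can be sketched as follows. This is a minimal illustration under stated assumptions, not EdgeLab's actual post-processing: it assumes four hypothetical key points in pixel coordinates (the dial center, the start and end of the scale, and the pointer tip), a linear scale, and a clockwise sweep from start to end. The function name `reading_from_keypoints` is likewise hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixels; y grows downward as in images


def _angle(center: Point, p: Point) -> float:
    """Angle of p around center. In image coordinates (y down),
    increasing values correspond to clockwise rotation on screen."""
    return math.atan2(p[1] - center[1], p[0] - center[0])


def reading_from_keypoints(center: Point, scale_start: Point, scale_end: Point,
                           pointer_tip: Point, min_value: float,
                           max_value: float) -> float:
    """Hypothetical post-processing: map the pointer angle onto a linear,
    clockwise dial scale that runs from scale_start to scale_end."""
    two_pi = 2 * math.pi
    a_start = _angle(center, scale_start)
    # Clockwise sweep (radians) from the scale start to the scale end.
    full_sweep = (_angle(center, scale_end) - a_start) % two_pi
    # Clockwise sweep (radians) from the scale start to the pointer.
    pointer_sweep = (_angle(center, pointer_tip) - a_start) % two_pi
    if full_sweep == 0:
        raise ValueError("scale_start and scale_end have the same angle")
    if pointer_sweep > full_sweep:
        # Pointer lies in the dead zone between scale end and scale start:
        # snap to whichever end of the scale is angularly closer.
        past_end = pointer_sweep - full_sweep
        before_start = two_pi - pointer_sweep
        fraction = 1.0 if past_end < before_start else 0.0
    else:
        fraction = pointer_sweep / full_sweep
    # Linear interpolation between the scale's minimum and maximum values.
    return min_value + fraction * (max_value - min_value)
```

For example, on a hypothetical 0 to 1.6 MPa gauge with a 270° scale, `reading_from_keypoints((56, 56), (26, 86), (86, 86), (56, 16), 0.0, 1.6)` returns approximately 0.8, since a pointer pointing straight up sits halfway along the scale. Working with angles around the center rather than raw coordinates keeps the result independent of the dial's size in the image; a non-linear scale would need a lookup table in place of the final interpolation.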

If you want to learn more about the optimized algorithm, you can click here to explore its source code.

You can also click here to explore a dataset we prepared, which includes meter readings taken in different locations and under different lighting conditions.

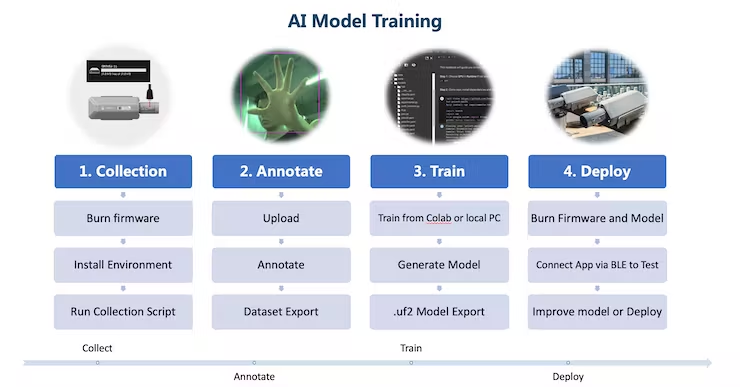

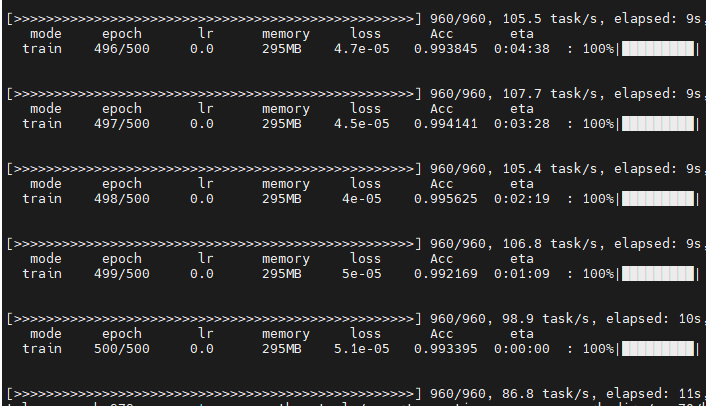

After data collection come labeling, training, and deployment. In the training evaluation, the accuracy reached about 99.56%. To learn more about the training process, you can click here to explore the EdgeLab GitHub repository.
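As an illustration of how keypoint accuracy can be measured, here is a sketch of PCK (Percentage of Correct Keypoints), a common metric for keypoint detectors: a predicted point counts as correct when it lies within a threshold distance of its ground-truth point. It is an assumption that EdgeLab's evaluation works this way; the normalized [0, 1] coordinates and the 0.05 threshold are hypothetical choices.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y) in [0, 1] (assumption)


def pck(preds: Sequence[Sequence[Point]], targets: Sequence[Sequence[Point]],
        threshold: float = 0.05) -> float:
    """Fraction of predicted keypoints within `threshold` (Euclidean
    distance) of the ground truth. The default threshold is hypothetical."""
    if len(preds) != len(targets):
        raise ValueError("preds and targets must cover the same images")
    correct = total = 0
    for pred_kpts, gt_kpts in zip(preds, targets):
        if len(pred_kpts) != len(gt_kpts):
            raise ValueError("keypoint count mismatch")
        for p, g in zip(pred_kpts, gt_kpts):
            total += 1
            if math.dist(p, g) < threshold:
                correct += 1
    if total == 0:
        raise ValueError("no keypoints to evaluate")
    return correct / total
```

Multiplying the result by 100 gives a percentage, but the threshold and the normalization strongly affect the number, so only results computed the same way are comparable.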

Deploy

In the real world, it is sometimes not easy to reach the instruments to take readings, as in the scene shown in the following figure:

Therefore, a smart vision device with low power consumption and long-distance wireless communication is needed. The Seeed SenseCAP A1101 – LoRaWAN Vision AI Sensor meets these deployment requirements.

With an operating temperature range of -40 to 85℃ and an IP66 rating, it is suitable for deployment outdoors and in harsh environments.

Loading a trained model onto this device is also very easy: simply connect the device to your computer, press the onboard button, and load the model's UF2 file onto the device.
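If you prefer to script this step, the sketch below checks that a file looks like a valid UF2 image (512-byte blocks carrying the start and end magic numbers from Microsoft's UF2 format specification) and copies it to a target directory. It assumes the device shows up as a USB mass-storage drive after the button press, as UF2 bootloaders typically do; the function name `flash_uf2` and the drive path are hypothetical, so follow the A1101 documentation for the actual procedure.

```python
import shutil
import struct
from pathlib import Path

UF2_BLOCK_SIZE = 512
UF2_MAGIC_START0 = 0x0A324655  # "UF2\n" read as a little-endian uint32
UF2_MAGIC_START1 = 0x9E5D5157
UF2_MAGIC_END = 0x0AB16F30     # stored in the last 4 bytes of each block


def is_valid_uf2(path: Path) -> bool:
    """Check that every 512-byte block carries the UF2 start and end magics."""
    data = path.read_bytes()
    if not data or len(data) % UF2_BLOCK_SIZE != 0:
        return False
    for offset in range(0, len(data), UF2_BLOCK_SIZE):
        start0, start1 = struct.unpack_from("<II", data, offset)
        (end,) = struct.unpack_from("<I", data, offset + UF2_BLOCK_SIZE - 4)
        if (start0, start1, end) != (UF2_MAGIC_START0, UF2_MAGIC_START1,
                                     UF2_MAGIC_END):
            return False
    return True


def flash_uf2(uf2_file: str, drive_dir: str) -> Path:
    """Hypothetical helper: copy a validated UF2 model file onto the
    device's mounted drive and return the destination path."""
    src = Path(uf2_file)
    dest_dir = Path(drive_dir)
    if not is_valid_uf2(src):
        raise ValueError(f"{src} is not a valid UF2 file")
    if not dest_dir.is_dir():
        raise FileNotFoundError(f"device drive not found at {dest_dir}")
    return Path(shutil.copy(src, dest_dir / src.name))
```

A call such as `flash_uf2("meter_model.uf2", "/path/to/mounted/drive")` would then copy the model; both paths are placeholders. Validating the file first avoids copying a corrupted or wrong file onto the device.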

A model trained with the algorithm above can be deployed to this hardware by following the guide here.

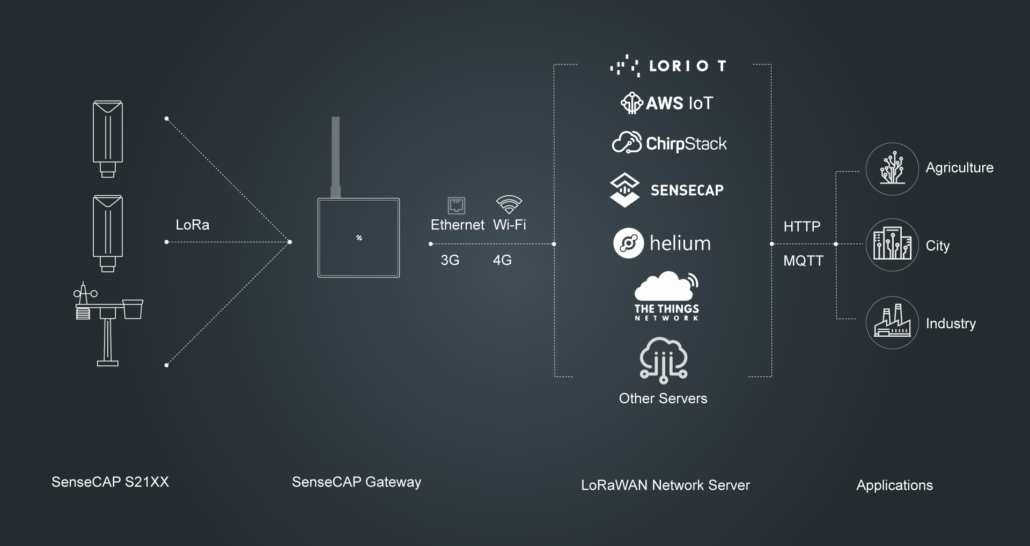

The figure below shows the overall architecture, from device deployment to receiving data in the cloud.

A video of the pointer-type meter reading detection can be seen below:

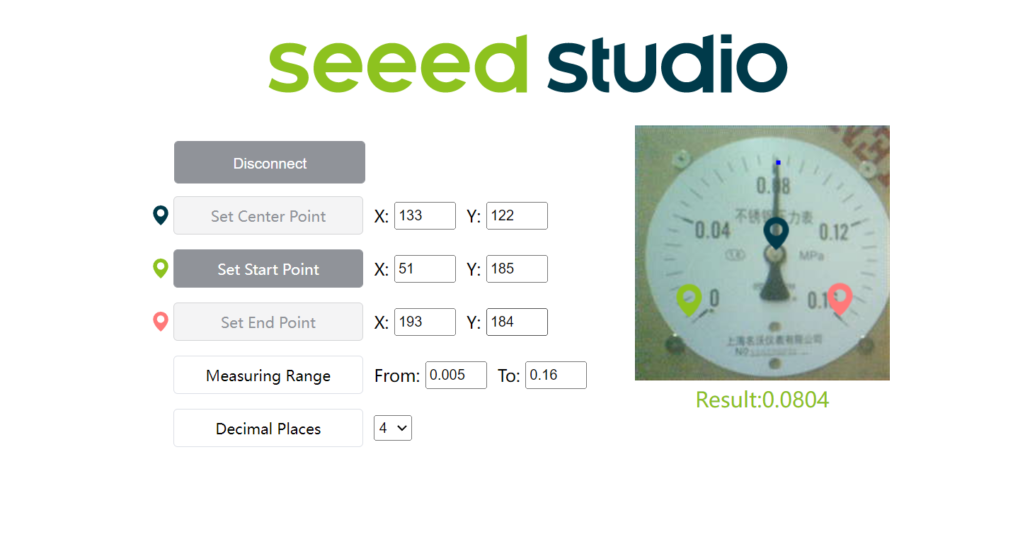

We have also developed the web GUI below to display the results:

If a deployment site has special structural requirements, the mounting structure can also be customized to fit them, such as the structure SenseCAP custom-designed for common water meters, shown in the figure below:

If you are an IoT (Internet of Things) SI (System Integrator) with large-volume needs for visual recognition applications and product customization, please contact iot@seeed.cc.

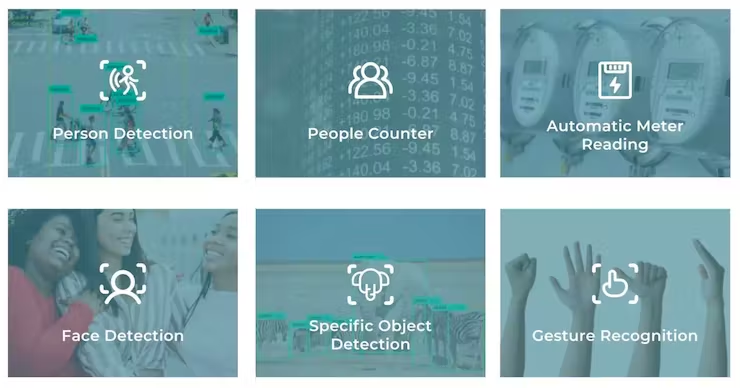

If you are a developer, the A1101 has many more scenarios and uses to explore beyond metering applications. You are welcome to share your projects!

Explore more AI-related resources by Seeed!

- Few-Shot Object Detection with YOLOv5 and Roboflow wiki

- Train and Deploy Your Own AI Model Into SenseCAP A1101 wiki

- Intelligent Traffic Management System using DeepStream SDK

- Quick Start with SenseCAP K1100 – The Sensor Prototype Kit wiki