Orin Nano: a new member joins the NVIDIA Jetson series, taking entry-level edge AI to the next level

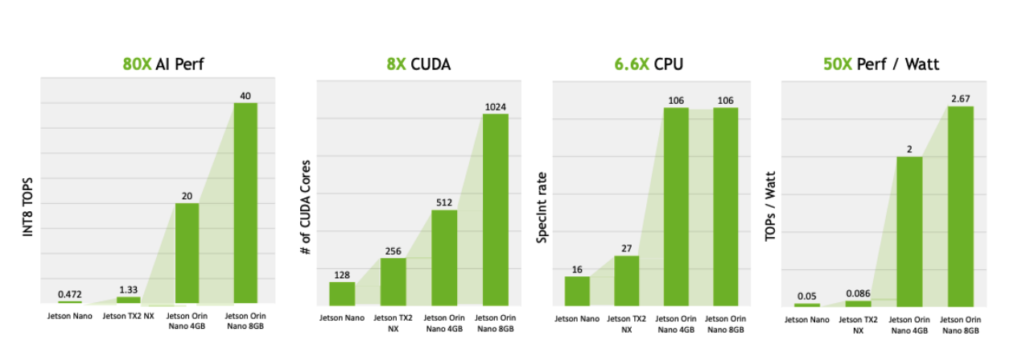

NVIDIA launched the new Jetson Orin Nano™ system-on-modules at GTC this week. Orin Nano delivers 40x to 80x the performance of the prior generation, setting a new standard for entry-level edge AI and robotics, with power options from as little as 5W up to 15W. To accelerate the edge AI application pipeline, Jetson Orin Nano includes a 6-core Arm Cortex-A78AE CPU, a video decode engine, an ISP, a video image compositor, an audio processing engine, and a video input block.

In this post, let's take a look at:

- Full specs of the Orin Nano module, and a comparison with the prior generation

- Seeed's newly released Orin-based reComputer

- Emulating Orin module performance on AGX Orin

- Leveraging our partners' platforms to help you develop on the Orin series for robotics, OTA, and model optimization

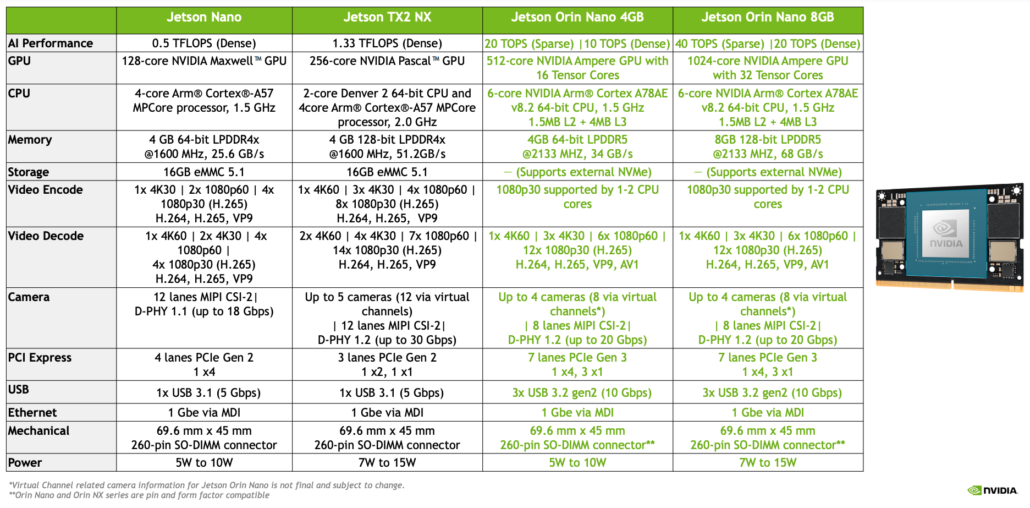

Orin Nano module

The new SoM features a 6-core Arm Cortex-A78AE CPU, an NVIDIA Ampere architecture GPU with up to 1024 cores and 32 Tensor Cores, and the same 260-pin SO-DIMM connector as the Jetson Orin NX module. Unlike the Jetson Nano module, which comes only in a 4GB version, the Orin Nano series is available in 4GB and 8GB versions. Jetson Orin Nano production modules will be available in January, starting at $199.

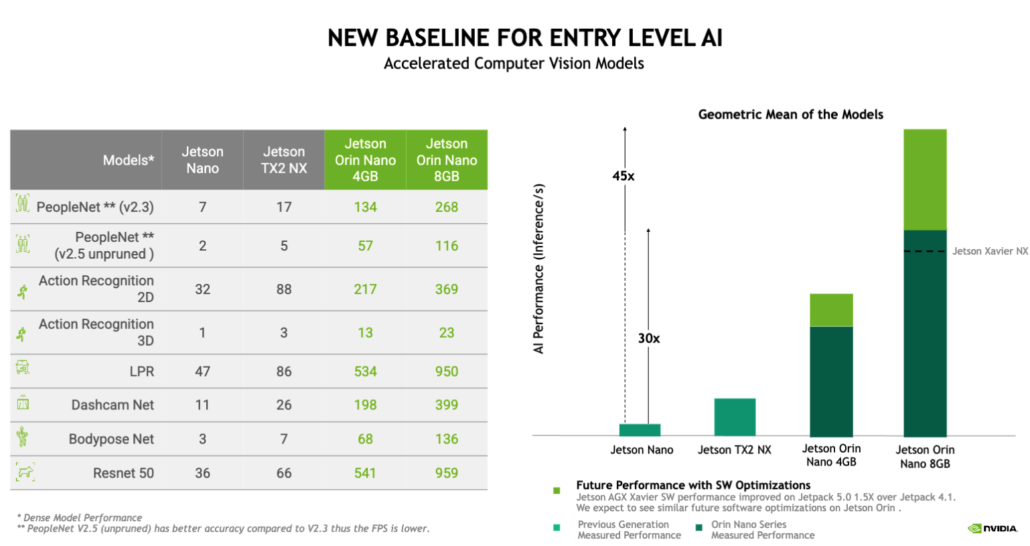

Orin Nano vs previous generation Jetson Nano and TX2 NX

The third-generation Tensor Cores in the NVIDIA Ampere Architecture deliver 50x higher performance per watt than the previous generation. They also support fine-grained structured sparsity, which lets developers exploit sparsity in deep learning networks to double the throughput of Tensor Core operations.
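NVIDIA's fine-grained structured sparsity follows a 2:4 pattern: in every group of four consecutive weights, at most two are nonzero. The minimal pure-Python sketch below illustrates only the pruning step, keeping the two largest-magnitude weights in each group of four. The function names are our own, and this is not NVIDIA's pruning tooling, which operates on real network tensors and is normally followed by fine-tuning to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Apply a 2:4 structured-sparsity mask: in each group of 4 consecutive
    weights, keep the 2 with the largest magnitude and zero the other 2."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def is_2_of_4_sparse(weights: List[float]) -> bool:
    """Check that every group of 4 contains at most 2 nonzero values."""
    return all(
        sum(1 for w in weights[s:s + 4] if w != 0.0) <= 2
        for s in range(0, len(weights), 4)
    )


if __name__ == "__main__":
    w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
    p = prune_2_of_4(w)
    print(p)  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
    print(is_2_of_4_sparse(p))  # True
```

Because the zeros follow a fixed 2-of-4 pattern, the sparse Tensor Cores can skip them and double math throughput; unstructured sparsity with the same number of zeros does not get this hardware speedup.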

- Precision: Orin Nano supports both FP16 and INT8, while Jetson Nano supports only FP16.

- Better inference: NVIDIA tested dense INT8 and FP16 pre-trained models from NGC, as well as a standard ResNet-50 model, on the new module, and the results far exceed those of earlier-generation entry-level modules.

- CPU: from the 4-core Cortex-A57 on Jetson Nano to the 6-core Cortex-A78AE on Orin Nano.

- GPU: from a 128-core Maxwell GPU to an NVIDIA Ampere Architecture GPU with up to 8 streaming multiprocessors (SMs) composed of 1024 CUDA cores and up to 32 Tensor Cores for AI processing.

- Video encoding: Orin Nano encodes 1080p at 30 frames per second using 1 to 2 CPU cores (software encoding, as the module has no hardware video encoder), supporting real-time edge video analytics.

- Memory bandwidth: TX2 NX provides 51.2GB/s and Jetson Nano 25.6GB/s, while Orin Nano provides 34GB/s on the 4GB module and up to 68GB/s on the 8GB module.

- Storage: Orin Nano has no onboard eMMC and instead supports external NVMe storage directly.

- Compact size: in a 70x45mm, 260-pin SO-DIMM footprint, Jetson Orin Nano modules include various high-speed interfaces:

  - Up to seven lanes of PCIe Gen3

  - Three high-speed 10Gbps USB 3.2 Gen2 ports

  - Eight lanes of MIPI CSI-2 camera ports

  - Various sensor I/O

What carrier boards are you expecting? Let us know!
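The memory bandwidth figures in the comparison follow from a simple formula: peak bandwidth = bus width in bytes × transfer rate. The sketch below reproduces them. The bus widths and data rates are assumptions from our reading of NVIDIA's module datasheets and do not appear in this post: 64-bit LPDDR4 at 3200 MT/s (Jetson Nano), 128-bit LPDDR4 at 3200 MT/s (TX2 NX), and 64-bit or 128-bit LPDDR5 at 4266 MT/s (Orin Nano 4GB and 8GB).

```python
from dataclasses import dataclass


@dataclass
class MemoryConfig:
    module: str
    bus_width_bits: int     # assumed memory bus width, in bits
    transfer_rate_mts: int  # assumed data rate, in mega-transfers per second

    def peak_bandwidth_gbs(self) -> float:
        # bytes per transfer x transfers per second, in GB/s (10^9 bytes)
        return (self.bus_width_bits / 8) * self.transfer_rate_mts * 1e6 / 1e9


MODULES = [
    MemoryConfig("Jetson Nano", 64, 3200),
    MemoryConfig("Jetson TX2 NX", 128, 3200),
    MemoryConfig("Jetson Orin Nano 4GB", 64, 4266),
    MemoryConfig("Jetson Orin Nano 8GB", 128, 4266),
]

if __name__ == "__main__":
    for m in MODULES:
        print(f"{m.module}: {m.peak_bandwidth_gbs():.1f} GB/s")
```

This prints 25.6, 51.2, 34.1, and 68.3 GB/s, in line with the quoted 25.6, 51.2, 34, and 68GB/s after rounding. These are theoretical peaks; sustained bandwidth in real workloads is lower.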

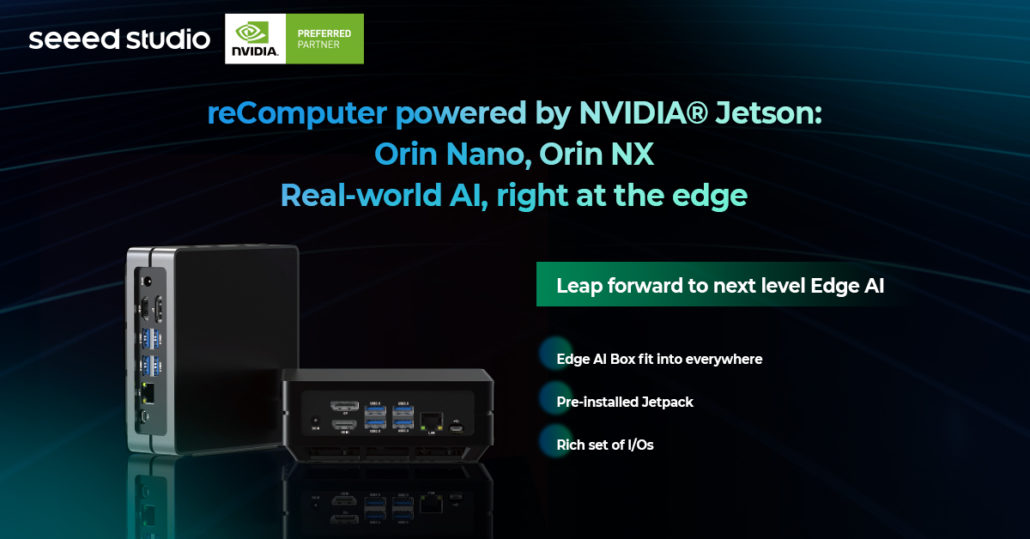

Newly released reComputer with Orin Nano and Orin NX

reComputer J3010 and J3011 are compact edge computers built with NVIDIA Jetson Orin Nano production modules, featuring an NVIDIA Ampere Architecture GPU with up to 8 streaming multiprocessors (SMs) composed of 1024 CUDA cores and up to 32 Tensor Cores for AI processing.

The Orin Nano-based reComputer is built on the J401 carrier board, which brings a rich set of I/Os to extend functionality: 2x CSI, 1x M.2 Key M, 1x M.2 Key E, 4x USB 3.2, 1x USB 2.0, HDMI, CAN, RTC, and a 40-pin GPIO header. It also comes with a heatsink and an aluminum case. The full system ships with JetPack 5 pre-installed, so it is ready for development with leading AI frameworks and suitable for deployment anywhere.

As an NVIDIA Preferred Partner, we are also working on new carrier boards and full systems around Orin NX and Orin Nano, and we will add both modules to our reComputer for Jetson series. Each full system will be assembled with the J401 carrier board, a production module, an enclosure, and a heatsink, with JetPack 5 pre-installed. Read more about JetPack 5 in this article.

- reComputer J3010 – Orin Nano 4GB module

- reComputer J3011 – Orin Nano 8GB module

- reComputer J4011 – Orin NX 8GB module

- reComputer J4012 – Orin NX 16GB module

Let us know on our Discord what other hardware you would like to see, such as carrier boards in other form factors and add-ons!

reComputer J401 carrier board for Jetson Orin Nano/Orin NX

Carrier Board Specification

| Item | Specification |
| --- | --- |
| Form factor | 100mm x 80mm |
| Display | 1x HDMI |
| CSI Camera | 2x CSI |
| Ethernet | 1x Gigabit Ethernet (10/100/1000M) |
| M.2 Key M | 1x M.2 Key M, NVMe |
| M.2 Key E | 1x M.2 Key E |
| USB | 4x USB 3.2 Type-A; 1x USB 2.0 Type-C (Recovery) |
| Multifunctional Port | 1x 40-pin header (2.0mm pitch) |
| Fan | 1x fan (5V PWM) |
| RTC | 1x RTC socket; 1x RTC 2-pin |
| SO-DIMM | 260-pin |
| Power | DC 9-19V |
| CAN | 1x CAN |
| Button Header | 1x 12-pin, 2.54mm pitch |

Seeed provides one-stop hardware services from prototype to solution, offering manufacturing from 0.1 to ∞, including carrier board customization and custom image integration. To learn more about the NVIDIA Jetson product line at Seeed, developer tools, and use cases, visit our Jetson ecosystem page!

During GTC 2022, NVIDIA disclosed additional details about the Jetson Orin platform, including the new Orin Nano, in the session "The NVIDIA Jetson Roadmap for Edge AI and Robotics", which is available on demand for all GTC registrants.

Seeed is an NVIDIA embedded system reseller and preferred partner. Besides providing full system devices, carrier boards, and peripheral hardware, Seeed also works with ecosystem software platforms to help developers build custom applications in a no-code/low-code way and deploy them on edge devices at scale for production. Customers can develop their edge AI, robotics, AIoT, and embedded solutions for the NVIDIA Jetson platform, and then deploy and scale their applications on the Jetson Orin modules as they become available.

Robot and ROS Development

During GTC, NVIDIA announced that Jetson Orin Nano runs the NVIDIA Isaac robotics stack and features the GPU-accelerated ROS 2 framework, and that NVIDIA Isaac Sim, a robotics simulation platform, is available in the cloud. For robotics developers using AWS RoboMaker, NVIDIA founder and CEO Jensen Huang also announced that containers for the NVIDIA Isaac robotics development platform are available in the AWS Marketplace.

NVIDIA Isaac ROS GEMs are hardware-accelerated packages that make it easier for ROS developers to build high-performance solutions on NVIDIA hardware. Learn more about NVIDIA Developer Tools.

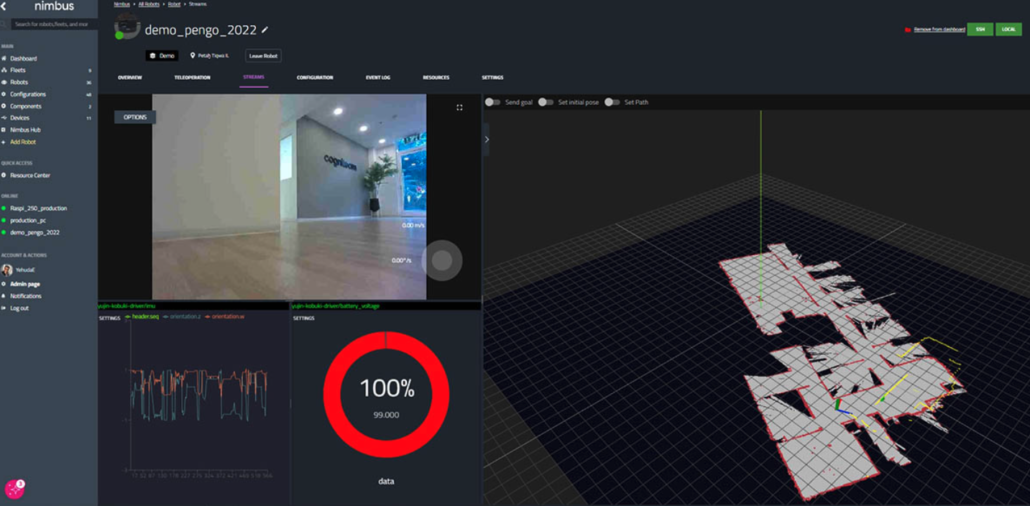

Cogniteam Nimbus, which fully supports the Orin series, is a cloud-based solution that allows developers to manage autonomous robots more effectively. The Nimbus platform also supports NVIDIA® Jetson™ and the Isaac SDK and GEMs out of the box. Check out our webinar, Connect your ROS Project to the Cloud with Nimbus using Jetson Xavier NX.

With a few clicks in the browser, developers can now drag and drop ready-made NVIDIA Isaac software development kit (SDK) GEMs into Nimbus as "dockerized" components, said Cogniteam. Users also get better support for ROS 2 components and can upgrade them remotely from anywhere in the world, the company added.

Remote Fleet Management

Allxon, an industry-leading solutions provider and Seeed Edge AI partner, brings powerful remote edge device management.

Allxon fully supports the Orin series, so you can enable secure OTA updates and remote device management. Unlock a 90-day free trial with code H4U-NMW-CPK.

Allxon's plugIN helps developers go beyond remote monitoring utilities and the new Jetson Power GUI. On top of monitoring the system performance and power consumption of NVIDIA Jetson Orin, Allxon can help developers set up real-time alerts to resolve system errors instantly, take preventive measures, and even manage clock frequencies to optimize CPU, GPU, thermal, and EMC (external memory controller) performance, especially when running multiple concurrent AI application pipelines.
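Allxon's plugIN is a commercial service, and its API is not shown here. As a rough, hypothetical illustration of the threshold-alert idea only, the sketch below reads temperatures from the standard Linux sysfs thermal interface (`/sys/class/thermal/thermal_zone*/`, which Linux for Tegra exposes on Jetson) and flags any zone at or above a limit. The function names and the 80 C limit are our own assumptions.

```python
import glob
import os
from typing import Dict, List


def read_thermal_zones(base: str = "/sys/class/thermal") -> Dict[str, float]:
    """Read every thermal zone under `base` and return {zone type: degrees C}.

    Linux reports each zone's `temp` file in millidegrees Celsius."""
    temps: Dict[str, float] = {}
    for zone in sorted(glob.glob(os.path.join(base, "thermal_zone*"))):
        try:
            with open(os.path.join(zone, "type")) as f:
                name = f.read().strip()
            with open(os.path.join(zone, "temp")) as f:
                temps[name] = int(f.read().strip()) / 1000.0
        except (OSError, ValueError):
            continue  # skip zones that are missing or unreadable
    return temps


def check_alerts(temps: Dict[str, float], limit_c: float) -> List[str]:
    """Return one alert message per zone at or above `limit_c`."""
    return [
        f"ALERT: {name} at {t:.1f} C (limit {limit_c:.1f} C)"
        for name, t in temps.items()
        if t >= limit_c
    ]


if __name__ == "__main__":
    # 80 C is an arbitrary example threshold, not an Allxon or NVIDIA default.
    for msg in check_alerts(read_thermal_zones(), limit_c=80.0):
        print(msg)
```

A managed service adds what this sketch lacks: fleet-wide collection, notification delivery, and remote actions in response to an alert.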

OTA Updates

Developers can easily deploy updates onto fleets of NVIDIA Jetson Orin modules using Allxon’s OTA update feature, to ensure all operations run on the latest and safest versions.

Remote Trigger Recovery

Allxon gives developers remote tools to put NVIDIA Jetson Orin-based products into recovery mode, so they can seamlessly recover and roll back operations without going on-site. Developers can also closely monitor, analyze, and track device operations by setting up automatic device log collection and isolating tampered datasets for forensic analysis.

Accurately emulate any Jetson Orin module on AGX Orin

You can work with the entire Jetson software stack while emulating a Jetson Orin module. Frameworks such as NVIDIA DeepStream, NVIDIA Isaac, and NVIDIA Riva work in emulation mode, and tools like TAO Toolkit perform as expected with pre-trained models from NGC. The software stack is unaware of the emulation, and performance accurately matches that of the target module being emulated.

If you are developing a robotics use case or developing a vision AI pipeline, you can do end-to-end development today for any Jetson Orin module using the Jetson AGX Orin Developer Kit and emulation mode.

The Orin Nano modules are form-factor and pin compatible with the previously announced Orin NX modules. If you have a Jetson AGX Orin Developer Kit, full emulation support lets you start developing for the Orin Nano series today. Please check the step-by-step tutorial here.

You can download orin-nano-overlay-351.tbz2, which includes the flash configuration files the Jetson AGX Orin Developer Kit needs to emulate a Jetson Orin Nano series module. See here for flashing instructions. Note that if you are working with the JetPack 5.0.1 Developer Preview, you should upgrade to JetPack 5.0.2.
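If you prefer to script the unpacking step, the sketch below extracts a .tbz2 (bzip2-compressed tar) archive such as orin-nano-overlay-351.tbz2 with Python's standard `tarfile` module and refuses any member that would land outside the destination directory. It covers extraction only: the function name `extract_overlay`, the destination directory, and the use of the "tar" extraction filter are our own assumptions, so follow NVIDIA's flashing instructions for where the overlay belongs and for the flash command itself.

```python
import os
import tarfile
from typing import List


def extract_overlay(archive: str, dest: str) -> List[str]:
    """Extract a .tbz2 (tar + bzip2) archive into `dest` and return member names.

    Raises ValueError if any member would be written outside `dest`.
    """
    dest_root = os.path.realpath(dest)
    with tarfile.open(archive, mode="r:bz2") as tar:
        members = tar.getmembers()
        # Validate every path before writing anything to disk.
        for member in members:
            target = os.path.realpath(os.path.join(dest_root, member.name))
            if os.path.commonpath([dest_root, target]) != dest_root:
                raise ValueError(f"unsafe path in archive: {member.name}")
        # Newer Python versions accept an extraction filter; "tar" keeps
        # tar-like behaviour while blocking absolute or escaping paths.
        kwargs = {"filter": "tar"} if hasattr(tarfile, "tar_filter") else {}
        tar.extractall(path=dest_root, members=members, **kwargs)
    return [m.name for m in members]
```

For example, `extract_overlay("orin-nano-overlay-351.tbz2", "/path/to/jetpack")` returns the names of the unpacked files, where `/path/to/jetpack` is a placeholder for the directory NVIDIA's instructions specify.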

Maximize Model Performance on Jetson Orin with the Deci Platform

Deci's hardware-aware model zoo lets you compare various SOTA computer vision models by accuracy and runtime performance (including throughput, latency, model size, and memory footprint) on various hardware types. Taking the MobileViTv2-1.5 model and applying compilation and 16-bit (FP16) quantization with NVIDIA TensorRT yields a throughput of 91.3 frames per second. Building a superior architecture with Deci and then applying the same compression with TensorRT yields 2.34x higher throughput, reaching 214 frames per second on the NVIDIA Jetson AGX Orin Developer Kit. The model generated with Deci's AutoNAC engine is not only faster but also more accurate: 80.44 versus 80.3 for MobileViT.
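The 2.34x figure follows directly from the two throughputs quoted in the paragraph (214 / 91.3). The short sketch below reproduces the ratio and converts each throughput into average milliseconds per frame. Treating 1000 / fps as per-frame latency assumes frames are processed one at a time (batch size 1, no pipelining), which the benchmark description does not state.

```python
def speedup(baseline_fps: float, optimized_fps: float) -> float:
    """Throughput ratio of the optimized model vs. the baseline."""
    return optimized_fps / baseline_fps


def ms_per_frame(fps: float) -> float:
    """Average time per frame in milliseconds at a given throughput."""
    return 1000.0 / fps


baseline_fps = 91.3  # MobileViTv2-1.5 + TensorRT FP16, as quoted
deci_fps = 214.0     # AutoNAC model + TensorRT FP16, as quoted

print(f"speedup: {speedup(baseline_fps, deci_fps):.2f}x")      # speedup: 2.34x
print(f"baseline: {ms_per_frame(baseline_fps):.2f} ms/frame")  # baseline: 10.95 ms/frame
print(f"AutoNAC: {ms_per_frame(deci_fps):.2f} ms/frame")       # AutoNAC: 4.67 ms/frame
```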

Deci's AutoNAC helps you build a model specific to your needs. With AutoNAC, AI teams typically gain up to a 5x increase in inference performance compared to state-of-the-art models (the range is 2.5x to 10x, depending on various factors). Deci's platform can leverage NVIDIA TensorRT to automatically compile and quantize your model; you select the target hardware, such as Jetson Orin, and the quantization level. Deci offers 16-bit floating-point (FP16) post-training quantization and 8-bit integer (INT8) quantization (a premium feature) to reduce model size without compromising accuracy. The optimization process takes just a few minutes, after which you receive an optimized MobileViT variant.
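To illustrate what 8-bit integer quantization does to a tensor, the sketch below implements generic symmetric per-tensor INT8 quantization in pure Python: values are divided by a scale (max |x| / 127), rounded, and clamped to [-127, 127]. This is a textbook scheme for illustration, not Deci's or TensorRT's implementation; for post-training quantization, TensorRT typically chooses scales through calibration on representative data.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate real values."""
    return [qi * scale for qi in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print(q)  # [50, -127, 1, 100]
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each INT8 value needs a quarter of the storage of FP32 and half of FP16, which is where the size reduction comes from; for values inside the clamping range, the round-trip error is at most half the scale.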

Seeed Jetson Ecosystem

Seeed is an embedded system reseller and preferred partner in the NVIDIA Partner Network. Besides providing full system devices, carrier boards, peripherals, and hardware, Seeed is also working with ecosystem software platforms to help developers build custom applications with no/low code and deploy edge devices at scale for production. Customers can use the NVIDIA Jetson Orin developer kit today to build their edge AI, robotics, AIoT, and embedded solutions, and then deploy and scale their applications on the Jetson Orin modules as they become available.

We look forward to working with AI experts, software enterprises, and system integrators on:

- Integrating your unique technology, or reselling or co-branding licensed solutions with us.

- Building next-gen AI products powered by NVIDIA Jetson modules, and bringing them to market in one stop with Seeed's manufacturing, fulfillment, and distribution.

- Working together with Seeed's amazing ecosystem partners to unlock more AI possibilities.

For more NVIDIA Jetson product line information, developer tools, and use cases at Seeed, visit Seeed's NVIDIA Jetson ecosystem page or contact us at edgeai@seeed.cc.