No-code AI: Deploy an Edge AI Application Without Code

Machine learning is widely adopted in software applications such as social media, advertising, and e-commerce recommendation systems. In physical, real-world settings, however, AI is far from ubiquitous, because applying an algorithm to a real situation raises many additional considerations. Several challenges stand in the gap between algorithm and deployment:

- There is often not enough data, and labeling it takes time.

- Training takes time and requires complex optimization.

- When you apply a model in a real situation, what if there is no reliable network connectivity, or the training dataset does not cover the conditions on site? Besides performance, edge AI devices are expected to be flexible, run multiple models, and be quick to set up.

To bring AI to the real world faster and more reliably, we need convenient AI tools and robust, compact hardware.

What is no-code AI?

No-code AI helps democratize artificial intelligence. The term usually refers to a no-code development platform that deploys AI and machine learning models through a visual, drag-and-drop, click-to-deploy interface. For developers and AI experts, no-code AI tools make building ML solutions faster and less laborious. No-code tools also give data scientists, developers, and solution providers a way to collaborate, making AI more widely accessible and cheaper to deploy.

Creating an AI application end to end is not easy. It involves three stages:

1. Data preparation
2. Training
3. Deployment

Across data annotation, model training, and model deployment, many no-code AI tools let non-technical users quickly and easily build accurate models for deployment. In this blog, we introduce several no-code AI tools that help users build AI applications faster:

- alwaysAI

- alwaysAI is a computer vision development platform for creating and deploying machine learning applications on edge devices. It gives you access to more than 100 ready-to-use pre-trained models, and inference results can easily be visualized in any web browser!

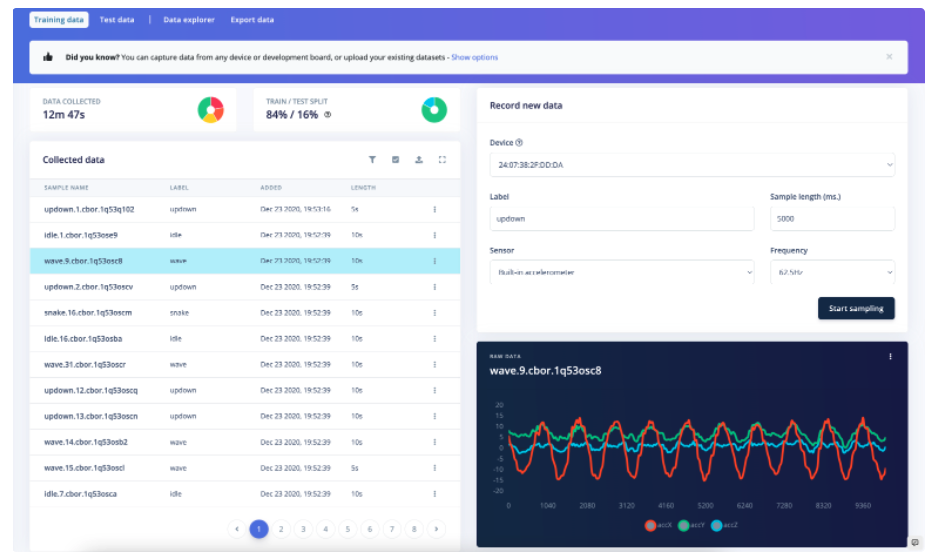

- Edge Impulse

- Edge Impulse helps you quickly build a wide range of advanced edge ML solutions. You can import sensor, audio, and camera data, and deploy seamlessly to embedded systems, GPUs, and custom accelerators. In Edge Impulse Studio, you can collect data, design algorithms with ML blocks, and validate models on real-time data without writing code.

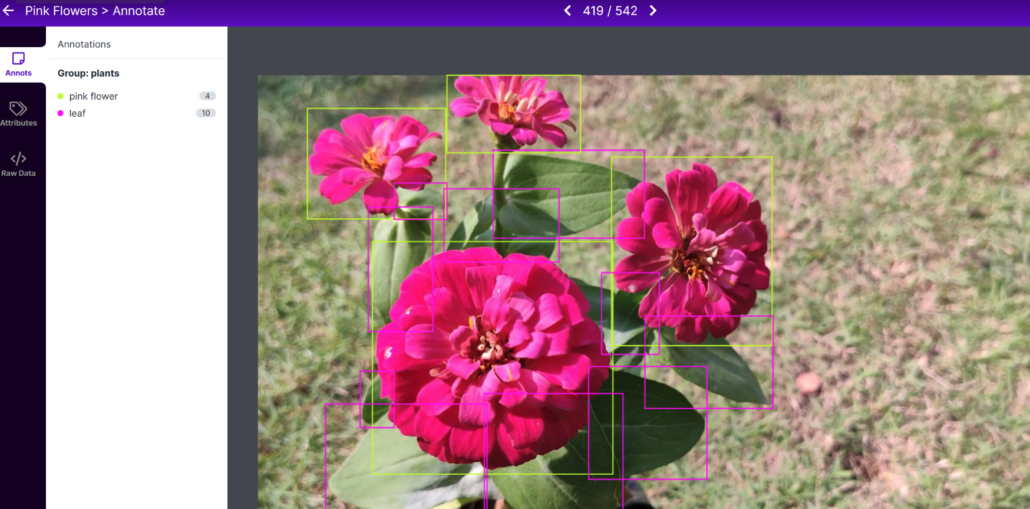

- Roboflow

- Roboflow empowers developers to build their own computer vision applications, no matter their skillset or experience. You can host a trained model with a single click or build your own custom models. Roboflow Annotate detects objects in your images and places bounding boxes around them. If an annotation is misaligned, it’s easy to adjust its size and position.

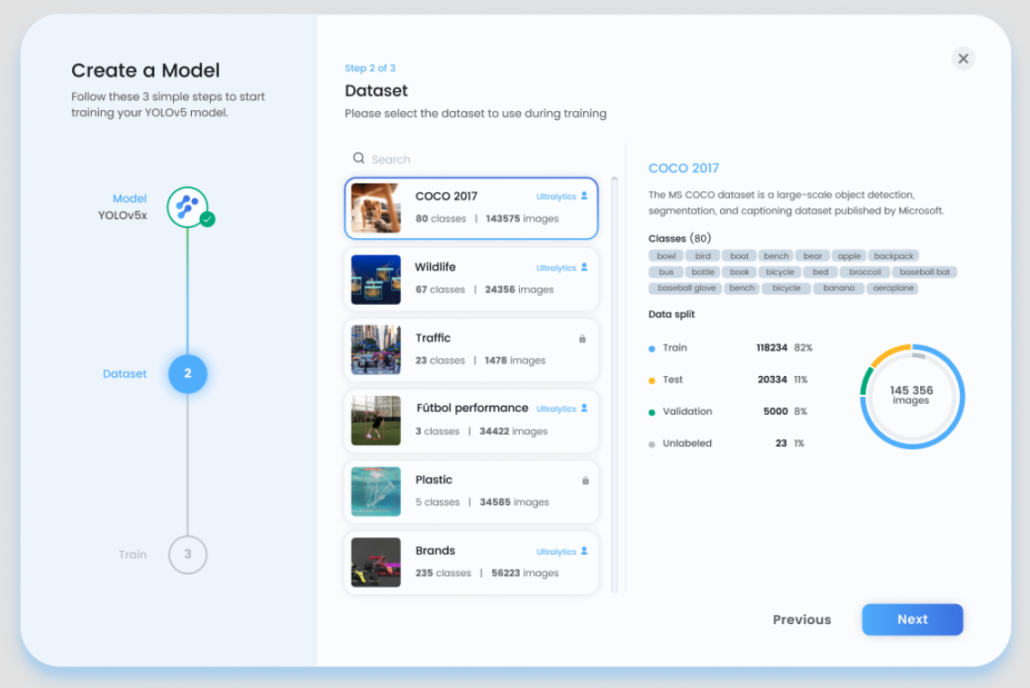

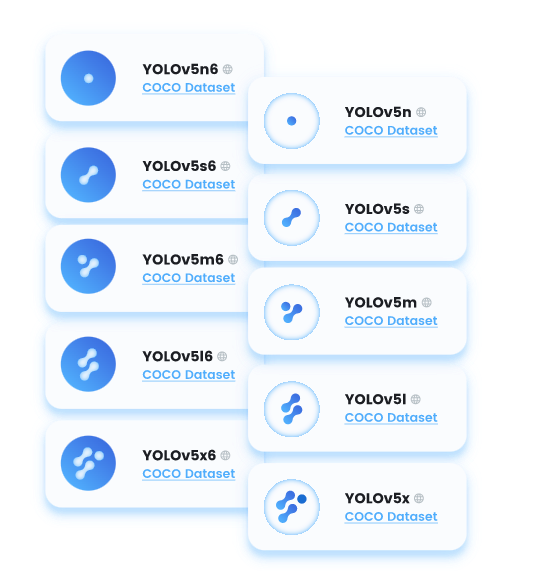

- Ultralytics HUB based on YOLOv5

- YOLOv5 is a family of object detection architectures and models pretrained on the COCO dataset. It represents Ultralytics’ open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development. Ultralytics HUB helps turn machine learning models into shareable web and mobile apps in seconds, with no code.

- Seeed no-code Edge AI open-source project

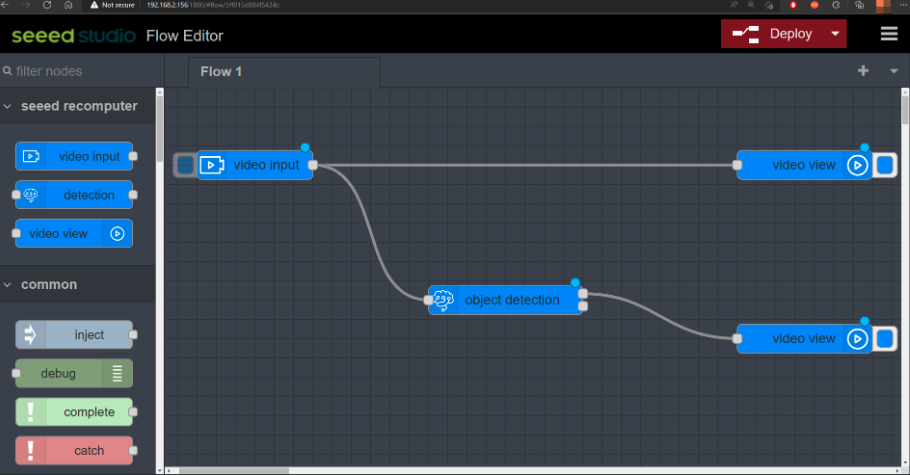

- We are currently developing an open-source, no-code Edge AI Vision Tool based on Node-RED. The tool aims to make it easier to deploy AI applications at the edge to solve real problems. For example, you can add people detection to an IP camera in just three steps. We also invite the community to join our development: submit pull requests to our repository or fill in this form to tell us about the AI solution you are working on.

1. No-code AI for data acquisition, annotation, and augmentation.

Data acquisition

With Edge Impulse, you can collect live data from supported hardware or upload an existing dataset directly to your project through Edge Impulse Studio. The data should be in the Data Acquisition Format (CBOR, JSON, or CSV), or as WAV, JPG, or PNG files.
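For readers curious about what the Data Acquisition Format looks like, here is a minimal Python sketch that wraps raw accelerometer readings in a JSON document with the format's `protected`, `signature`, and `payload` sections. The device name, device type, and readings are hypothetical, and the sketch assumes an unsigned file (`"alg": "none"` with a zero-filled placeholder signature); check the Edge Impulse specification before uploading real data, especially if you need HMAC signing.

```python
import json
import time

def build_sample(device_name, device_type, interval_ms, sensors, values):
    """Wrap raw readings in a JSON document shaped like the Data Acquisition Format.

    Assumption: an unsigned file, so no real signature is computed.
    """
    # Every row of `values` must hold one reading per declared sensor axis.
    for row in values:
        if len(row) != len(sensors):
            raise ValueError("each row needs one value per sensor")
    return {
        "protected": {
            "ver": "v1",
            "alg": "none",              # assumption: unsigned data
            "iat": int(time.time()),    # issued-at time, in seconds
        },
        "signature": "0" * 64,          # placeholder only
        "payload": {
            "device_name": device_name,
            "device_type": device_type,
            "interval_ms": interval_ms, # time between consecutive rows
            "sensors": [{"name": n, "units": u} for n, u in sensors],
            "values": values,
        },
    }

# Hypothetical device and two rows of 3-axis accelerometer data
sample = build_sample(
    device_name="hypothetical-device-01",
    device_type="HYPOTHETICAL_BOARD",
    interval_ms=10,
    sensors=[("accX", "m/s2"), ("accY", "m/s2"), ("accZ", "m/s2")],
    values=[[-9.81, 0.03, 1.21], [-9.83, 0.04, 1.27]],
)
document = json.dumps(sample, indent=2)
print(document)
```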

AI-Assisted data labeling

Edge Impulse Studio offers AI-assisted labeling using YOLOv5. By using YOLOv5's pre-trained object detection models (trained on the COCO dataset), objects in your dataset can be identified and labeled in seconds, without writing any code!
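Conceptually, AI-assisted labeling means running a pre-trained detector over your images and keeping its confident predictions as draft labels for a human to review. The sketch below illustrates only that filtering step; it is not Edge Impulse's implementation. The `Detection` type, the label mapping, and the 0.5 confidence threshold are hypothetical, and the detections stand in for what a COCO-trained YOLOv5 model might return.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str          # COCO class name predicted by the detector
    confidence: float   # detector score in [0, 1]
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels

def draft_labels(detections, label_map, min_confidence=0.5):
    """Turn raw detector output into draft labels for human review.

    Keeps detections whose COCO class appears in `label_map` and whose
    confidence is at least `min_confidence`, renaming them to project labels.
    """
    drafts = []
    for det in detections:
        if det.label in label_map and det.confidence >= min_confidence:
            drafts.append((label_map[det.label], det.box))
    return drafts

# Hypothetical detector output for one image
detections = [
    Detection("person", 0.91, (34, 50, 120, 300)),
    Detection("person", 0.32, (400, 60, 460, 210)),  # too uncertain, dropped
    Detection("dog", 0.88, (200, 220, 310, 330)),    # not a project class, dropped
]
print(draft_labels(detections, {"person": "worker"}))
# → [('worker', (34, 50, 120, 300))]
```

A human reviewer would then correct or confirm the drafts; lowering `min_confidence` trades more suggestions for more corrections.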

Data annotation

Annotate images super fast, right within your browser with Roboflow.

In our tutorial on training a model with a small dataset, we used Roboflow to annotate images. You can import images or videos directly; an imported video is converted into a series of images. The tool is convenient because it helps split the dataset into training, validation, and test sets. Roboflow also lets us apply further processing to the images after labeling them, and it can export the labeled dataset into many formats, such as the YOLOv5 PyTorch format, which is exactly what we needed!
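The two Roboflow conveniences mentioned above, splitting a dataset and exporting YOLOv5 labels, are simple to illustrate. In the YOLOv5 PyTorch TXT format, each object is one line of `class x_center y_center width height`, with coordinates normalized by the image width and height. The following Python sketch converts a pixel box into such a line and shuffles file names into train/validation/test sets; the 70/20/10 ratio and the file names are chosen for illustration only.

```python
import random

def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLOv5 label line."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w   # normalized box center
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w         # normalized box size
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"

def split_dataset(items, train=0.7, valid=0.2, seed=0):
    """Shuffle items and split them; whatever is left after train/valid is test."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    n_train = round(len(items) * train)
    n_valid = round(len(items) * valid)
    return (items[:n_train],
            items[n_train:n_train + n_valid],
            items[n_train + n_valid:])

print(to_yolo_line(0, (100, 50, 300, 250), 640, 480))
# → 0 0.312500 0.312500 0.312500 0.416667
train_set, valid_set, test_set = split_dataset([f"img_{i}.jpg" for i in range(10)])
print(len(train_set), len(valid_set), len(test_set))
# → 7 2 1
```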

Image Augmentations

Deep learning models with many parameters require a lot of labeled data. If we don’t have much data, how can we get more for training the neural network? Instead of looking for new images to add to the dataset, we can use image augmentation: applying random variations such as flips, shifts (translations), and rotations to the training images generates similar but different samples and expands the training set.
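To make the idea concrete, here is a minimal NumPy sketch of random flips, shifts, and rotations on a single image array. It assumes rotations in multiples of 90 degrees (via `np.rot90`) and zero-filled borders for shifts; real augmentation libraries also support arbitrary angles and other fill modes. For object detection, the bounding boxes would have to be transformed along with the pixels, which this sketch omits.

```python
import numpy as np

def shift(image, dx, dy):
    """Translate an image by (dx, dy) pixels, filling uncovered pixels with zeros."""
    h, w = image.shape[:2]
    if abs(dx) >= w or abs(dy) >= h:
        raise ValueError("shift must be smaller than the image size")
    out = np.zeros_like(image)
    # Source/destination slices of the region that stays inside the frame
    src_y, dst_y = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    src_x, dst_x = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[dst_y, dst_x] = image[src_y, src_x]
    return out

def random_augment(image, rng, max_shift=2):
    """Return a randomly flipped, shifted, and rotated copy of `image`."""
    if rng.random() < 0.5:
        image = np.fliplr(image)                        # horizontal flip
    dx, dy = rng.integers(-max_shift, max_shift + 1, size=2)
    image = shift(image, int(dx), int(dy))              # translation
    return np.rot90(image, k=int(rng.integers(0, 4)))   # rotation by k * 90 degrees

rng = np.random.default_rng(seed=42)
original = np.arange(64, dtype=np.uint8).reshape(8, 8)
# Five new training samples derived from one original image
augmented = [random_augment(original, rng) for _ in range(5)]
print([a.shape for a in augmented])
```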

Roboflow Image Augmentations (“offline augmentation”)

You can augment the dataset by flipping, rotating, cropping, shearing, and blurring images. In Roboflow, augmentations are chained together. For example, if you select “flip horizontally” and “salt and pepper noise,” a given image will randomly be reflected as a horizontal flip and receive random salt and pepper noise. You also have access to advanced augmentations such as bounding-box-only augmentations, cutout, and mosaic.
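The chaining behavior can be sketched as a list of steps that each fire at random. In this hypothetical Python version, every step receives the image and its `(x_min, y_min, x_max, y_max)` boxes, so a horizontal flip also mirrors the boxes while salt and pepper noise leaves them untouched. The per-step probability `p` and the noise `amount` are assumptions; Roboflow's exact sampling may differ. Generating several augmented copies up front, as in the usage lines, is what makes this offline augmentation.

```python
import numpy as np

def flip_horizontal(image, boxes, rng):
    """Mirror the image left-right and mirror each (x_min, y_min, x_max, y_max) box."""
    w = image.shape[1]
    flipped = [(w - x_max, y_min, w - x_min, y_max) for x_min, y_min, x_max, y_max in boxes]
    return np.fliplr(image).copy(), flipped

def salt_and_pepper(image, boxes, rng, amount=0.05):
    """Turn roughly `amount` of the pixels black (pepper) or white (salt); boxes unchanged."""
    noisy = image.copy()
    mask = rng.random(image.shape[:2])
    noisy[mask < amount / 2] = 0
    noisy[(mask >= amount / 2) & (mask < amount)] = 255
    return noisy, boxes

def chain(*steps, p=0.5):
    """Compose augmentation steps; each one is applied independently with probability p."""
    def apply(image, boxes, rng):
        for step in steps:
            if rng.random() < p:
                image, boxes = step(image, boxes, rng)
        return image, boxes
    return apply

rng = np.random.default_rng(seed=0)
pipeline = chain(flip_horizontal, salt_and_pepper)
image = np.full((100, 200, 3), 128, dtype=np.uint8)   # hypothetical 200x100 RGB image
boxes = [(10, 20, 60, 80)]
# Offline augmentation: produce several augmented copies of each source image up front
copies = [pipeline(image, boxes, rng) for _ in range(3)]
for img, bxs in copies:
    print(img.shape, bxs)
```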

2. No-code AI for model training, pruning, and optimization.

Ultralytics HUB: Create and train your models with no code

Ultralytics HUB is where you bring your models to life by choosing the data they learn from. Your model uncovers patterns in that data and becomes a powerful tool you can deploy in the real world, all without writing a single line of code. Ultralytics HUB is fast, simple, and easy to use. It gives you access to all YOLOv5 architectures, and you can train any YOLOv5 model with a few clicks; no coding skills are necessary. You can also deploy trained YOLOv5 models to a range of destinations within seconds, including Google, AWS, and Azure APIs.

alwaysAI: Cloud-based server to train models

alwaysAI offers a cloud-based training server that integrates seamlessly with the alwaysAI platform. With alwaysAI’s Model Training Toolkit, available in the cloud, you can train an object detection model to recognize any object you choose, starting from a catalog of 100+ models. You can run training directly from the alwaysAI console. All you need to do is collect data, annotate it, and train on it using one of the base models alwaysAI offers. With a few clicks, you can start training your model. Once it is trained, you can test it by deploying it locally or on an edge device.

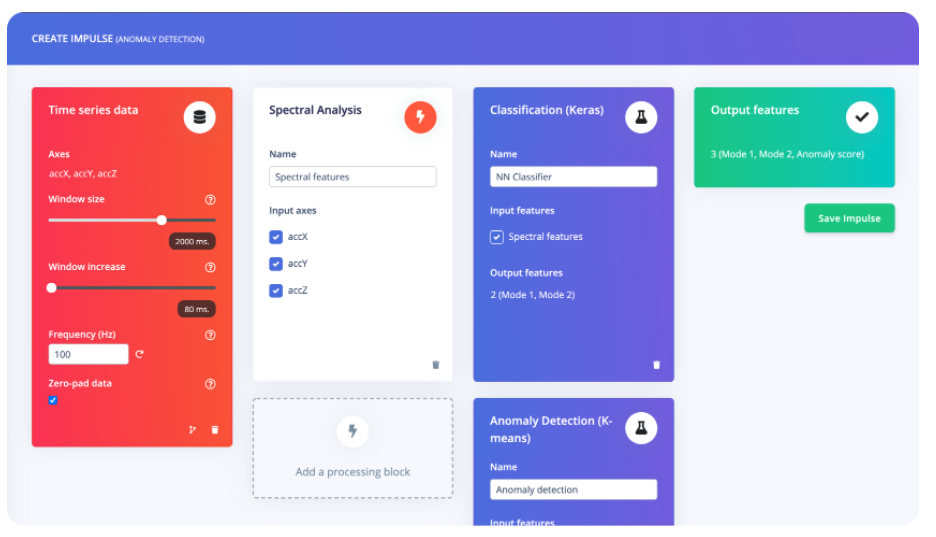

Edge Impulse: train a custom model by building your own ‘Impulse’ without code.

If you used Edge Impulse to collect data in the first step, you can now create your Impulse entirely in the Studio dashboard. A complete Impulse consists of three main building blocks: an input block, a processing block, and a learning block.

The input block indicates the type of input data you are training your model with. This can be time series (audio, vibration, movements) or images.

A processing block is basically a feature extractor. It consists of DSP (Digital Signal Processing) operations that are used to extract features that our model learns on. These operations vary depending on the type of data used in your project. If you don’t have DSP experience, the studio will recommend the processing block based on your input data.

A learning block is simply a neural network that is trained on your data. It can perform classification, regression, anomaly detection, image transfer learning, or object detection. It can also be a custom transfer learning block.
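The three blocks can be pictured as a small pipeline. The Python sketch below is a conceptual illustration, not Edge Impulse's API: the input block slices a 3-axis time series into windows, a toy processing block computes per-axis mean, standard deviation, and RMS, and a nearest-centroid classifier stands in for the learning block, where Edge Impulse would typically train a neural network. The window size, stride, and synthetic “idle” and “shaking” signals are hypothetical.

```python
import numpy as np

def input_block(signal, window, stride):
    """Slice a (T, axes) time series into overlapping (window, axes) chunks."""
    starts = range(0, len(signal) - window + 1, stride)
    return np.stack([signal[s:s + window] for s in starts])

def processing_block(windows):
    """Toy DSP feature extractor: per-axis mean, standard deviation, and RMS."""
    mean = windows.mean(axis=1)
    std = windows.std(axis=1)
    rms = np.sqrt((windows ** 2).mean(axis=1))
    return np.concatenate([mean, std, rms], axis=1)

class NearestCentroid:
    """Stand-in learning block: predicts the class whose mean feature vector is closest."""
    def fit(self, features, labels):
        self.classes_ = sorted(set(labels))
        labels = np.asarray(labels)
        self.centroids_ = np.stack([features[labels == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, features):
        dists = np.linalg.norm(features[:, None, :] - self.centroids_[None, :, :], axis=2)
        return [self.classes_[i] for i in dists.argmin(axis=1)]

rng = np.random.default_rng(0)
t = np.arange(400)[:, None]
idle = 0.05 * rng.standard_normal((400, 3))                        # sensor at rest
shaking = 2.0 * np.sin(t / 3.0) + 0.05 * rng.standard_normal((400, 3))
X_idle = processing_block(input_block(idle, window=50, stride=25))
X_shake = processing_block(input_block(shaking, window=50, stride=25))
features = np.vstack([X_idle, X_shake])
labels = ["idle"] * len(X_idle) + ["shaking"] * len(X_shake)
model = NearestCentroid().fit(features, labels)

test_signal = 2.0 * np.sin(t / 3.0) * np.ones((1, 3))              # clean 3-axis shaking
predictions = model.predict(processing_block(input_block(test_signal, 50, 25)))
print(predictions[:3])
```

Swapping `NearestCentroid` for a small neural network would not change the shape of the pipeline, which is the point of Edge Impulse's block design.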

3. No-code AI for deploying: accelerate the last mile of AI deployment at the Edge.

From 0.9 to 1: what is Seeed’s open-source no-code Edge AI Tool?

Check our GitHub repository here: https://github.com/Seeed-Studio/node-red-contrib-ml

We are pleased to bring you a new experience for quick and easy object recognition on the NVIDIA Jetson Nano Developer Kit and the Seeed reComputer series. With a few simple commands, you can set up the environment, and then use Node-RED-based nodes to deploy models, monitor a live video feed, identify objects, and output the results. The whole process takes just three blocks.

What is Node-RED?

Node-RED is a programming tool for wiring together hardware devices, APIs, and online services in new and interesting ways. It provides a browser-based editor that makes it easy to wire together flows using the wide range of nodes in the palette, which can be deployed to its runtime in a single click.
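Under the hood, a Node-RED flow is stored as JSON: a list of node objects, each with an `id`, a `type`, the `z` id of the tab it belongs to, editor coordinates, and a `wires` array listing which nodes each output port connects to. The Python sketch below builds a three-node linear flow in that shape. The node types `camera-input`, `object-detection`, and `live-view` are hypothetical placeholders, not the actual node names in node-red-contrib-ml; if you paste the output into the editor's Import dialog, node types that are not installed should be flagged as unknown.

```python
import json
import uuid

def node_id():
    """Short random hex id, similar in spirit to the ids Node-RED generates."""
    return uuid.uuid4().hex[:16]

def linear_flow(label, node_types):
    """Build a Node-RED-style flow in which each node's single output feeds the next node."""
    tab = {"id": node_id(), "type": "tab", "label": label}
    nodes = [{"id": node_id(), "type": t, "z": tab["id"], "name": t,
              "x": 150 + 200 * i, "y": 100, "wires": []}
             for i, t in enumerate(node_types)]
    for src, dst in zip(nodes, nodes[1:]):
        src["wires"] = [[dst["id"]]]   # output port 0 wired to the next node
    return [tab] + nodes

# Hypothetical node types standing in for the three blocks: input, detection, output
flow = linear_flow("People detection", ["camera-input", "object-detection", "live-view"])
print(json.dumps(flow, indent=2))
```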

We want to invite community experts to work with us on delivering AI applications at the edge! The co-inventing program connects us with developers and industry experts. We look forward to working hand in hand with you to provide tools and resources that help communities and businesses with last-mile challenges and accelerate your success.

How can I benefit from joining the No-code Edge AI Tool development?

- The opportunity to get free products or store coupons for Seeed edge AI devices powered by NVIDIA Jetson systems.

- The opportunity to integrate your technology into our current hardware for a deployable solution.

- Exclusive discount for Seeed hardware.

Co-inventing with Seeed as an Edge AI Open-source Project Partner

We welcome everyone who would like to join us in solving real-world problems with Seeed’s devices. We especially welcome participants who:

- have Node-RED, Docker, and AI experience, and would like to build new nodes, flows, templates, or more functions targeting full AI applications.

- have built AI applications using Jetson Nano/Xavier NX before.

Please submit a pull request to our repository, fill in this form, or contact edgeai@seeed.cc if you have any suggestions!

Please read the following notes for submitting PRs and receiving store coupons and samples:

- Reviewing applications takes up to 7 business days. We will also share approved pull requests (if any) on our Twitter, LinkedIn, Discord, and Edge AI Newsletter.

- We will send $20-$100 store coupons to contributors of approved PRs and suggestions. The coupons can be used for Seeed edge AI devices built with NVIDIA Jetson embedded systems.

Currently, we are focusing on applications for the reComputer series, based on the NVIDIA Jetson platform:

- reComputer J1010: built with the Jetson Nano 4GB module; M.2 Key E, 1x USB 3.0, 2x USB 2.0

- reComputer J1020: built with the Jetson Nano production module; M.2 Key M, 4x USB 3.0

AI software or solutions: join our Edge AI partner program!

Join Seeed as an Edge AI Partner: Transform Your Business, Delivering Real-World AI Together

- Integrate your unique AI technique into our current hardware: resell or co-brand licensed devices through our channels.

- Build your next-gen AI product powered by the NVIDIA Jetson module and bring your product concept to the market with Seeed’s Agile Manufacturing 0-∞.

- Co-inventing with Seeed. Combining our partners’ unique skills and Seeed’s hardware expertise, let’s unlock the next AI ideas!

Seeed Studio is a hardware innovator with a mission to become the most integrated platform for creating solutions for IoT, AI, and Edge Computing application scenarios. To help AI solve real-world problems faster, we look forward to integrating the latest software and hardware technologies.

Meanwhile, we are eager to empower developers and industry experts who know exactly where the pain points are. We hope to collaborate with those who are at the forefront of every industry and have a deeper understanding of people’s specific needs so that we can partner with them to provide more customized services to different verticals.