Everything About TensorFlow Lite and How to Start Deploying Your Machine Learning Model

This blog covers the essentials of TensorFlow Lite, shows how to get started with deploying your machine learning models, and introduces our recommended board, the XIAO BLE Sense.

Just heard of TensorFlow Lite and want to know more about it? This post walks through the following topics to give you a better understanding of it:

- What is TensorFlow Lite?

- Architectural Design of TensorFlow Lite

- How does TensorFlow Lite work?

- TensorFlow Lite vs TensorFlow: What’s the important distinction?

- Start deploying machine learning models with TensorFlow Lite

- Best Option of MCU for Deploying Machine Learning with TF Lite

- [Project] Speech Recognition on XIAO BLE Sense with TensorFlow Lite

What is TensorFlow Lite?

TensorFlow Lite (TF Lite) is an open-source, cross-platform deep learning framework launched by Google for on-device inference, which is designed to provide support for multiple platforms, including Android and iOS devices, embedded Linux, and microcontrollers. It can convert TensorFlow pre-trained models into special formats that can be optimized for speed or storage. It also helps developers run TensorFlow models on mobile, embedded, and IoT devices.

Simply put, TensorFlow Lite is for deploying trained models on mobile and embedded devices.

What are the features of TensorFlow Lite?

- Lightweight: Enables on-device machine learning inference with a small binary size and fast initialization/startup.

- High performance: Optimized for faster model loading and supports hardware acceleration.

- Cross-platform: Supports Android and iOS devices, embedded Linux, and microcontrollers.

- Low latency: Inference runs on the device, so data does not need a round trip to a server.

- Secure: Personal data does not leave the device, which protects privacy.

- Multiple language support: Includes Java, Swift, Objective-C, C++, and Python.

- Abundant examples: Provides end-to-end examples of common machine learning tasks on multiple platforms, such as image classification, object detection, and more.

Specific reference documentation can be found on the TensorFlow Lite GitHub.

These features of TensorFlow Lite match what inference at the edge requires.

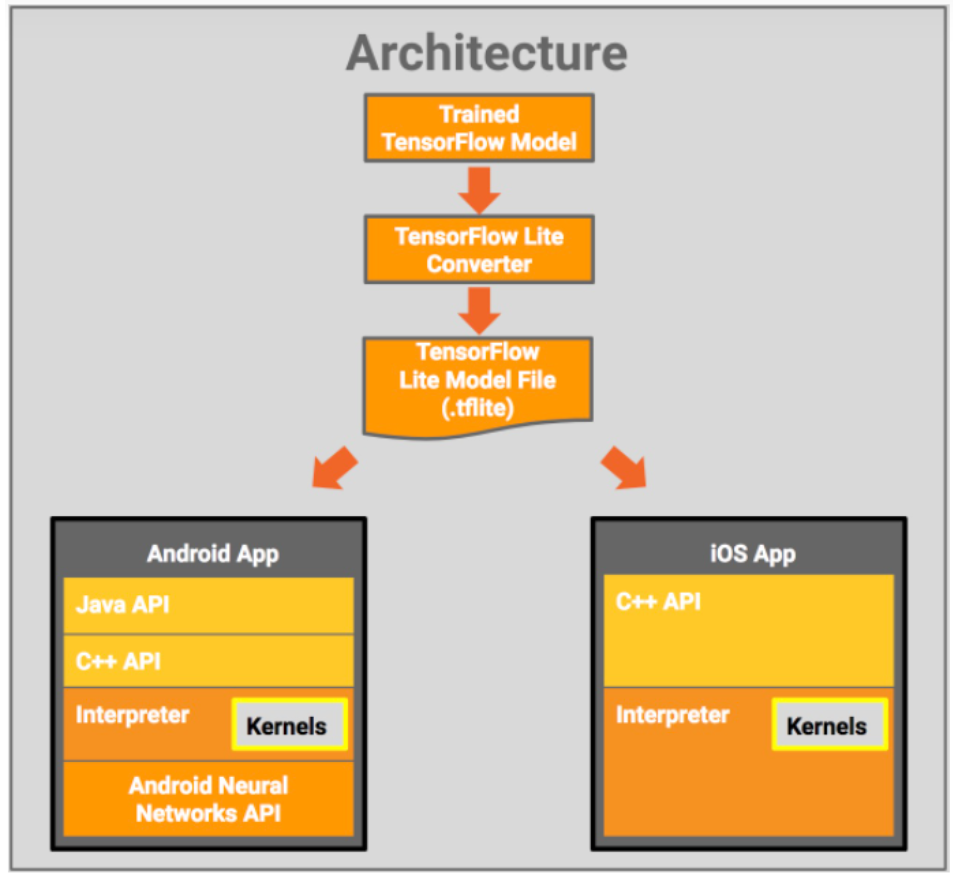

Architectural Design of TensorFlow Lite

From the figure above, we can see that the TensorFlow Lite workflow involves three components:

- TensorFlow Model: The trained TensorFlow model, saved to disk.

- TensorFlow Lite Converter: This program converts the trained model to the TensorFlow Lite file format.

- TensorFlow Lite Model File: This format is based on FlatBuffers and optimized for maximum speed and minimum size.

So how does it work, and what does each of these three components do? The next section walks through it.

How does TensorFlow Lite work?

1. Select and train a model

Suppose you want to perform an image classification task. The first step is to decide on a model for the task. You can choose to:

- Use pre-trained models like Inception V3, MobileNet, etc.

You can check out the TensorFlow Lite example apps here. Explore pre-trained TensorFlow Lite models and learn how to use them for various ML applications in the sample applications.

- Create your custom models

Create models with custom datasets using the TensorFlow Lite Model Maker tool.

- Apply transfer learning to pre-trained models

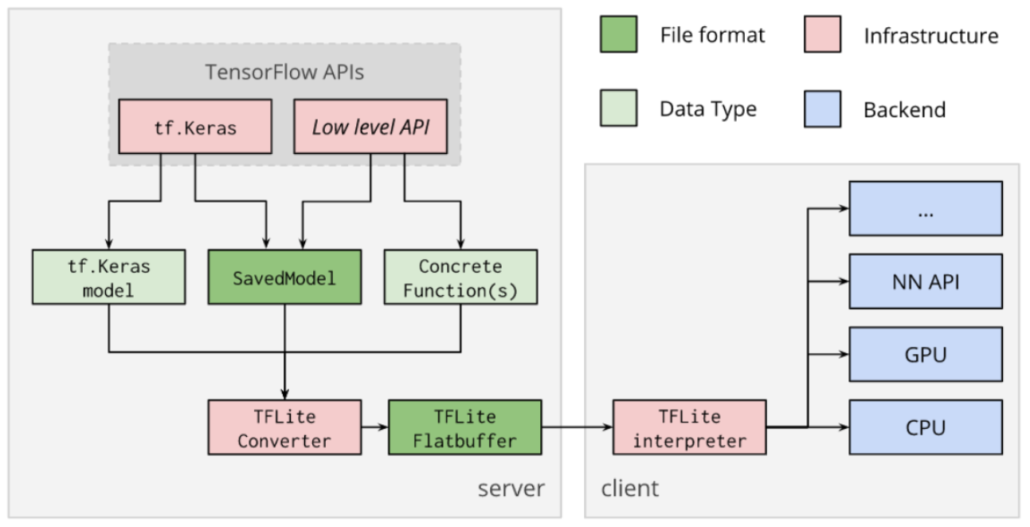
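The transfer learning option can be sketched roughly as follows. This is a minimal illustration, not a recommended recipe: it assumes the Keras API bundled with TensorFlow, picks MobileNetV2 as the pre-trained base, and uses a hypothetical helper name (`build_transfer_model`), input size, and class count that do not come from this article.

```python
import tensorflow as tf


def build_transfer_model(num_classes, weights="imagenet", input_shape=(96, 96, 3)):
    """Build a classifier that reuses a pre-trained MobileNetV2 feature extractor.

    All names and default values here are illustrative assumptions.
    """
    # Pre-trained base without its original 1000-class classification head.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape,
        include_top=False,
        weights=weights,
        pooling="avg",
    )
    # Freeze the base so only the new head is trained on your dataset.
    base.trainable = False

    inputs = tf.keras.Input(shape=input_shape)
    features = base(inputs, training=False)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(features)
    return tf.keras.Model(inputs, outputs)
```

Calling `build_transfer_model(num_classes=5)` downloads the ImageNet weights on first use. After that, you would compile the model and call `fit` on your own labeled images so that only the new output layer is trained.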

2. Convert models using the Converter

After training is complete, use the TensorFlow Lite Converter to convert the model into a TensorFlow Lite model. The TensorFlow Lite model is a lightweight version of the original that aims to preserve accuracy while having a smaller footprint. These properties make TF Lite models well suited to mobile and embedded devices.

Source: https://www.tensorflow.org/lite/convert/index
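As a rough sketch of the conversion step, the snippet below converts a small Keras model with the TensorFlow Lite Converter's Python API and writes the result to disk. The tiny stand-in model, the helper name `convert_to_tflite`, and the output file name `model.tflite` are assumptions for illustration. In practice you would pass in your own trained model.

```python
from pathlib import Path

import tensorflow as tf

# A tiny stand-in model (assumption); replace it with your trained network.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])


def convert_to_tflite(keras_model, optimize=False):
    """Convert a Keras model into serialized TensorFlow Lite FlatBuffer bytes."""
    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    if optimize:
        # Optional: ask the converter to apply its default optimizations.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


tflite_model = convert_to_tflite(model)

# Save the FlatBuffer so it can be shipped to a device (file name is hypothetical).
Path("model.tflite").write_bytes(tflite_model)
```

The `optimize` flag is left off by default so the sketch shows the plain conversion. Whether the default optimizations shrink a given model depends on the model itself.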

You may want more information about selecting a pre-trained model. TensorFlow Lite already supports many trained and optimized models:

- MobileNet: A class of vision models capable of recognizing 1000 different object classes, specially designed for efficient execution on mobile and embedded devices.

- Inception v3: An image recognition model similar in functionality to MobileNet, offering higher accuracy at the cost of a larger size.

- Smart Reply: An on-device conversational model that enables one-click replies to incoming conversational chat messages. First- and third-party messaging apps use this feature on Android Wear.

Inception v3 and MobileNets have been trained on the ImageNet dataset. You can retrain on your own image dataset through transfer learning.

For more on Smart Reply, you can refer to On-Device Conversational Modeling with TensorFlow Lite.

TensorFlow Lite vs TensorFlow: What’s the important distinction?

TensorFlow Lite is TensorFlow’s lightweight solution, designed specifically for mobile platforms and embedded devices.

TensorFlow Lite is intended to run predictions with an already trained model (it loads the model rather than training it). TensorFlow, on the other hand, is used to build and train the model.

TensorFlow can be used for both network training and inference, and it places certain requirements on the computing power of the device. For devices with limited computing power, such as mobile phones, tablets, and other embedded devices, TensorFlow Lite can provide efficient and reliable inference.

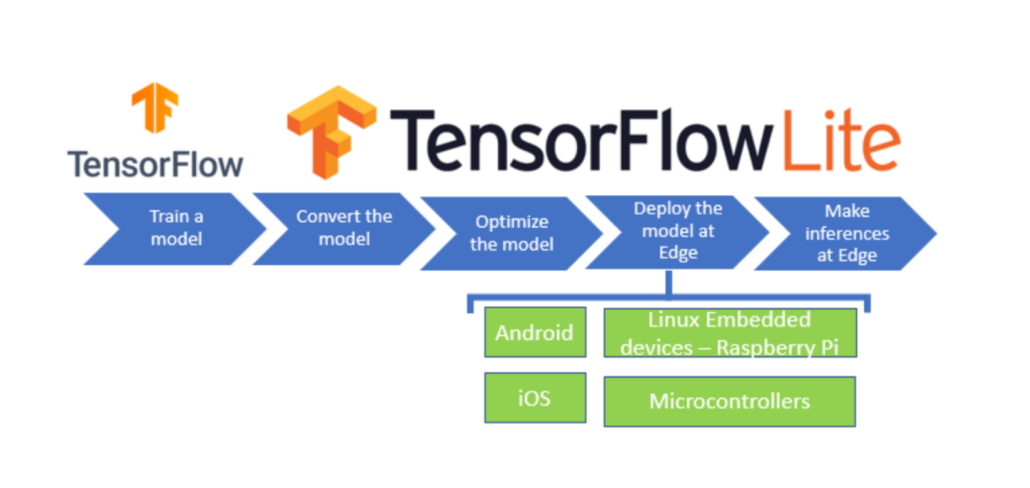
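The split between the two can be sketched in a few lines: full TensorFlow builds the model, and the TensorFlow Lite interpreter only loads it and runs predictions. This is a minimal sketch that assumes the Python `tf.lite.Interpreter` API. The untrained stand-in model, the helper name `run_inference`, and the sample input are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Stand-in for a model built and trained with full TensorFlow (assumption).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])
tflite_model = tf.lite.TFLiteConverter.from_keras_model(model).convert()


def run_inference(tflite_bytes, sample):
    """Load a TensorFlow Lite model from bytes and run one prediction."""
    interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
    interpreter.allocate_tensors()

    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    # Copy the input in, run the graph, and read the output back.
    interpreter.set_tensor(input_info["index"], sample.astype(input_info["dtype"]))
    interpreter.invoke()
    return interpreter.get_tensor(output_info["index"])


sample = np.array([[0.1, 0.2, 0.3, 0.4]], dtype=np.float32)
probabilities = run_inference(tflite_model, sample)
print(probabilities.shape)
```

Note that nothing in `run_inference` can update the model's weights. The interpreter only executes the converted graph, which is the distinction this section describes.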

Start deploying machine learning models with TensorFlow Lite

Machine learning (ML) is a discipline in which computers build models from data and use those models to simulate human intelligence. Machine learning is profoundly changing our lives and work. It is the core of artificial intelligence (AI) and the fundamental way to make computers intelligent.

TensorFlow Lite supports on-device machine learning inference with low latency and small storage. It provides end-to-end examples for common machine learning tasks on multiple platforms, such as image classification, object detection, pose estimation, question answering, and text classification. This makes TensorFlow Lite well suited to on-device machine learning, especially on small, low-power microcontrollers.

TensorFlow Lite can be used for machine learning on:

- Mobile devices (iOS and Android)

- Internet of Things (IoT)

- Embedded Linux devices (Raspberry Pi, reTerminal)

- Microcontrollers

There are many applications of TensorFlow Lite on the Raspberry Pi. We recommend checking out this blog, which describes how to run a TensorFlow model on reTerminal with the TensorFlow Lite Runtime.

Another blog shows how to use Wio Terminal and TensorFlow Lite for Microcontrollers to create an intelligent metro station.

Microcontrollers are typically small, low-power computing devices that can be embedded into hardware that needs to perform basic computations to implement various functions.

Why bring machine learning to tiny microcontrollers?

By bringing machine learning to tiny microcontrollers, we can make billions of devices in our lives smarter, including home appliances and IoT devices. Running machine learning on a microcontroller does not require expensive hardware or a reliable Internet connection, which greatly reduces the difficulty and cost of ML deployment.

That is why deploying machine learning models on MCUs makes a lot of sense.

Ready to apply machine learning in the real world?

Next, I will introduce a tiny microcontroller board that supports TensorFlow Lite and combines a small size with great value for money.

Let’s find out!

Best Option of MCU for Deploying Machine Learning with TensorFlow Lite

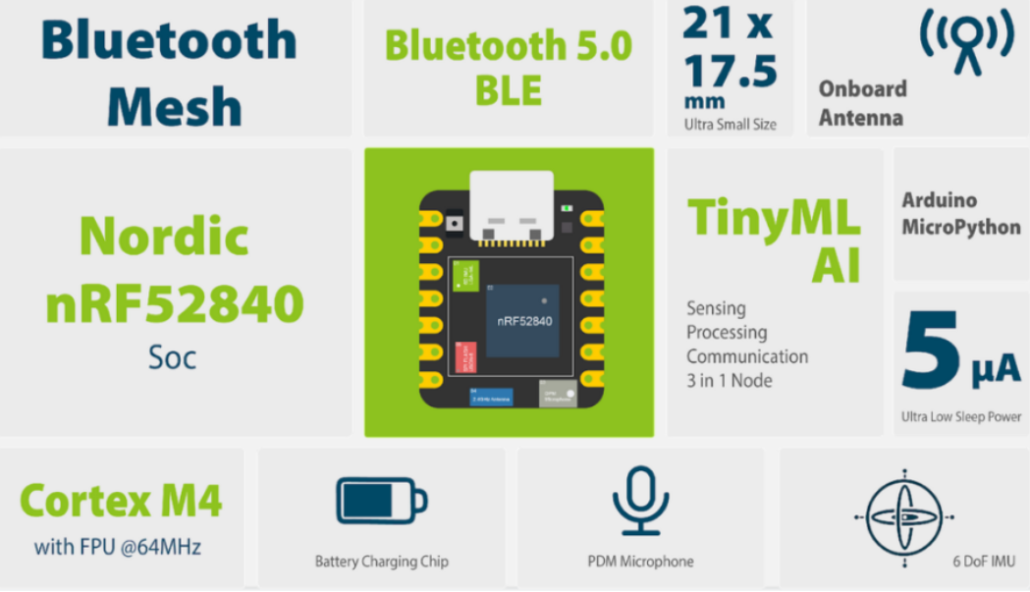

Seeed XIAO BLE nRF52840 Sense - Supports TinyML/TensorFlow Lite

Why is XIAO BLE Sense the best microcontroller option for deploying machine learning with TensorFlow Lite?

Seeed XIAO BLE nRF52840 Sense is a tiny Bluetooth Low Energy (BLE) development board designed for IoT and AI applications. It features an onboard antenna, a 6-DoF IMU, and a microphone, all of which make it an ideal board for running AI with TinyML and TensorFlow Lite.

Here are the key features of the XIAO BLE Sense:

- Powerful CPU: The 32-bit ARM® Cortex™-M4 processor supports deploying and running machine learning models.

- Wireless capabilities: Supports Bluetooth 5.0, NFC, and ZigBee, bringing more possibilities for IoT projects.

- Ultra-small size: Suitable for wearables and other tiny devices.

- Ultra-low sleep power: 5 μA in deep sleep mode.

- Supports Arduino, MicroPython, and CircuitPython for user-friendly development.

- Onboard PDM microphone and 6-axis IMU: Convenient for users and well suited to machine learning deployments with TensorFlow Lite, such as audio and gesture recognition.

- Affordable price with great value: At only $15.99, the Seeed XIAO BLE Sense costs less than half the official price of the Arduino Nano 33 BLE Sense.

Given these characteristics, the XIAO BLE Sense is well suited to TensorFlow Lite deployment and embedded machine learning projects.

If you are interested in programming embedded machine learning, our Codecraft graphical programming platform can help you quickly start your own ML project. We have also set up a #tinyml channel on our Discord server. Click to join for 24/7 making, sharing, discussing, and helping each other out.

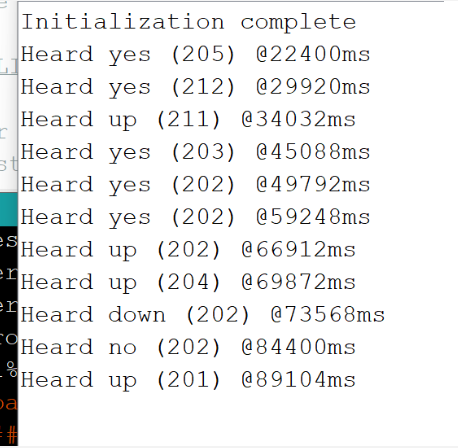

[Project] Speech Recognition on XIAO BLE Sense with TensorFlow Lite

This project runs a TensorFlow Lite model on the XIAO BLE Sense and performs speech recognition with the onboard microphone.

First, complete the initial hardware and software setup for the XIAO BLE Sense by following the steps in the “XIAO BLE (Sense) Getting Started” wiki. You also need to download the tflite-micro-arduino-examples library as a zip file and import it into the Arduino IDE.

Next, train on your data, generate a TensorFlow Lite model, and run inference on the board. You can view the specific software code in this wiki.
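The wiki holds the project's actual code. As a rough illustration of one step that TensorFlow Lite for Microcontrollers projects typically need, the sketch below turns the bytes of a converted model into a C array that an Arduino sketch can compile in. The function name `tflite_to_c_array`, the variable name `g_model`, the `alignas(16)` qualifier, and the placeholder bytes are assumptions for illustration and are not taken from the wiki.

```python
def tflite_to_c_array(model_bytes, var_name="g_model"):
    """Render model bytes as C/C++ source defining a byte array and its length."""
    lines = []
    # Emit 12 bytes per source line to keep the generated file readable.
    for start in range(0, len(model_bytes), 12):
        chunk = model_bytes[start:start + 12]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"alignas(16) const unsigned char {var_name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {var_name}_len = {len(model_bytes)};\n"
    )


# Placeholder bytes (assumption); in practice, read them from your .tflite file.
example_bytes = bytes([0x1C, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4C, 0x33])
print(tflite_to_c_array(example_bytes))
```

The generated text can be saved as a source file in the Arduino sketch folder, so the model is stored in the board's flash alongside the program.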

Summary

With a basic knowledge of TensorFlow Lite and the features of the XIAO BLE Sense in hand, you can deploy and run your machine learning project with TensorFlow Lite on this powerful MCU board!

We hope this guide has helped you learn more about TensorFlow Lite! If you have any questions about TensorFlow Lite, feel free to leave a comment in the comments section below.

Interested in XIAO series products? You can view all our XIAO family products here!