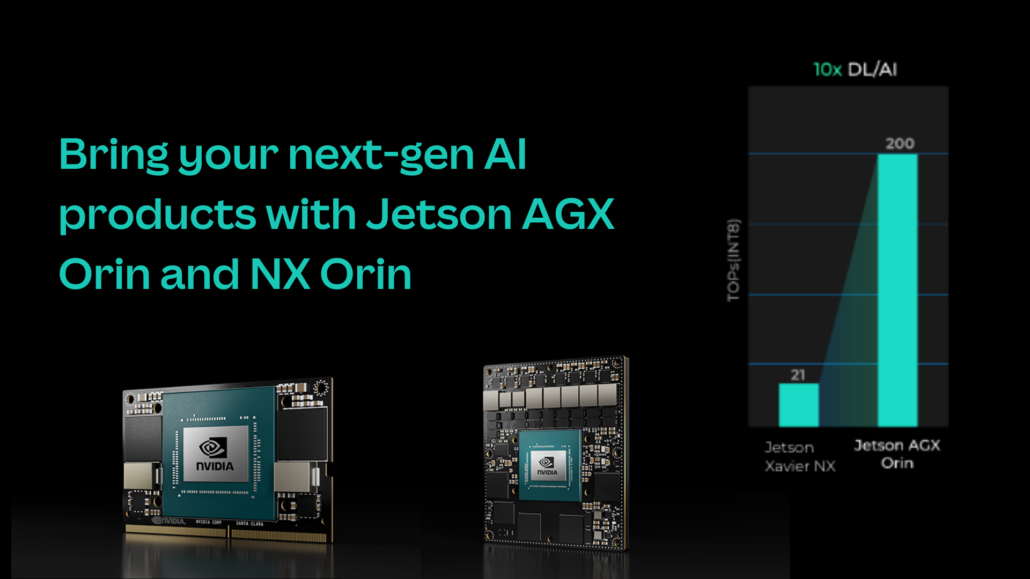

NVIDIA Orin: build your next-gen AI products with Jetson AGX Orin and Orin NX

Exciting news! The NVIDIA Jetson AGX Orin Developer Kit is now available for pre-order at Seeed, so you can be among the first to get one. Check out our all-new releases for the NVIDIA Jetson series:

- NVIDIA Jetson AGX Orin Developer Kit – Pre-order now

- reComputer Jetson-10, starting from $199, shipping 4/30

- reComputer Jetson-20 with Xavier NX 8GB/16GB, shipping in July

- reServer Orin, Q4

- reServer NX, Q2

Jetson Orin Production Module Roadmap 2022

According to NVIDIA’s updated Jetson roadmap, Jetson Orin production modules will become available starting in 2022. Production modules are essential for product design and for planning your AI product roadmap.

- Jetson AGX Orin 32GB in July, $899 for 1K+ units

- Jetson AGX Orin 64GB in October, $1599 for 1K+ units

- Jetson Orin NX 16GB in September, $599 for 1K+ units

- Jetson Orin NX 8GB in December, $399 for 1K+ units

The NVIDIA Jetson AGX Orin Developer Kit is now available for pre-order at Seeed. If you already use AGX Xavier in your product, or are considering it, this is a good time to test the AGX Orin Dev Kit and get 6 to 8 times the AI performance. The 64GB module will cost $300 more than its AGX Xavier counterpart, while the 32GB version stays at $899. According to NVIDIA’s roadmap, AGX Orin production modules for mass production will be available as early as July 2022.

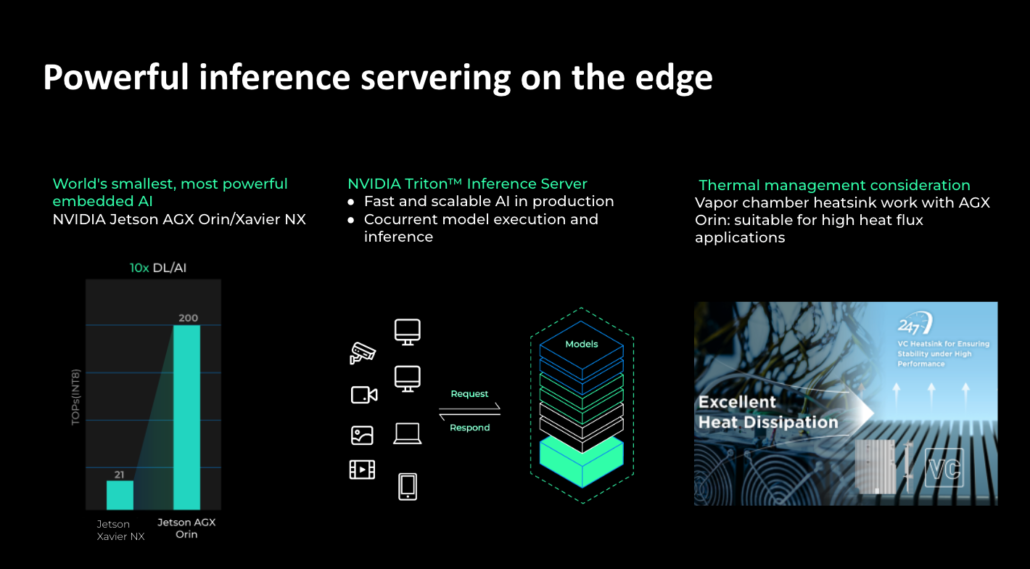

Building on this AI performance, Seeed has also released reServer Jetson AGX Orin, with a pre-built Triton Inference Server, a 256GB NVMe SSD, dual SATA III (6.0 Gbit/s) connectors, a high-speed 10 Gigabit Ethernet port, and a vapor chamber heatsink that keeps the device cool 24/7. reServer Jetson AGX Orin is designed as a powerful and reliable local inference server for heavy ML workloads and concurrent execution of multiple deep learning applications, bringing fast and scalable AI to production.

Over the past three years, Xavier NX has been widely deployed in agriculture, healthcare, transportation, and retail applications. In the same form factor, AI performance can now be raised by up to 5 times by switching to the Orin NX module. Will Orin NX be your next consideration?

In the meantime, don’t miss the Seeed Jetson-20, built with Xavier NX 8GB/16GB, starting from $599 and ready to ship in July! Start real-world development and deployment quickly with the NX module, the rich interfaces on the carrier board, thermal management, and the reComputer case.

Let’s take a close look at the specification comparison between Xavier NX and Orin NX, and between AGX Xavier and AGX Orin. We will keep posting updates on our new Jetson Orin products. Please also check out my previous blog, Compare NVIDIA Jetson AGX Orin with AGX Xavier: 8x AI performance, in-advance Ampere GPU, CPU, Memory & Storage.

Compare Jetson Orin to Jetson AGX Xavier and Xavier NX

Jetson AGX Orin 64GB and 32GB Modules

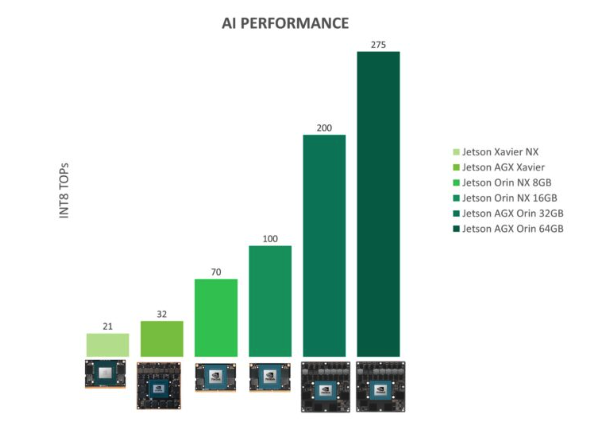

NVIDIA Jetson AGX Orin modules deliver up to 275 TOPS of AI performance with power configurable between 15W and 60W. This gives you up to 8X the performance of Jetson AGX Xavier in the same compact form factor for robotics and other autonomous machine use cases.

| Specification | Jetson AGX Orin 32GB | Jetson AGX Orin 64GB | Jetson AGX Xavier 64GB | Jetson AGX Xavier 32GB |
|---|---|---|---|---|
| Price (1K+ Units) | $899 | $1599 | $1299 | $899 |
| AI Performance | 200 TOPS | 275 TOPS | 32 TOPS | 32 TOPS |
| GPU | 1792-core NVIDIA Ampere GPU with 56 Tensor Cores | 2048-core NVIDIA Ampere GPU with 64 Tensor Cores | 512-core NVIDIA Volta™ GPU with 64 Tensor Cores | 512-core NVIDIA Volta™ GPU with 64 Tensor Cores |
| CPU | 8-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU, 2MB L2 + 4MB L3 | 12-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU, 3MB L2 + 6MB L3 | 8-core NVIDIA Carmel Arm® v8.2 64-bit CPU, 8MB L2 + 4MB L3 | 8-core NVIDIA Carmel Arm® v8.2 64-bit CPU, 8MB L2 + 4MB L3 |
| DL Accelerator | 2x NVDLA v2.0 | 2x NVDLA v2.0 | 2x NVDLA v1 | 2x NVDLA v1 |
| Memory | 32 GB | 64 GB | 64 GB | 32 GB |
| Storage | 64 GB eMMC 5.1 | 64 GB eMMC 5.1 | 32 GB eMMC 5.1 | 32 GB eMMC 5.1 |
| Power | 15W – 40W | 15W – 60W | 10W / 15W / 30W | 10W / 15W / 30W |
| Mechanical | 100 mm x 87 mm, 699-pin connector, integrated Thermal Transfer Plate | 100 mm x 87 mm, 699-pin connector, integrated Thermal Transfer Plate | 100 mm x 87 mm, 699-pin connector, integrated Thermal Transfer Plate | 100 mm x 87 mm, 699-pin connector, integrated Thermal Transfer Plate |
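As a rough way to read the AGX comparison table above, the listed peak TOPS can be divided by the 1K+ unit price and by the top of each power range. The sketch below does that arithmetic in Python. The `Module` class and the two function names are hypothetical helpers introduced here, and peak TOPS is a headline rating and not measured throughput, so treat the ratios as a rough guide only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    """One column of the AGX comparison table (hypothetical helper type)."""
    name: str
    price_usd: int    # 1K+ unit price
    peak_tops: int    # headline AI performance
    max_power_w: int  # upper end of the listed power range


# Values copied from the comparison table.
MODULES = [
    Module("Jetson AGX Orin 32GB", 899, 200, 40),
    Module("Jetson AGX Orin 64GB", 1599, 275, 60),
    Module("Jetson AGX Xavier 64GB", 1299, 32, 30),
    Module("Jetson AGX Xavier 32GB", 899, 32, 30),
]


def tops_per_dollar(m: Module) -> float:
    """Peak TOPS divided by the 1K+ unit price."""
    return m.peak_tops / m.price_usd


def tops_per_watt(m: Module) -> float:
    """Peak TOPS divided by the maximum listed power."""
    return m.peak_tops / m.max_power_w


for m in MODULES:
    print(f"{m.name.ljust(24)} {tops_per_dollar(m):.3f} TOPS/$  "
          f"{tops_per_watt(m):.2f} TOPS/W")
```

At the same $899 price, the AGX Orin 32GB lists 200 TOPS against 32 TOPS for the AGX Xavier 32GB, which is 6.25 times the rated performance per dollar.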

Jetson Orin NX 16GB and 8GB Modules

Jetson Orin NX modules deliver up to 100 TOPS of AI performance in the smallest Jetson form factor, with power configurable between 10W and 25W. The Orin NX Series is form factor compatible with the Jetson Xavier NX series and delivers up to 5x the performance, or up to 3X the performance at the same price.

| Specification | Jetson Xavier NX 16GB | Jetson Xavier NX 8GB | Jetson Orin NX 8GB | Jetson Orin NX 16GB |
|---|---|---|---|---|
| Price (1K+ Units) | $499 | $399 | $399 | $599 |
| AI Performance | 21 TOPS | 21 TOPS | 70 TOPS | 100 TOPS |
| GPU | 384-core NVIDIA Volta™ GPU with 48 Tensor Cores | 384-core NVIDIA Volta™ GPU with 48 Tensor Cores | 1024-core NVIDIA Ampere GPU with 32 Tensor Cores | 1024-core NVIDIA Ampere GPU with 32 Tensor Cores |
| CPU | 6-core NVIDIA Carmel Arm® v8.2 64-bit CPU, 6MB L2 + 4MB L3 | 6-core NVIDIA Carmel Arm® v8.2 64-bit CPU, 6MB L2 + 4MB L3 | 6-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU, 1.5MB L2 + 4MB L3 | 8-core NVIDIA Arm® Cortex A78AE v8.2 64-bit CPU, 2MB L2 + 4MB L3 |
| DL Accelerator | 2x NVDLA v1 | 2x NVDLA v1 | 1x NVDLA v2.0 | 2x NVDLA v2.0 |
| Memory | 16 GB | 8 GB | 8 GB | 16 GB |
| Storage | 16 GB eMMC 5.1 * | 16 GB eMMC 5.1 * | — (Supports external NVMe) | — (Supports external NVMe) |
| Power | 10W / 15W / 20W | 10W / 15W / 20W | 10W – 20W | 10W – 25W |
| Mechanical | 69.6 mm x 45 mm, 260-pin SO-DIMM connector | 69.6 mm x 45 mm, 260-pin SO-DIMM connector | 69.6 mm x 45 mm, 260-pin SO-DIMM connector | 69.6 mm x 45 mm, 260-pin SO-DIMM connector |
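The "up to 5x" and "up to 3x at the same price" claims for Orin NX can be checked against the TOPS and price figures in the table above. The following is a minimal sketch in Python with hypothetical names, assuming that peak TOPS is the performance measure behind those claims.

```python
XAVIER_NX_TOPS = 21         # both Xavier NX modules list 21 TOPS
XAVIER_NX_8GB_PRICE = 399   # USD, 1K+ units

# name -> (peak TOPS, 1K+ unit price in USD), copied from the table
ORIN_NX = {
    "Jetson Orin NX 8GB": (70, 399),
    "Jetson Orin NX 16GB": (100, 599),
}


def tops_ratio(new_tops: float, old_tops: float) -> float:
    """Return how many times larger new_tops is than old_tops."""
    return new_tops / old_tops


for name, (tops, price) in ORIN_NX.items():
    ratio = tops_ratio(tops, XAVIER_NX_TOPS)
    if price == XAVIER_NX_8GB_PRICE:
        note = "same price as Xavier NX 8GB"
    else:
        note = f"${price}"
    print(f"{name}: {ratio:.2f}x the Xavier NX rating ({note})")
```

The 16GB module works out to about 4.8x, which the text rounds up to 5x, and the 8GB module to about 3.3x at the same $399 price as the 8GB Xavier NX.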

reComputer Jetson: real-world AI at the edge, starting from $199

Built with Jetson Nano 4GB / Xavier NX 8GB/16GB

- Edge AI box that fits anywhere

- Embedded Jetson Nano/NX Module

- Pre-installed JetPack for easy deployment

- Nearly the same form factor as the Jetson Developer Kits, with a rich set of I/Os

- Stackable and expandable

reServer Jetson: inference center for the edge

Powered by Jetson NX

- Rapid network access: 2.5GbE port x1 (RX: 2.35 Gbits/sec, TX: 1.4 ~ 1.6 Gbits/sec), 1GbE port x1

- Hybrid connectivity: supports 5G, 4G, and LoRaWAN (modules not included)

- Rich peripherals: HDMI 2.0 x1, DP 1.4 x1, USB 3.1 Gen 2 (up to 10 Gbit/s) x2

- Expandable storage: dual SATA III data connectors for 3.5″/2.5″ SATA hard disk drives

- Excellent heat dissipation: quiet bottom cooling fan plus aluminum heatsink with cooling fan

- Ready to use: pre-installed JetPack 4.6.1

- Pre-installed 2.5-inch 256GB SSD x1, up to SATA3

Software Features

On the software side, the Jetson AGX Orin Developer Kit features NVIDIA's native AI software stack, with support for SDKs and software platforms including the following:

- NVIDIA JetPack

- NVIDIA Riva

- NVIDIA DeepStream

- NVIDIA Isaac

- NVIDIA TAO

The Seeed Jetson series is compatible with the entire NVIDIA Jetson software stack and with industry-leading AI development and device management platforms. Developers are welcome to join these communities, bring their AI ideas, and get ready for AI production.

- Edge Impulse: the leading development platform for machine learning on edge devices, free for developers and trusted by enterprises. Try it now for building models in minutes.

- AlwaysAI: a complete developer platform for Computer Vision applications on IoT devices. Get started today with our tutorial, and quickly deploy CV applications at the edge.

- YOLOv5: YOLO stands for ‘You Only Look Once’. It is an algorithm that detects and recognizes objects in an image in real time. YOLOv5 is a recent version of YOLO, built on the PyTorch framework, that improves on earlier iterations.

- Roboflow: annotate images super fast, right within your browser. Easily train a working computer vision model.

- Deci: empowers deep learning developers to accelerate inference on edge or cloud, reach production faster, and maximize hardware potential. Check out How to Deploy and Boost Inference on NVIDIA Jetson.

- Nimbus: log on to Nimbus, simply drag and drop to configure your robot application in the cloud.

- Allxon: Enable Efficient Remote Hardware Management Services for NVIDIA Jetson Platform. Enjoy an exclusive 90-day free trial to unlock all functions with code H4U-NMW-CPK. Read our partnership story here.

JETSON BENCHMARKS

NVIDIA Jetson is used to deploy a wide range of popular DNN models and ML frameworks to the edge with high-performance inferencing, for tasks like real-time classification and object detection, pose estimation, semantic segmentation, and natural language processing (NLP). The tables below show inferencing benchmarks from the NVIDIA Jetson submissions to the MLPerf Inference Edge category.

Jetson Xavier NX and Jetson AGX Orin MLPerf v2.0 Results

| Model | Jetson Xavier NX (TensorRT) | NVIDIA Orin (TensorRT) |
|---|---|---|
| Image Classification ResNet-50 | 1243.14 | 6138.84 |
| Object Detection SSD-small | 1782.53 | 6883.49 |
| Object Detection SSD-Large | 36.69 | 207.66 |
| Speech to Text RNN-T | 260.52 | 1110.23 |
| Natural Language Processing BERT-Large | 61.40 | 476.34 |

- NVIDIA Orin can be found in Jetson AGX Orin

- These are preview results, and can be found at: v2.0 Results | MLCommons

- These results were achieved with the NVIDIA Jetson AGX Orin Developer Kit running a preview of TensorRT 8.4.0 and CUDA 11.4

- ResNet-50, SSD-small, and SSD-Large were run on the GPU and both DLAs

- Learn more about these results in the blog: NVIDIA Orin Leaps Ahead in Edge AI in MLPerf Tests | NVIDIA Blog
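To put the MLPerf v2.0 table above in relative terms, each Orin result can be divided by the matching Xavier NX result. The sketch below does this in Python. The dictionary and function names are hypothetical, the model names are shortened, and the numbers are copied from the table without assuming anything about their units.

```python
# model -> (Jetson Xavier NX, NVIDIA Orin), copied from the MLPerf v2.0 table
MLPERF_V2_0 = {
    "ResNet-50": (1243.14, 6138.84),
    "SSD-small": (1782.53, 6883.49),
    "SSD-Large": (36.69, 207.66),
    "RNN-T": (260.52, 1110.23),
    "BERT-Large": (61.40, 476.34),
}


def speedups(results):
    """Map each model to the Orin result divided by the Xavier NX result."""
    return {model: orin / xavier_nx
            for model, (xavier_nx, orin) in results.items()}


ratios = speedups(MLPERF_V2_0)

# Print the models from the largest to the smallest gain.
for model, ratio in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
    print(f"{model.ljust(11)} {ratio:.2f}x")
```

On these figures the gain ranges from roughly 3.9x on SSD-small to roughly 7.8x on BERT-Large.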

Jetson AGX Xavier and Jetson Xavier NX MLPerf v1.1 Results

| Model | Jetson Xavier NX (TensorRT) | Jetson AGX Xavier 32GB (TensorRT) |
|---|---|---|
| Image Classification ResNet-50 | 1245.10 | 2039.11 |
| Object Detection SSD-small | 1786.91 | 2833.59 |
| Object Detection SSD-Large | 36.97 | 55.16 |
| Speech to Text RNN-T | 259.67 | 416.13 |
| Natural Language Processing BERT-Large | 61.34 | 96.73 |

- Full Results can be found at v1.1 Results | MLCommons

- These results were achieved on the Jetson AGX Xavier Developer Kit and the Jetson Xavier NX Developer Kit running JetPack 4.6, TensorRT 8.0.1, and CUDA 10.2

- ResNet-50, SSD-small, and SSD-Large were run on the GPU and both DLAs

- These MLPerf Results can be reproduced with the code in the following link: https://github.com/mlcommons/inference_results_v2.0/tree/master/closed/NVIDIA

Jetson Pretrained Model Benchmarks

NVIDIA pretrained models from NGC start you off with highly accurate and optimized models and model architectures for various use cases. Pretrained models are production-ready. You can further customize these models by training with your own real or synthetic data, using the NVIDIA TAO (Train-Adapt-Optimize) workflow to quickly build an accurate and ready-to-deploy model. The table below shows inferencing benchmarks for some of the pretrained models running on Jetson modules.

| Model | Jetson Xavier NX | Jetson AGX Xavier | Jetson AGX Orin |
|---|---|---|---|
| PeopleNet | 124 | 196 | 536 |
| Action Recognition 2D | 245 | 471 | 1577 |
| Action Recognition 3D | 21 | 32 | 105 |
| LPR Net | 706 | 1190 | 4118 |
| Dashcam Net | 425 | 671 | 1908 |
| Bodypose Net | 105 | 172 | 559 |
| ASR: Citrinet 1024 | 27 | 34 | 113 |
| NLP: BERT-base | 58 | 94 | 287 |
| TTS: Fastpitch-HifiGAN | 7 | 9 | 42 |

- These benchmarks were run using JetPack 5.0

JetPack 5.0 includes L4T with Linux kernel 5.10 and a reference file system based on Ubuntu 20.04. It enables a full compute stack update with CUDA 11.x and new versions of cuDNN and TensorRT. It will include UEFI as a CPU bootloader and will also bring support for OP-TEE as a trusted execution environment. Finally, there will be an update to DLA support for NVDLA 2.0, as well as a VPI update to support the next-generation PVA v2.

- Each Jetson module was run with maximum performance (MAX-N mode for Jetson AGX Xavier (32 GB) and Jetson AGX Orin, and 20W mode for Jetson Xavier NX)

- Reproduce these results by downloading these models from the NVIDIA NGC catalog
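One way to summarize the pretrained model table above in a single figure is the geometric mean of the per-model ratios, which is the usual choice for averaging speedups. The sketch below is an illustration in Python and not part of NVIDIA's methodology. The names are hypothetical and the numbers are copied from the table.

```python
from statistics import geometric_mean

# model -> (Jetson Xavier NX, Jetson AGX Xavier, Jetson AGX Orin)
PRETRAINED = {
    "PeopleNet": (124, 196, 536),
    "Action Recognition 2D": (245, 471, 1577),
    "Action Recognition 3D": (21, 32, 105),
    "LPR Net": (706, 1190, 4118),
    "Dashcam Net": (425, 671, 1908),
    "Bodypose Net": (105, 172, 559),
    "ASR: Citrinet 1024": (27, 34, 113),
    "NLP: BERT-base": (58, 94, 287),
    "TTS: Fastpitch-HifiGAN": (7, 9, 42),
}

XAVIER_NX, AGX_XAVIER, AGX_ORIN = 0, 1, 2  # column indices in each tuple


def mean_speedup(results, baseline):
    """Geometric mean of AGX Orin results divided by the baseline column."""
    ratios = [row[AGX_ORIN] / row[baseline] for row in results.values()]
    return geometric_mean(ratios)


print(f"AGX Orin vs AGX Xavier: {mean_speedup(PRETRAINED, AGX_XAVIER):.2f}x")
print(f"AGX Orin vs Xavier NX:  {mean_speedup(PRETRAINED, XAVIER_NX):.2f}x")
```

Across these nine models, AGX Orin comes out at about 3.3x the AGX Xavier figures and about 5.1x the Xavier NX figures.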

NVIDIA Jetson powered Edge AI platform at Seeed

At Seeed, you will find everything you need to work with the NVIDIA Jetson platform: official NVIDIA Jetson Dev Kits, Seeed-designed carrier boards and edge devices, and accessories.

Seeed will continue working on the Jetson product line and will be ready to combine our partners’ unique technology with Seeed’s hardware expertise for an end-to-end solution.

Join Seeed as an Edge AI Partner: Transform Your Business, Delivering Real-World AI Together

- Integrate your unique AI technology into our existing hardware, and resell or co-brand licensed devices through our channels.

- Build your next-gen AI product powered by the NVIDIA Jetson module and bring your product concept to the market with Seeed’s Agile Manufacturing 0-∞.

- Co-invent with Seeed: by combining our partners’ unique skills with Seeed’s hardware expertise, let’s unlock the next AI ideas.