OpenVINO for Intel 11th Gen Tiger Lake Processors

While Intel’s next-generation hardware has captured much of the attention, many of the software frameworks that have emerged alongside it are equally significant and impressive. Today, we’ll be talking specifically about OpenVINO for edge AI deployment.

11th Gen Intel® Core™ Processors

Intel’s 11th Generation Tiger Lake processors were first revealed in September 2020 and have been well received since. Built on an upgraded 10nm semiconductor fabrication process, down from the previous 14nm, these Tiger Lake processors demonstrated both a large leap in performance and a significant increase in power efficiency.

Not only that, the higher end of the Tiger Lake series comes with a substantial bump in graphics performance thanks to the new Intel® Iris® Xe Graphics, which is great news for handling machine learning workloads. It also features an AI engine powered by Intel® Deep Learning Boost, which further speeds up machine learning inference on these new processors.

Paired with frameworks like the OpenVINO toolkit, we expect Intel’s 11th Gen Tiger Lake processors to play an integral role in providing a foundation for the rise of edge AI applications like computer vision!

What is OpenVINO?

The OpenVINO toolkit is a comprehensive toolkit for deploying edge machine learning applications such as computer vision, speech recognition, natural language processing, recommendation systems and more on Intel hardware. With support for modern neural network architectures, including convolutional, recurrent and attention-based networks, OpenVINO was designed as an end-to-end solution that standardises, optimises and finally deploys your edge machine learning workloads effectively.

OpenVINO’s Core Features

- Enables CNN-based deep learning inference on the edge

- Supports seamless, integrated heterogeneous execution across Intel hardware:

  - Intel® CPUs

  - Intel® Processor Graphics

  - Intel® Neural Compute Stick 2

  - Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

- Speeds time-to-market via an easy-to-use library of computer vision functions and pre-optimized kernels

- Includes optimized calls for computer vision standards, including OpenCV and OpenCL™
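
To make the heterogeneous execution point a little more concrete: OpenVINO addresses each hardware target by a short device name, and a `HETERO:` prefix followed by a priority list asks the runtime to split a network across several devices. The sketch below only builds and validates such a device string. The helper `hetero_device` and the `KNOWN_DEVICES` table are hypothetical names made up for this illustration, and the plugin names are the ones used by OpenVINO releases from around the time of this article, so check them against the version you have installed.

```python
# Device plugin names mapped to the hardware listed above.
# Assumption: names as used by OpenVINO releases contemporary with this article.
KNOWN_DEVICES = {
    "CPU": "Intel CPUs",
    "GPU": "Intel Processor Graphics",
    "MYRIAD": "Intel Neural Compute Stick 2",
    "HDDL": "Intel Vision Accelerator Design with Intel Movidius VPUs",
}


def hetero_device(*priority):
    """Build a HETERO device string, highest-priority device first.

    Hypothetical helper for illustration; it is not part of OpenVINO.
    """
    if not priority:
        raise ValueError("at least one device is required")
    unknown = [name for name in priority if name not in KNOWN_DEVICES]
    if unknown:
        raise ValueError(f"unknown device(s): {unknown}")
    return "HETERO:" + ",".join(priority)


# Prefer the integrated GPU and fall back to the CPU for unsupported layers.
print(hetero_device("GPU", "CPU"))  # HETERO:GPU,CPU
```

The resulting string is what you would pass as the device argument when loading a network, in place of a single name such as `CPU`.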

The OpenVINO Toolkit workflow starts from its Model Optimizer, a cross-platform command-line tool that converts a trained neural network from its source framework to an open-source, nGraph-compatible Intermediate Representation (IR) for use in OpenVINO. Many popular frameworks are supported, like Caffe, TensorFlow, MXNet, Kaldi and ONNX. Aside from converting, it will also optimise your model by removing extra layers and combining operations to make it simpler and faster.
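
As a rough illustration of what "combining operations" can mean, a common example is folding a batch normalisation step into the linear layer that precedes it, so that two operations become one at inference time. The sketch below does this for a single scalar weight in plain Python. It is a toy under stated assumptions, not the Model Optimizer's actual implementation, and all function names and numbers are made up for the example.

```python
import math


def linear(x, w, b):
    """A one-dimensional linear layer: y = w * x + b."""
    return w * x + b


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalisation with fixed statistics."""
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fold_batch_norm(w, b, gamma, beta, mean, var, eps=1e-5):
    """Return (w', b') so that linear(x, w', b') equals batch_norm(linear(x, w, b))."""
    scale = gamma / math.sqrt(var + eps)
    return w * scale, (b - mean) * scale + beta


# Illustrative values only.
w, b = 0.8, -0.1
bn = dict(gamma=1.5, beta=0.2, mean=0.3, var=4.0)

w_folded, b_folded = fold_batch_norm(w, b, **bn)

for x in (-2.0, 0.0, 3.5):
    two_ops = batch_norm(linear(x, w, b), **bn)
    one_op = linear(x, w_folded, b_folded)
    # The fused layer matches the original pair up to floating-point rounding.
    assert math.isclose(two_ops, one_op, rel_tol=1e-9, abs_tol=1e-12)
```

The fused layer produces the same outputs while doing less work per inference, which is the kind of saving that matters on constrained edge hardware.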

From here, you can then take advantage of the comprehensive suite of tools offered by OpenVINO, such as:

- Deep Learning Inference Engine – A unified API for high performance inference on supported Intel hardware

- Post-Training Optimization tool – A tool to calibrate a model and then execute it in INT8 precision

- Deep Learning Streamer (DL Streamer) – Streaming analytics framework for constructing graphs of media analytics components

- OpenCV – Optimised OpenCV computer vision library for Intel® hardware

- Intel® Media SDK – Hardware accelerated video decode, encode & processing (Linux only)

- Additional Tools – Benchmark App, Cross Check Tool, Compile tool, and more!
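
To give a feel for what running a model in INT8 precision involves, here is a minimal sketch of symmetric 8-bit quantisation in plain Python: floating-point values are mapped to integers in the range -127 to 127 using a single scale factor, then mapped back. This is only the basic idea. The Post-Training Optimization tool calibrates on sample data and is considerably more involved, and the function names and weights here are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one symmetric scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


# Illustrative weights only.
weights = [0.02, -1.27, 0.635, 0.9]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Each restored value is within half a quantisation step of the original.
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, scale, worst_error)
```

The trade-off is a small, bounded loss of precision in exchange for smaller models and faster integer arithmetic on hardware that supports it.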

Extensive Documentation & Examples

To help users get started, OpenVINO comes with extensive documentation and examples. For instance, the Inference Engine Samples provide a set of reference console applications to accelerate your deployment process. Furthermore, the Open Model Zoo has application demos with pre-trained models and extensive documentation, which you can use as templates to build your deep learning application!

OpenVINO can also be used through the Deep Learning Workbench, a convenient web-based graphical interface.

Why use OpenVINO?

As edge AI applications play an increasingly important role in industries and smart cities, many challenges must be addressed in developing them. OpenVINO solves three key problems when deploying deep learning applications on the edge.

Faster Processing for Real Time Applications

While it’s theoretically possible to run state-of-the-art machine learning inference even on relatively weak hardware, processing speed is a major concern when deploying real-time applications on the edge.

Vision-based analysis of security footage and anomaly detection in industrial machinery, for example, are applications that must respond with as little latency as possible to avoid further damage. The optimisations offered by OpenVINO are therefore a significant advantage for such applications when they are deployed on Intel hardware, since they will run faster and more effectively.

Optimised Power Consumption

With the boost in processing power from Intel’s new 11th Gen Tiger Lake processors, concerns surrounding edge AI applications will now turn to power efficiency instead. Running clunky, unoptimised runtimes on edge devices will increase power consumption and hurt the sustainability of large-scale edge solutions in the long run. OpenVINO’s optimisations will thus not only allow machine learning applications to run faster, but also let them consume less energy as they operate.

Effective, Streamlined Development Tools

OpenVINO offers a comprehensive set of tools to build effective machine learning inference runtimes for edge deployment, which can significantly reduce the time-to-market and development costs of your applications. Recall also that you can use many popular machine learning frameworks with OpenVINO, so it’s easy to port any existing projects over to take advantage of these tools! For these reasons, I think that OpenVINO will definitely be a game-changer for developers who use it consistently in the long run.

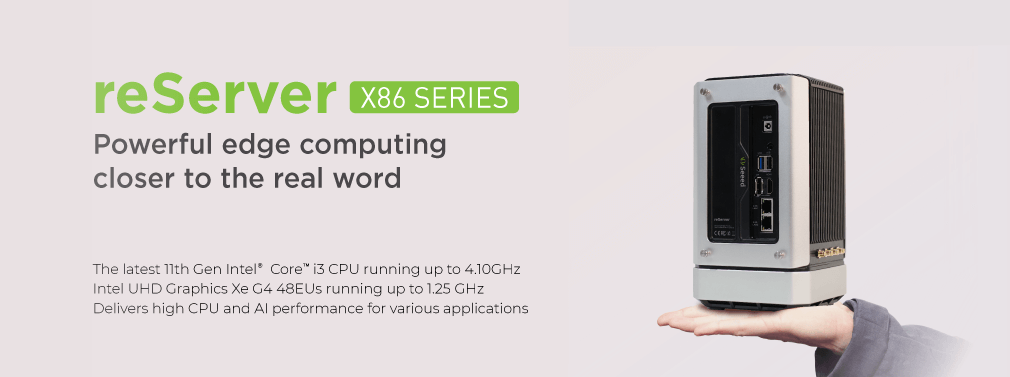

Introducing reServer

If you’re looking for hardware that can leverage the advantages of both the Intel Tiger Lake processors and OpenVINO, look no further than the reServer. The reServer is a compact and powerful server that can be used in both edge and cloud computing scenarios, powered by the latest 11th Generation Intel Core i3 CPU with Intel UHD Xe Graphics. Naturally, this means that it’s fully compatible with OpenVINO and you can use it to optimise and deploy deep learning applications for the edge effectively.

Not only that, reServer comes with a variety of network connectivity capabilities, including two high-speed 2.5-Gigabit Ethernet ports and hybrid connectivity with 5G, LoRaWAN, BLE and WiFi, as well as integrated cooling and dual SATA III connectors for internally mounting 3.5” SATA hard disk drives. It truly is a complete solution for powerful edge computing applications.

Product Features

- CPU: Latest 11th Gen Intel® Core™ i3 CPU running up to 4.10GHz

- Graphics: Intel UHD Graphics Xe G4 48EUs running up to 1.25 GHz

- Rich Peripherals: Dual 2.5-Gigabit Ethernet, USB 3.0 Type-A, USB 2.0 Type-A, HDMI and DP output

- Hybrid connectivity including 5G, LoRa, BLE and WiFi (Additional Modules required for 5G and LoRa)

- Dual SATA III 6.0 Gbps data connectors for 3.5” SATA hard disk drives with sufficient internal enclosure storage space

- M.2 B-Key/ M-Key/ E-Key for expandability with SSDs or 4G and 5G modules

- Compact server design, with an overall dimension of 124mm*132mm*233mm

- Quiet cooling fan with a large VC heat sink for excellent heat dissipation

- Easy to install, upgrade and maintain thanks to easy access to the internal components

Don’t wait, learn more on the Seeed Online Store!

Summary & More Resources

OpenVINO is a framework that helps you convert, optimise and deploy machine learning inference runtimes effectively on Intel hardware. If you’re already using Intel’s hardware, you should definitely look at OpenVINO to optimise the deployment of your edge machine learning applications. Otherwise, this may be an important factor to consider if you’re still in the process of choosing your hardware!

For more resources, be sure to check out the following links!

- OpenVINO™ Toolkit Overview – OpenVINO

- Tiger Lake UP3 Processors – Intel

- What is an Edge Server? – Edge Computing Embedded Systems

- Edge AI – What is it and What can it do for Edge IoT?