How NVIDIA Jetson Clusters Supercharge GPU Edge Computing

Edge computing has grown rapidly in importance and in its range of applications in today’s IoT-connected world. Within this field, cluster computing on the edge, GPU clustering included, has been one significant way of easing the transition away from cloud computing by delivering large amounts of computing power in compact and portable form factors. In this article, we will specifically discuss how you can use NVIDIA Jetson Clusters to bring your edge computing applications to the next level!

We will be covering the following content and more!

- What is a GPU Cluster?

- What is an NVIDIA Jetson Cluster & What are its Benefits?

- GPU Cluster Applications & Examples

- Building an NVIDIA Jetson Cluster: Hardware Recommendations

- Tutorial: Use Kubernetes with an NVIDIA Jetson Cluster for GPU-Powered Computing

What is GPU Clustering on the Edge?

Edge GPU clusters are computer clusters that are equipped with GPUs (Graphics Processing Units) and deployed on the edge. Edge computing, in turn, describes computational tasks that are performed on devices physically located where the application runs. This is in contrast to cloud computing, where these processes are handled remotely.

Firstly, What is Cluster Computing?

Computer clusters are defined as a group of computers that work together so that they can be viewed as a single system. A cluster is then typically used to process large workloads by distributing tasks across the multiple computers in the system. There are three major types of clusters, each serving different purposes and contributing their own set of benefits to cluster computing!

- High Availability – Ensures that applications are always available by rerouting requests to another node in the event of a failure.

- Load Balancing – Spreads computing workloads evenly across slave nodes to handle high job volumes.

- High Performance – Multiple slave nodes are used in parallel to increase computing power for tasks with high computing requirements.
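To make the load balancing idea concrete, here is a minimal sketch of the simplest possible strategy, round-robin, in which each incoming job goes to the next node in turn. The function name `assign_round_robin` and the job names are hypothetical; real cluster schedulers (such as the Kubernetes scheduler used later in this article) weigh many more factors.

```shell
#!/bin/sh
# Round-robin sketch: hand each job to the next worker in turn.
# Usage: assign_round_robin "<space-separated workers>" job...
assign_round_robin() {
    workers=$1
    shift
    # Count the workers by letting the shell split the list.
    count=0
    for w in $workers; do count=$((count + 1)); done
    [ "$count" -gt 0 ] || return 1
    i=0
    for job in "$@"; do
        # 1-based position of the worker that receives this job.
        target=$((i % count + 1))
        n=0
        for w in $workers; do
            n=$((n + 1))
            if [ "$n" -eq "$target" ]; then
                echo "$job -> $w"
            fi
        done
        i=$((i + 1))
    done
}

# Example with three workers and five hypothetical jobs.
assign_round_robin "se2 se3 se4" job1 job2 job3 job4 job5
```

With three workers, the fourth job wraps around to the first worker again, which is what spreads the load evenly.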

Cluster Computing + GPUs = GPU Clusters

As their name suggests, GPU Clusters consist of computers that are equipped with GPUs. GPUs, in contrast with CPUs or central processing units, specialise in parallel computing. They break complex problems into many smaller tasks and compute them all at once to achieve high throughput.

Traditionally, graphics processing alone benefited from GPUs, since the rendering of textures, lighting and shapes had to be done simultaneously to produce smooth motion graphics. However, with modern GPU frameworks, their parallel computing capabilities can now be extended to other areas like data processing and machine learning – giving rise to General Purpose GPUs or GPGPUs!

I’ve covered both Cluster Computing & GPU Clusters in depth in my previous articles, so be sure to check them out for more details!

What are NVIDIA Jetson Clusters & Why Use Them?

Traditionally, cluster computing was unique to cloud computing, but with advances in Single Board Computers (SBCs) & network infrastructure, this is no longer the case. In this section, we will be looking at NVIDIA Jetson Clusters, which are GPU Clusters built from NVIDIA’s Jetson modules, and why you should consider them for your edge computing applications!

Introducing the NVIDIA Jetson Series

NVIDIA’s Jetson modules are GPU-enabled compute modules that provide great performance and power efficiency to run software effectively on the edge. GPU capabilities allow these modules to power a range of applications that require higher performance, including AI-powered Network Video Recorders (NVRs), automated optical inspection systems in precision manufacturing, and autonomous robots!

Furthermore, NVIDIA’s reputation in the semiconductor industry is outstanding. With their GPUs powering the majority of the global GPU applications, NVIDIA has for years consistently designed and produced high quality GPUs for a vast range of consumer, industry, and research applications. Naturally, the NVIDIA Jetson series is no exception, featuring great build quality and performance!

CUDA & NVIDIA JetPack

Beyond the great hardware in NVIDIA’s products, however, the greatest advantage of running an NVIDIA Jetson powered GPU cluster arguably lies in its software capabilities, namely CUDA & NVIDIA JetPack compatibility.

CUDA is NVIDIA’s industry-leading proprietary GPGPU framework for programming and developing applications with GPUs. It features GPU-accelerated libraries, debugging and optimisation tools, a C/C++ compiler and a runtime library that enables you to build and deploy your application on a variety of computer architectures. With excellent support, integration and development compatibility with numerous GPU applications, CUDA is a preferred choice for many developers worldwide.

NVIDIA’s JetPack SDK is a comprehensive solution from NVIDIA to build AI applications, and supports all Jetson modules & developer kits. It consists of a Linux operating system, CUDA-X accelerated libraries, as well as APIs for Deep Learning, Computer Vision, Accelerated Computing and Multimedia. Even better, it includes samples, documentation and developer tools to help you get developing easily!

Thus, building a GPU cluster powered by NVIDIA Jetson products provides not only powerful hardware, but also easy-to-use software and libraries that will be sure to accelerate your development process!

Building NVIDIA Jetson Clusters with Jetson Mate

So you’ve decided to build your very own NVIDIA Jetson GPU cluster, and you’re looking for a place to start. You may have already come across some examples that wire multiple Jetson Nano Development Kits together. While that definitely works, the wiring can get rather complex, and even worse: that form factor is hardly suitable for field deployment – which is the whole point of an edge computing system!

To tackle these challenges, Seeed is proud to share our complete edge GPU clustering solution with the Jetson Mate and NVIDIA’s Jetson Nano / Xavier NX modules. Complete with a carrier board and the Jetson modules, you can easily get your hands on a complete NVIDIA GPU Cluster that is powered by NVIDIA’s industry-leading GPUs for edge applications!

You can now pick up the hardware for a complete edge GPU cluster from Seeed in two convenient packages:

- Jetson Mate Cluster Standard with 1 Jetson Nano SoM and 3 Jetson Xavier NX SoMs

- Jetson Mate Cluster Advanced with 4 Jetson Xavier NX SoMs

Continue reading to learn more about the Jetson Mate, Jetson Nano and Jetson Xavier NX!

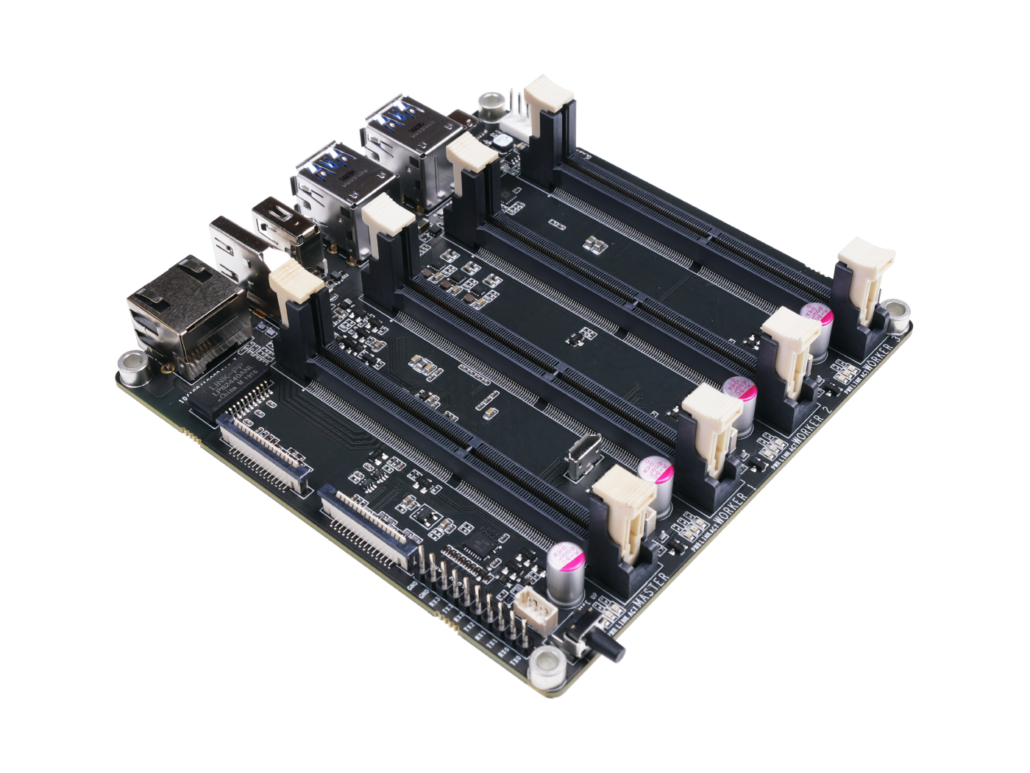

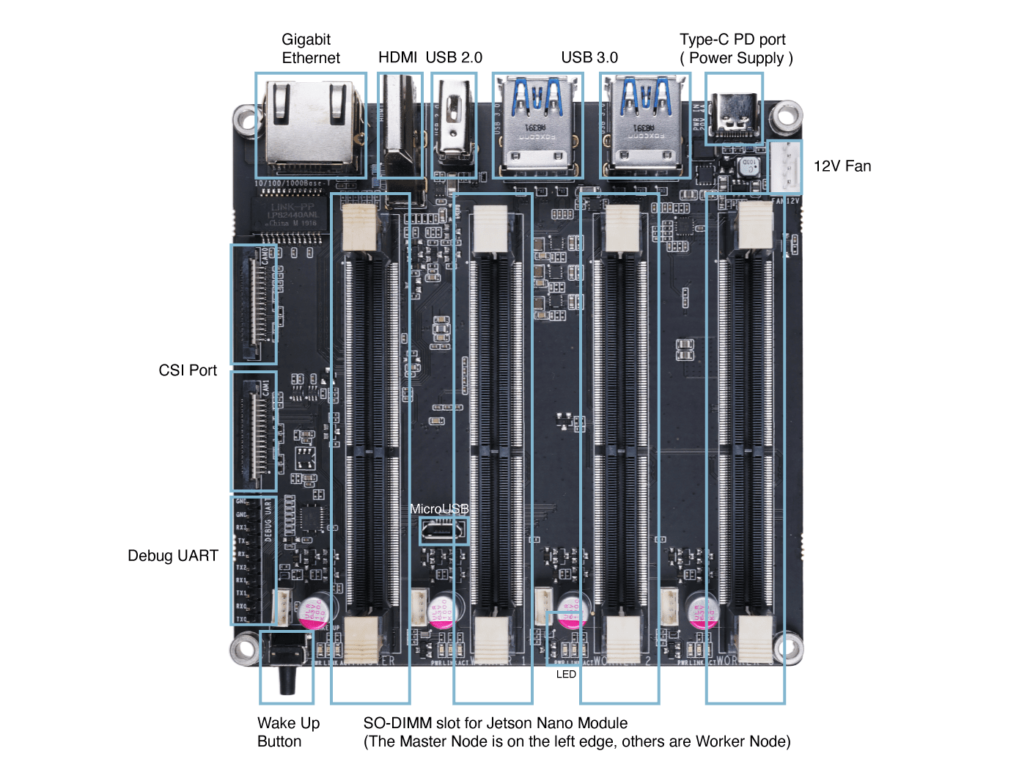

Introducing the Jetson Mate

The Jetson Mate carrier board is a comprehensive and reliable solution that has been specially designed for building NVIDIA Jetson clusters. Equipped with an onboard 5-port gigabit switch that enables up to 4 SoMs to communicate with each other, as well as independent power for 3 worker/slave nodes, the Jetson Mate with its rich peripherals (CSI, HDMI, USB, Ethernet) and inbuilt fan is a complete solution for building NVIDIA GPU clusters on the edge.

The Jetson Mate can house up to 4 of NVIDIA’s very own Jetson Nano / Xavier NX SoMs in its compact form factor to deliver immense computing power on the edge. With an easy-to-build design that can be easily set up with our step-by-step guide, the Jetson Mate also offers high flexibility and performance for your GPU clusters.

To learn more about the Jetson Mate, be sure to visit its product page on the Seeed Online Store!

NVIDIA Jetson Nano Module

The Jetson Nano is designed specially for AI applications and works with NVIDIA’s JetPack SDK. With its 128 NVIDIA CUDA Cores, you can easily build, deploy and manage powerful machine learning applications at the edge with low power consumption.

Product Features:

- Quad-Core ARM Cortex-A57 MPCore Processor

- NVIDIA Maxwell GPU with 128 NVIDIA CUDA Cores

- 4GB 64-Bit LPDDR4 Memory at 1600MHz 25.6GBps

- 16GB eMMC Storage

- NVIDIA JetPack SDK for AI Development

Pick up your very own NVIDIA Jetson Nano Module on the Seeed Online Store!

NVIDIA® Jetson Xavier™ NX Module

While a tad bit pricier than the Jetson Nano, the Jetson Xavier NX module absolutely pulls out all the stops when it comes to GPU compute power. With 384 NVIDIA CUDA cores and 48 Tensor cores for machine learning, the Jetson Xavier NX is capable of up to a whopping 6 TFLOPS (trillion floating point operations per second) for FP16 values and 21 TOPS (trillion operations) for INT8 values. With the same NVIDIA Jetpack compatibility, the Jetson Xavier NX module will be sure to cover all the bases of your edge computing application.
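As a rough back-of-the-envelope figure, the per-module number above can be multiplied by the number of modules in a cluster. The sketch below does only that; the function name `cluster_peak_tops` is hypothetical, and the result is a theoretical peak that real workloads will not reach once networking and scheduling overhead are taken into account.

```shell
#!/bin/sh
# Theoretical aggregate INT8 peak for a cluster of identical modules.
# 21 TOPS per Jetson Xavier NX is the figure quoted in this article.
cluster_peak_tops() {
    modules=$1
    tops_per_module=$2
    echo $((modules * tops_per_module))
}

# Four Xavier NX modules, as in a fully populated Jetson Mate.
echo "4 x Xavier NX: $(cluster_peak_tops 4 21) TOPS (theoretical peak)"
```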

Product Features:

- Compact size SoM powerful enough for advanced AI applications with low power consumption

- Supports entire NVIDIA Software Stack for application development and optimization

- More than 10X the performance of Jetson TX2

- Enables development of AI applications using NVIDIA JetPack™ SDK

- Easy to build, deploy, and manage AI at the edge

- Flexible and scalable platform to get to market with reduced development costs

- Continuous updates over the lifetime of the product

Pick up your very own NVIDIA Jetson Xavier NX Module on the Seeed Online Store!

Using NVIDIA GPU Clusters in Edge Computing

NVIDIA GPU Clusters powered by the Jetson Mate & Jetson SoMs can help you achieve limitless computing applications on the edge. Here are some example use cases where GPU clusters have become extremely important!

Graphics Rendering

We’ve talked about how GPUs have evolved to perform high-throughput general purpose computing, but that certainly isn’t to say that their original role of graphics rendering is now obsolete! Photo and video editing, 3D modelling, and virtual or augmented reality are just some of the many modern applications that continue to rely on the traditional functions of GPUs. Unfortunately, laptops equipped with discrete GPUs are large and expensive, whereas desktop solutions lack the portability required in numerous situations.

For a mobile and affordable solution, consider deploying an NVIDIA GPU cluster! Packed in a much smaller form factor yet still delivering significant amounts of power, an edge GPU cluster lets you offload graphics-intensive workloads from your main computer and process them more efficiently.

Machine Learning on the Edge

NVIDIA Jetson clusters specialise in handling edge artificial intelligence (Edge AI) or edge machine learning workloads. Machine learning, or neural networks / deep learning in particular, require a considerable amount of computational power due to the great number of calculations that must be performed. As a result, powerful GPUs housed in data centres have long been indispensable for handling simultaneous calculations in machine learning workloads.

Today, however, that is no longer necessarily the case. With an NVIDIA Jetson cluster, you can easily take advantage of powerful hardware acceleration and optimised machine learning libraries and APIs to develop effective solutions for machine learning on the edge. In this way, our edge devices can now be made smarter, with capabilities to perform complex tasks like making predictions, processing complex data, and even administering solutions.

Using an NVIDIA Jetson cluster is just one example of how you can take advantage of the shift to Edge AI. In fact, along with it come several key benefits, including reduced latency, reduced bandwidth requirement and cost, increased data security and improved reliability. Read about Edge AI and its transformative effects in Edge IoT in my previous article!

Deploy Scalable Applications with Kubernetes

These days, it’s almost impossible to talk about cluster computing without mentioning Kubernetes, which is an open-source platform for managing containerised workloads and services. While it’s definitely not the only solution available, it is one of the most popular ways to deploy computer clusters in 2021. You can think of it as a management interface that helps you manage your clusters, scaling resources up or down as required to make the most efficient use of your NVIDIA GPU clusters and more!

Tutorial: Use Kubernetes with NVIDIA Jetson Clusters for GPU-Powered Computing

In this section, I’m going to show you just how easy it is to set up your very own NVIDIA Jetson Cluster running Kubernetes with the Jetson Mate and the powerful Jetson Nano modules. You can also read the complete tutorial on our Seeed Wiki page.

Required Materials

To follow along with this tutorial, the following items are recommended. Take note that you will need to have at least two Jetson Nano modules, since we will need one to act as the master node, and the other to act as the worker node.

- Jetson Mate Carrier Board

- Jetson Nano Module (at least 2)

- Qualified Type-C Power Adapter (65W minimum) with PD Protocol

Naturally, you can also skip the trouble of buying individual components by opting directly for the Jetson Mate Cluster Standard or Jetson Mate Cluster Advanced!

Install & Configure Jetson OS

We will have to configure the operating system for each of the modules using NVIDIA’s official SDK manager.

First, choose the target hardware as shown below.

Then, choose the OS and Libraries you want to install:

Download and install the files. While downloading, insert the Jetson Nano compute module into the main node of the Jetson Mate.

Following this, short the 2 GND pins according to the picture shown.

Connect the Jetson Mate to your computer via the micro USB port and power on the machine by pressing the wake up button.

The final step is to flash the operating system onto the compute module. When the installation of the OS and software library is completed, you will see a window pop up. Select Manual Setup option, then click flash and wait until completion. That’s it!

Take note that all the modules can only be flashed when installed on the main node. You are required to flash and configure all your modules one by one on the main node.

Running Kubernetes on our Cluster

In the following steps, we will install and configure Kubernetes to run on our cluster of NVIDIA Jetson Nano modules!

Configuring Docker

For both Worker & Master modules, we need to configure the docker runtime to use “nvidia” as default.

Modify the file located at /etc/docker/daemon.json as follows.

{
  "default-runtime": "nvidia",
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  }
}

Restart the Docker daemon with the following command:

sudo systemctl daemon-reload && sudo systemctl restart docker

Then validate the Docker default runtime as NVIDIA.

sudo docker info | grep -i runtime

Here’s a sample output:

Runtimes: nvidia runc
Default Runtime: nvidia

Installing Kubernetes
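Before installing the Kubernetes packages, it can be worth confirming on each module that the Docker configuration from the previous step took effect. The following is a minimal sketch and not part of the original tutorial: the function name `has_nvidia_default_runtime` is hypothetical, and it relies on a plain `grep` rather than a real JSON parser.

```shell
#!/bin/sh
# Succeeds if the given daemon.json sets "default-runtime" to "nvidia".
# Tolerates whitespace around the colon, but does not parse JSON properly.
has_nvidia_default_runtime() {
    grep -Eq '"default-runtime"[[:space:]]*:[[:space:]]*"nvidia"' "$1"
}

# Check the path used in this tutorial unless another file is given.
config=${1:-/etc/docker/daemon.json}
if [ -r "$config" ] && has_nvidia_default_runtime "$config"; then
    echo "OK: nvidia is the default Docker runtime"
else
    echo "WARNING: nvidia is not set as the default runtime in $config"
fi
```

Running this on every module before continuing catches a node that was skipped during the Docker step.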

For both Worker & Master modules, install kubelet, kubeadm, and kubectl with the following commands in the command line.

sudo apt-get update && sudo apt-get install -y apt-transport-https curl
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

# Add the Kubernetes repo
cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF

sudo apt update && sudo apt install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl

Disable the swap. Note: You have to turn this off every time you reboot.

sudo swapoff -a

Compile deviceQuery, which we will use in the following steps.

cd /usr/local/cuda/samples/1_Utilities/deviceQuery && sudo make

Configure Kubernetes
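Before initializing the cluster, it may help to confirm that swap is really off, since it comes back after every reboot and the kubelet, in its default configuration, refuses to start while swap is enabled. The sketch below reads the kernel's swap table; the function name `swap_is_off` is hypothetical, and the check assumes the usual `/proc/swaps` layout of one header line followed by one line per active swap device.

```shell
#!/bin/sh
# Succeeds when the swap table has no entries after its header line.
swap_is_off() {
    [ "$(tail -n +2 "$1" | grep -c .)" -eq 0 ]
}

# Read the kernel's swap table unless another file is given.
swaps=${1:-/proc/swaps}
if [ -r "$swaps" ] && swap_is_off "$swaps"; then
    echo "swap is off"
else
    echo "swap appears to be on (or $swaps is unreadable): run 'sudo swapoff -a' first"
fi
```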

On the Master module only, initialize the cluster:

sudo kubeadm init --pod-network-cidr=10.244.0.0/16

The output shows you the commands that can be executed for deploying a pod network to the cluster, as well as commands to join the cluster. If everything is successful, you should see something similar to this at the end of the output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.2.114:6443 --token zqqoy7.9oi8dpkfmqkop2p5 \
    --discovery-token-ca-cert-hash sha256:71270ea137214422221319c1bdb9ba6d4b76abfa2506753703ed654a90c4982b

Following the instructions from the output, run the following commands:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install a pod-network add-on to the control plane node. In this case, we use flannel, whose default pod network (10.244.0.0/16) matches the --pod-network-cidr value passed to kubeadm init.

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Make sure that all pods are up and running:

kubectl get pods --all-namespaces

Here’s the sample output:

NAMESPACE     NAME                                READY   STATUS    RESTARTS   AGE
kube-system   kube-flannel-ds-arm64-gz28t         1/1     Running   0          2m8s
kube-system   coredns-5c98db65d4-d4kgh            1/1     Running   0          9m8s
kube-system   coredns-5c98db65d4-h6x8m            1/1     Running   0          9m8s
kube-system   etcd-#yourhost                      1/1     Running   0          8m25s
kube-system   kube-apiserver-#yourhost            1/1     Running   0          8m7s
kube-system   kube-controller-manager-#yourhost   1/1     Running   0          8m3s
kube-system   kube-proxy-6sh42                    1/1     Running   0          9m7s
kube-system   kube-scheduler-#yourhost            1/1     Running   0          8m26s

On the Worker modules only, it is now time to add each node to the cluster, which is simply a matter of running the kubeadm join command provided at the end of the kubeadm init output. For each Jetson Nano you want to add to your cluster, log into the host and run the command below. Your token and ca-cert-hash will vary.

sudo kubeadm join 192.168.2.114:6443 --token zqqoy7.9oi8dpkfmqkop2p5 \
    --discovery-token-ca-cert-hash sha256:71270ea137214422221319c1bdb9ba6d4b76abfa2506753703ed654a90c4982b

On the Master node only, you should now be able to see the new nodes when running the following command:

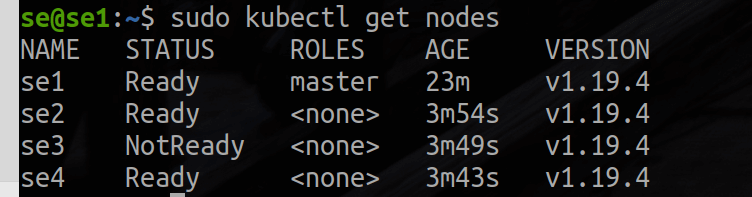

kubectl get nodes

Here’s the sample output for three worker nodes.

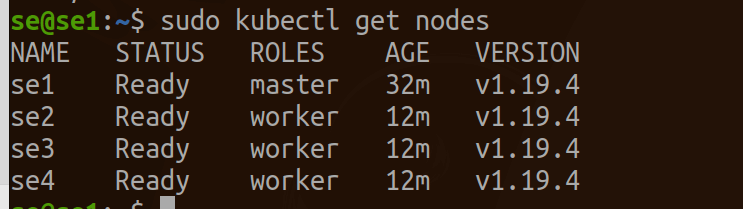
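Newly joined nodes can take a little while to become ready. As a minimal sketch, the check below reads the tabular output of `kubectl get nodes` and lists any node whose STATUS column is not exactly `Ready`. The function name `not_ready_nodes` is hypothetical, and the strict match also flags cordoned nodes, which report `Ready,SchedulingDisabled`.

```shell
#!/bin/sh
# Reads `kubectl get nodes` output on stdin and prints the name of every
# node whose STATUS column (second field) is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Only query the cluster if kubectl exists and the API server answers.
if command -v kubectl >/dev/null 2>&1 && nodes=$(kubectl get nodes 2>/dev/null); then
    pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
    if [ -n "$pending" ]; then
        echo "Not ready yet: $pending"
    else
        echo "All nodes report Ready"
    fi
else
    echo "kubectl is not available or the cluster is unreachable"
fi
```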

To keep track of your nodes, tag each worker node as a worker by running the following commands according to the number of modules you have! Since this example uses three workers, we will run:

kubectl label node se2 node-role.kubernetes.io/worker=worker

kubectl label node se3 node-role.kubernetes.io/worker=worker

kubectl label node se4 node-role.kubernetes.io/worker=worker
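With more modules, the same labelling can be written as a loop. The sketch below only prints the commands so they can be reviewed first; the function name `label_worker_cmds` is hypothetical, and the node names are the ones used in this example.

```shell
#!/bin/sh
# Prints one `kubectl label` command per worker node name given.
label_worker_cmds() {
    for node in "$@"; do
        echo "kubectl label node $node node-role.kubernetes.io/worker=worker"
    done
}

# Dry run for the three workers in this tutorial.
label_worker_cmds se2 se3 se4
```

Once the printed commands look right, piping them to the shell on the Master node (`label_worker_cmds se2 se3 se4 | sh`) applies them.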

Now you have your very own Kubernetes cluster running on your Jetson Mate & Jetson Nano modules! From here, you can do a variety of things, such as using a Jupyter runtime to run data analytics or machine learning workloads on the cluster!

To read more on how you can do that, be sure to visit the Seeed Wiki Page!
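The deviceQuery sample compiled earlier offers a quick sanity check that the GPU on each module is usable. The sketch below runs it directly on the host rather than through Kubernetes, and assumes the binary was built in place by the `make` step above; the function name `device_query_passed` is hypothetical.

```shell
#!/bin/sh
# Reads deviceQuery output on stdin; succeeds if it contains a passing result.
device_query_passed() {
    grep -q 'Result = PASS'
}

# Location of the binary if it was built in the samples directory.
dq=/usr/local/cuda/samples/1_Utilities/deviceQuery/deviceQuery
if [ -x "$dq" ]; then
    if "$dq" | device_query_passed; then
        echo "GPU check passed"
    else
        echo "GPU check failed"
    fi
else
    echo "deviceQuery has not been built at $dq"
fi
```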

Summary & More Resources

If you’re looking for a reliable and tested option for building edge GPU clusters, I strongly recommend building an NVIDIA Jetson cluster with the Jetson Mate. As a comprehensive hardware solution that is backed with industry-leading support and software compatibility from NVIDIA, you’ll be hard pressed to find a better solution for your edge computing application!

Keen to learn more about clustering or edge computing? Try the following resources:

- Cluster Computing on the Edge – What, Why & How to Get Started

- Building Edge GPU Clusters – Edge Computing Guide

- Edge AI – What is it and What can it do for Edge IoT?

- How Machine Learning has Transformed Industrial IoT