Edge AI 101: What Is It, Why Is It Important, and How to Implement Edge AI?

Updated on Feb 23rd, 2024

Edge AI, short for edge artificial intelligence, is immensely popular right now. It’s the next frontier of development for Internet of Things (IoT) systems – but how much do you really know about it? Join me in this article to learn all about Edge AI and how industry leaders are using it to change the way we live and work.

This article will cover the following content:

- AI, Deep Learning and Machine Learning

- What is Edge AI?

- Edge Computing vs. Cloud Computing

- How does Edge AI Benefit Us?

- Why is Edge AI Important?

- Choosing Hardware for Edge AI: SBCs, GPUs, TPUs, and Microcontrollers

- TinyML at the Edge

- Edge AI solutions

- Getting Started with Edge AI – Devices, Tools, Courses, Projects & More

What is Edge AI?

In the simplest terms, Edge AI refers to deploying artificial intelligence, in the form of machine learning (ML) algorithms, on edge devices and running inference directly on them.

Machine learning is a broad field that has seen tremendous progress in recent years. It is based on the principle that a computer can autonomously improve its own performance on a given task by learning from data – sometimes even beyond the capabilities of humans.

Edge AI is the combination of edge computing and AI to run machine learning tasks directly on connected edge devices. Edge AI can perform a myriad of tasks such as object detection, speech recognition, fingerprint recognition, fraud detection, autonomous driving, etc. Real-world company applications are explored later in this article.

Today, machine learning can perform many advanced tasks, including but not limited to:

- Computer vision: image classification, object detection, semantic segmentation

- Check out our Computer Vision 101 and get started with projects!

- Speech recognition, natural language processing (NLP), chatbots, translation

- Forecasting (e.g., weather and stock markets), recommendation systems

- Anomaly detection, Predictive maintenance

You might wonder: machine learning has been around for a long time, so what makes Edge AI suddenly so special? To shed some light on this, let’s start from the very beginning with what AI and deep learning are, before we dive into what the “edge” in Edge AI really means. Hopefully, by the end of this article, you will understand Edge AI better and be ready to start using it or integrate it into your current processes.

What is AI?

Artificial Intelligence (AI) is the simulation of human intelligence processes by machines that are programmed to mimic human abilities such as learning, analyzing, and predicting.

An AI pipeline consists of three phases that allow an AI system to work effectively.

Later in this article, we will cover useful tools and development platforms for each of these three phases to help you get started quickly.

1. Data Management

This phase is where data scientists wrangle extensive volumes of data and uncover patterns and insights within them. Data wrangling is the process of transforming raw data into readily usable formats with the intent of making it more valuable for analytics. It is sometimes known as data cleaning, remediation, or munging.
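As a minimal illustration of data wrangling, the sketch below cleans a few hypothetical temperature records: it drops a row with a missing value, parses strings into numbers, and converts Fahrenheit readings to Celsius so every row uses the same unit. The field names and data are made up for the example.

```python
from statistics import mean

# Hypothetical raw sensor export: values stored as strings, mixed units, one gap.
RAW_ROWS = [
    {"timestamp": "2024-02-01T10:00", "temp": "21.5", "unit": "C"},
    {"timestamp": "2024-02-01T10:05", "temp": "", "unit": "C"},
    {"timestamp": "2024-02-01T10:10", "temp": "71.6", "unit": "F"},
    {"timestamp": "2024-02-01T10:15", "temp": "22.1", "unit": "C"},
]


def to_celsius(value: float, unit: str) -> float:
    """Convert a reading to Celsius so every row shares one unit."""
    return (value - 32) * 5 / 9 if unit == "F" else value


def wrangle(rows):
    """Drop incomplete rows, parse strings to floats, and normalize units."""
    clean = []
    for row in rows:
        if not row["temp"]:  # remediation: skip rows with a missing reading
            continue
        celsius = to_celsius(float(row["temp"]), row["unit"])
        clean.append({"timestamp": row["timestamp"], "temp_c": round(celsius, 2)})
    return clean


cleaned = wrangle(RAW_ROWS)
print(cleaned)
print("mean temperature (C):", round(mean(r["temp_c"] for r in cleaned), 2))
```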

2. Model Training

The next phase is model training, in which the prepared dataset is used to train a model to perform a specific task. In effect, the AI is created when training is complete. This phase typically requires a huge amount of data and can take a substantial amount of time, because the model needs rich, varied training examples to become accurate and effective.
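To make the training phase concrete, here is a minimal sketch that trains the simplest possible model, a line `y = w*x + b`, with gradient descent on a tiny made-up dataset. Real deep learning frameworks automate these gradient computations, but the loop of predicting, measuring error, and adjusting parameters is the same idea; the learning rate and epoch count are illustrative choices.

```python
# Tiny, made-up dataset that follows y = 2x + 1 exactly.
DATA = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]


def train(data, lr=0.02, epochs=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step each parameter against its gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train(DATA)
print(f"learned w={w:.4f}, b={b:.4f}")
```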

3. Inference

The last phase is where the AI is deployed to do its job: solving the problems it was designed to solve. It can be deployed on servers, PCs, the cloud, or edge devices. Using the model trained in phase two, it processes new input data and outputs a result based on the parameters it learned.
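Inference is usually much lighter than training: the learned parameters are fixed, and the device only computes a prediction for each new input. The sketch below assumes a hypothetical, already-trained logistic model that flags a machine fault from temperature and vibration; the weights, bias, and 0.5 threshold are invented for illustration.

```python
import math

# Hypothetical parameters produced by an earlier training phase (illustrative only).
WEIGHTS = {"temp_c": 0.8, "vibration_g": 3.0}
BIAS = -60.0


def predict_proba(sample):
    """Logistic model: probability of a fault for one input sample."""
    z = BIAS + sum(WEIGHTS[k] * sample[k] for k in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def infer(sample, threshold=0.5):
    """Turn the model's probability into a decision the device can act on."""
    p = predict_proba(sample)
    return {"fault": p >= threshold, "confidence": round(p, 3)}


normal = {"temp_c": 40.0, "vibration_g": 2.0}
overheating = {"temp_c": 75.0, "vibration_g": 4.0}
print(infer(normal))
print(infer(overheating))
```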

AI Applications: Happening Everywhere We Live

Internet (hold on, aren’t we talking about the edge? We are, but AI is also everywhere in internet software. Check here to learn about the gap between AI software and real-world production.)

| Internet | Lifestyle | Transport | Robotics | Healthcare | Retail | Manufacturing |
| --- | --- | --- | --- | --- | --- | --- |
| Search engines | Voice assistant | Autonomous vehicles | Delivery robots | Tumor diagnosis | Chatbots | Industrial machine communication |
| Recommendation systems | Facial recognition | Navigation (GPS) | Autonomous vacuum cleaner | Fall detection for elderly care | Drive-thru experience | Predictive maintenance |
| Language translations | Fingerprint reader | Traffic management | Inventory management | X-ray machine | Shopping cart with vision AI | Quality control |
| E-commerce | Security system | Pedestrian and cyclist detection | Robot arm | Remote health assistants | Amazon Go store | Anomaly detection |

Machine Learning

A subset of AI is Machine Learning (ML), which trains a machine to solve a specific AI problem. It is the study of algorithms that allow computers to improve automatically through experience. It uses large datasets, well-researched statistical methods, and algorithms to train models to predict outcomes from any new or incoming information.

Deep Learning

Deep learning is a further subset of ML that uses artificial neural networks to solve AI problems. It applies many successive transformations to the data, chained together layer by layer, and employs an ANN (explained in more detail below) with many hidden layers, large amounts of data, and substantial computing resources. Like ML, it requires large datasets to be effective. With more data, we can train deep learning models to be more powerful and to rely less on assumptions.

Deep learning is commonly used when dealing with more complex inputs or non-tabular data such as images, text, videos, and speech.

Differences Between Machine Learning and Deep Learning

Deep learning can automatically learn features from raw data, whereas traditional ML usually requires manual feature extraction, which adds processing time. The trade-off is that deep learning typically requires much more data than ML to perform well.

In terms of datasets, deep learning is better suited than traditional ML to non-tabular data, as previously mentioned, because it does not require that extra step of manual feature engineering.

What is ANN?

An Artificial Neural Network (ANN) is a computational model loosely inspired by the way neurons in our brains work, allowing computers to learn from data and make decisions. It uses algorithms that adjust the network’s internal parameters as it learns from new inputs.

An ANN has three or more interconnected layers:

Input Layer: This is where information from the outside world enters the ANN model. It passes this information on to the next layer, the hidden layer.

Hidden Layer: This is where computations are performed on the input data. It then passes the computed information on to the last layer, the output layer. A model can have multiple hidden layers.

Output Layer: This is where the model’s conclusions are derived from all the computations performed in the hidden layers.
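The flow of data through these layers can be sketched in a few lines. This example assumes a tiny network with 2 inputs, one hidden layer of 3 ReLU neurons, and 1 sigmoid output; the weights are hand-picked, hypothetical values rather than trained ones, so only the structure of the computation is meaningful.

```python
import math


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical parameters for a 2-3-1 network (one row of weights per neuron).
HIDDEN_W = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
HIDDEN_B = [0.0, 0.1, 0.2]
OUTPUT_W = [[1.0, -1.5, 0.7]]
OUTPUT_B = [0.05]


def forward(x):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]


print(forward([1.0, 2.0]))
```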

Edge Computing

What is Edge Computing?

Edge computing refers to processing, handling, and storing data locally on an edge device, where the data is produced. Instead of sending data back to the cloud, edge devices such as a user’s computer, an IoT device, or an edge server can gather and process it locally. This real-time local processing allows them to respond faster and more effectively while reducing latency and bandwidth use.

Reasons to Use Edge Computing

- Would faster results affect my ability to act on INSIGHTS from my AI model?

- Would higher-fidelity DATA affect the results of my model?

- Is it important that the data I collect stays in the LOCATION it is collected?

Real-life Applications

Edge Computing vs Cloud Computing

In essence, both edge and cloud computing are meant to do the same things – process data, run algorithms, etc. However, the fundamental difference between edge and cloud computing is where the computing actually takes place.

In edge computing, information processing occurs on distributed IoT devices that are in the field and in active deployment (or on the edge). Some examples of edge devices include smartphones, as well as various SBCs and microcontrollers.

In cloud computing, however, that same information processing occurs at a centralised location, such as in a data centre.

Traditionally, cloud computing has dominated the IoT scene. Because it is powered by data centres that naturally have greater computing capabilities, IoT devices on the edge could simply transmit local data and maintain their key characteristics of low power consumption and affordability. Although cloud computing is still a very important and powerful tool for IoT, edge computing has been getting more attention lately for two important reasons.

Reason 1: The hardware on edge devices has become more capable while remaining cost competitive.

Reason 2: Software is becoming increasingly optimized for edge devices.

This trend has progressed so far that it is now possible to run machine learning, which has long been ‘reserved’ for cloud computing due to its high computational requirements, on edge computing devices! And thus, Edge AI was born.

Edge AI: Bringing Cloud to the Edge to Evolve IoT

With Edge AI, IoT devices are becoming smarter. What does that mean? Well, with machine learning, edge devices are now able to make decisions. They can make predictions, process complex data, and administer solutions.

For example, edge IoT devices can process operating conditions to predict if a given piece of machinery will fail. This allows companies to perform predictive maintenance and avoid the larger damages and costs that would have been incurred in the event of a complete failure.
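As a minimal sketch of the kind of check such a device might run locally, the class below flags a vibration reading that deviates strongly from recent history. It uses a rolling z-score, a simple statistical stand-in for a trained ML model; the class name `VibrationMonitor`, the 20-sample window, and the 3.0 threshold are all illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Hypothetical on-device check: alert when a reading is far outside recent norms."""

    def __init__(self, window=20, z_limit=3.0):
        self.history = deque(maxlen=window)  # only the most recent readings are kept
        self.z_limit = z_limit

    def update(self, reading):
        """Return True if this reading looks anomalous, then add it to the history."""
        alert = False
        if len(self.history) == self.history.maxlen:  # wait for a full window
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.z_limit:
                alert = True
        self.history.append(reading)
        return alert


monitor = VibrationMonitor()
alerts = [monitor.update(r) for r in [1.0, 1.1] * 10]  # normal operation
spike_alert = monitor.update(5.0)                       # sudden abnormal vibration
print("alerts during normal run:", any(alerts), "| spike alert:", spike_alert)
```

On a real device, an alert like this could trigger an immediate local action, such as shutting the machine down, without waiting on the network.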

Similarly, a security camera equipped with Edge AI no longer has to just capture video. It can identify humans and count foot traffic, or, with facial recognition, even identify exactly who has passed through an area and when. Very “big brother”-esque, I know; but still amazing nonetheless!

As machine learning develops, many exciting possibilities will now extend to edge devices as well. But the crux of this paradigm shift is clear – more than ever, cloud capabilities are being moved to the edge; and for good reason.

Benefits of Edge AI

1. Reduced Latency

The most direct advantage of processing information on the edge is that there is no longer a need to transmit data to and from the cloud. As a result, latencies in data processing can be greatly reduced.

In the earlier predictive maintenance example, an Edge AI-enabled device could respond almost immediately, for instance by shutting the compromised machinery down. If we used cloud computing to run the machine learning algorithm instead, we could lose valuable time, potentially a second or more depending on the network, to transmitting data to and from the cloud. While that may not sound significant, every margin of safety is often worth pursuing when it comes to operation-critical equipment!

2. Reduced Bandwidth Requirement and Cost

With less data being transmitted to and from edge IoT devices, network bandwidth requirements, and therefore costs, will be lower.

Take an image classification task, for example. With a reliance on cloud computing, the entire image must be sent for online processing. If edge computing were used instead, there would be no need to send that data; we could simply send the processed result, which is often several orders of magnitude smaller than the raw image. Multiply this effect by the number of IoT devices in a network, which can number in the thousands or more, and these savings are nothing to scoff at!
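The savings can be estimated with simple arithmetic. This sketch assumes a hypothetical fleet of 1,000 cameras, each producing one uncompressed 640x480 RGB frame per second, compared with sending a small JSON classification result. Real cameras usually compress their video, so the actual ratio will be smaller, but the gap remains large.

```python
import json


def raw_frame_bytes(width, height, channels=3):
    """Size of one uncompressed frame with 8 bits per channel."""
    return width * height * channels


def result_bytes(result):
    """Size of a classification result serialized as UTF-8 JSON."""
    return len(json.dumps(result).encode("utf-8"))


# Hypothetical deployment parameters.
frame = raw_frame_bytes(640, 480)
result = result_bytes({"label": "person", "confidence": 0.92})
devices = 1000
seconds_per_day = 24 * 60 * 60  # one inference per second per device

cloud_bytes_per_day = frame * devices * seconds_per_day   # send every raw frame
edge_bytes_per_day = result * devices * seconds_per_day   # send only the result

print(f"raw frame: {frame} B, result: {result} B, ratio ~{frame // result}x")
print(f"cloud upload per day: {cloud_bytes_per_day / 1e12:.1f} TB")
print(f"edge upload per day:  {edge_bytes_per_day / 1e9:.1f} GB")
```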

3. Increased Data Security

A reduction in the transmission of data to external locations also means fewer open connections and fewer opportunities for cyber attacks. This reduces the exposure of edge devices to potential interception or data breaches. Furthermore, since less data is stored in a centralized cloud, the consequences of a single breach are significantly mitigated.

4. Improved Reliability

With the distributed nature of Edge AI and edge computing, operational risks can also be distributed across the entire network. In essence, even if the centralized cloud computer or cluster fails, individual edge devices are able to maintain their functions since the computing processes are now independent of the cloud! This is especially important for critical IoT applications, such as in healthcare.
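One way to keep an edge device useful when the cloud goes down is a store-and-forward pattern: inference results are always produced locally, and uploads are queued until the connection returns. The sketch below is a minimal, hypothetical illustration; `FakeUplink` simulates a cloud connection and is not a real SDK, and the bounded `deque` drops the oldest results if an outage outlasts its capacity.

```python
from collections import deque


class FakeUplink:
    """Hypothetical stand-in for a cloud connection (not a real SDK)."""

    def __init__(self):
        self.online = False
        self.delivered = []

    def send(self, message):
        if not self.online:
            raise ConnectionError("cloud unreachable")
        self.delivered.append(message)


class StoreAndForward:
    """Queue locally computed results and forward them when the cloud is reachable."""

    def __init__(self, send, capacity=1000):
        self.send = send
        self.buffer = deque(maxlen=capacity)  # oldest results are dropped when full

    def publish(self, result):
        self.buffer.append(result)
        return self.flush()

    def flush(self):
        """Deliver buffered results in order; stop at the first failure."""
        while self.buffer:
            try:
                self.send(self.buffer[0])
            except ConnectionError:
                return False
            self.buffer.popleft()  # remove only after a successful send
        return True


uplink = FakeUplink()
forwarder = StoreAndForward(uplink.send)
for probability in (0.91, 0.88, 0.95):
    # The local result always exists; only the upload has to wait.
    forwarder.publish({"fault_probability": probability})
print("buffered while offline:", len(forwarder.buffer))

uplink.online = True
forwarder.flush()
print("delivered after reconnect:", len(uplink.delivered))
```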

Why is Edge AI Important?

While the tangible benefits of Edge AI are clear, its intrinsic impacts may be more elusive.

Edge AI Alters the Way we Live

For one, Edge AI represents the first wave of truly integrating artificial intelligence into daily living. While artificial intelligence and machine learning research have been around for decades, we are now just starting to see their practical uses in consumer products. Self-driving cars, for example, are a product of advancements in Edge AI. Slowly but surely, Edge AI is changing the way that we interact with our environment in many ways.

Edge AI Democratises Artificial Intelligence

The use and development of artificial intelligence is no longer exclusive to research institutions and wealthy corporations. Because Edge AI is designed to run on relatively affordable edge devices, it is now more accessible than ever for any individual to learn how artificial intelligence works and to develop it for their own uses.

More importantly, Edge AI makes it possible for educators around the world to bring artificial intelligence and machine learning into the classroom in a tangible way, for example by giving students hands-on experience with edge devices.

Edge AI Challenges the Way We Think

It’s commonly said that the potential of AI and machine learning is limited only by the creativity and imagination of humankind – and this could not be more true. As machine learning becomes more advanced, many tasks that only humans could once do will become automated, and our notions of productivity and purpose will be heavily challenged.

While there’s no telling for certain what the future will hold, I’m generally optimistic about what Edge AI brings to the table as I believe that it will push us towards more creative and fulfilling jobs. What do you think?

Edge AI with edge GPU: NVIDIA Jetson embedded system

About NVIDIA Jetson Modules

NVIDIA® Jetson™ brings accelerated AI performance to the Edge in a power-efficient and compact form factor. The Jetson family of modules all use the same NVIDIA CUDA-X™ software, and support cloud-native technologies like containerization and orchestration to build, deploy, and manage AI at the edge. Together with NVIDIA JetPack™ SDK, Jetson modules will help you develop and deploy innovative products across all industries.

Buy the latest NVIDIA Jetson production module-powered edge devices, carrier boards, and add-ons at Seeed

reComputer built with Jetson Nano/NX: real-world AI at the edge, starting from $199

Built with Jetson Nano 4GB/ Xavier NX 8GB/16GB

- Edge AI box that fits anywhere

- Embedded Jetson Nano/NX module

- Pre-installed JetPack for easy deployment

- Nearly the same form factor as the Jetson Developer Kits, with a rich set of I/Os

- Stackable and expandable

Edge AI with TPU: Google Coral series

Google’s Coral series is equipped with Google’s Tensor Processing Units (TPUs), which are purpose-built ASICs designed specifically for neural network machine learning with TensorFlow Lite. The Coral Dev Board is an all-in-one, TPU-equipped platform that allows you to prototype TFLite applications easily, and it can even be scaled to production with its flexible SoM design!

Exclusive for educators:

Join the Coral Global Education Program, in partnership with Seeed: qualified students and educators receive an exclusive 35% discount off the Coral Dev Board 1GB list price of $129.99. Please fill out the questionnaire to apply to the program and get the educational discount, and feel free to email coraledu@seeed.cc to find out more about the program.

Product Features:

- NXP i.MX 8M SoC (Quad Cortex-A53, Cortex-M4F) CPU

- Integrated GC7000 Lite Graphics

- Google Edge TPU coprocessor

- 1 GB LPDDR4, 8GB eMMC

- Suite of Interfaces: HDMI, MicroSD, WiFi, Gigabit Ethernet and more!

To learn more about the Coral Dev Board, please visit its product page on the Seeed Online Store!

Alternatively, you might be interested in the Coral USB Accelerator, which allows you to use Google’s Edge TPU with your existing development boards via USB! For embedded applications that require more power, the Coral M.2 Accelerator with Dual Edge TPU can be used with any system via the speedy M.2 interface, and sports two Edge TPUs for some serious machine learning capabilities!

SBCs for Edge AI

Edge AI is developing rapidly, and nobody wants to miss out. Fortunately, getting started with Edge AI is easier than ever with the wide variety of edge devices available. In this section, I’d like to share a few SBC recommendations for those of you who would like to get your toes wet with Edge AI!

Intel Celeron-powered ODYSSEY X86 series

When it comes to general-purpose computing, you’ll be hard pressed to find anything better than the ODYSSEY x86 series. Running on the powerful x86 CPU architecture, this SBC is capable of meeting most edge computing requirements, or even serving as a mini PC to replace your desktop. We recommend using the ODYSSEY x86 series with Frigate, an open-source NVR (network video recorder) camera system that processes AI locally.

Product Features:

- Intel® Celeron® J4125, Quad-Core 1.5-2.5 GHz

- Dual-Band Frequency 2.4GHz/5GHz WiFi

- Intel® UHD Graphics 600

- Dual Gigabit Ethernet

- Integrated Arduino Coprocessor ATSAMD21 ARM® Cortex®-M0+

- Raspberry Pi 40-Pin Compatible

- 2 x M.2 PCIe (B Key and M Key)

- Supports Windows 10 & Linux OS

- Compatible with Grove Ecosystem

Interested? Learn more about the ODYSSEY X86J4125 on the Seeed Online Store now!

Beginner-Friendly: Raspberry Pi 4B

If you’re a beginner or if you’re looking for a beginner-friendly option for your child, you most certainly won’t go wrong with the Raspberry Pi 4B. Although the popular Raspberry Pi isn’t as powerful as the other recommendations I’ve shared so far, it is still a very capable SBC for anyone who is trying to learn Edge AI or general computing. Check out how to run a TensorFlow model on reTerminal (a Raspberry Pi CM4-powered HMI device) with the TensorFlow Lite runtime.

Edge AI solutions

Edge AI solution for Industry 4.0

As covered above, Edge AI lets IoT devices make decisions locally. In Industry 4.0, for example, edge IoT devices can analyze operating conditions to predict whether a piece of machinery will fail, allowing companies to perform predictive maintenance and avoid the larger damages and costs of a complete failure.

Download our Edge AI use case and example book here to learn how NVIDIA Jetson systems help across industries.

Helmet Detection

Edge devices embedded with AI can monitor compliance with PPE requirements, including hard hats, in the work environment in real time and signal any PPE violations to safety and maintenance teams. Computer vision combined with machine learning can automate the process of monitoring PPE compliance.

Check out our Helmet Detection Brochure to learn how to build such applications using Edge Impulse and YOLOv5.
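Once a detector (for example, a YOLOv5 model) has produced bounding boxes for people and hard hats, the compliance check itself can be simple logic. The sketch below is one possible rule, assumed for illustration: a person counts as compliant if some helmet box is centred in the top 30% of their box. The box format and function names are hypothetical, not part of Edge Impulse or YOLOv5.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, y grows downward


def head_region(person: Box, fraction: float = 0.3) -> Box:
    """Top `fraction` of a person box, where a worn hard hat should appear."""
    x1, y1, x2, y2 = person
    return (x1, y1, x2, y1 + (y2 - y1) * fraction)


def center(box: Box) -> Tuple[float, float]:
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def contains(region: Box, point: Tuple[float, float]) -> bool:
    x1, y1, x2, y2 = region
    px, py = point
    return x1 <= px <= x2 and y1 <= py <= y2


def ppe_violations(persons: List[Box], helmets: List[Box]) -> List[int]:
    """Indices of persons with no helmet centred in their head region."""
    return [
        i
        for i, person in enumerate(persons)
        if not any(contains(head_region(person), center(h)) for h in helmets)
    ]


# Hypothetical detections from one camera frame.
persons = [(0, 0, 100, 300), (200, 0, 300, 300)]
helmets = [(30, 0, 70, 40)]
print("violations:", ppe_violations(persons, helmets))
```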

Edge AI solution for Traffic Management

Build intelligent transportation systems for smart cities and public safety

AI-integrated cameras can ease the bottlenecks and chokepoints that often hinder traffic in our cities. Traffic congestion is often caused by poor handling of factors such as the distance between moving vehicles, traffic lights, road signs, and pedestrians at crossroads. Intelligent transportation systems (ITS) are a major application field for computer vision, covering vehicle classification, traffic violation detection, traffic flow analysis, parking lot detection, license plate recognition, pedestrian detection, traffic sign detection, collision avoidance, road condition monitoring, and in-vehicle driver attention detection.

Check out our Edge AI solution brochure on intelligent traffic management, with examples of deploying NVIDIA Jetson-powered applications in smart cities using fast training and deployment platforms and pretrained models.

- Pedestrian and bicyclist detection: car and people detection using alwaysAI

- License plate detection

TinyML: Is Edge AI Possible on Microcontrollers?

Throughout this article, we’ve talked about how Edge AI is fitting artificial intelligence applications into smaller and less powerful computers. But really, how small can we go? How about microcontrollers that have only kilobytes of RAM?

As it turns out, the answer is a resounding YES – thanks to a new concept known as TinyML!

TinyML, short for Tiny Machine Learning, is a subset of machine learning that employs optimisation techniques to reduce the memory, computation, and power required by machine learning models. Specifically, it aims to bring ML applications to compact, power-efficient, and most importantly affordable microcontroller units.
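Quantization is one widely used TinyML optimisation: storing weights as 8-bit integers instead of 32-bit floats cuts their memory footprint by 4x. The sketch below shows affine quantization, where a real value is approximated as `(q - zero_point) * scale`, the same form used by TensorFlow Lite's 8-bit scheme. It is a simplified, per-tensor illustration on hypothetical weights, not the TFLite converter itself.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Pick scale and zero point so [min, max] (including 0.0) maps onto [qmin, qmax]."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """float -> int8: q = clamp(round(v / scale) + zero_point)."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """int8 -> float: v is approximately (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-0.51, -0.2, 0.0, 0.33, 0.77]  # hypothetical float32 layer weights
scale, zp = quantization_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.5f} zero_point={zp} q={q} max_error={max_error:.5f}")
```

Including 0.0 in the range guarantees that zero is represented exactly, which matters for padding and ReLU outputs.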

Further fuelling the TinyML movement, companies like Edge Impulse & OpenMV are helping to make Edge AI more accessible through user-friendly platforms. Now, anyone can deploy machine learning applications almost literally anywhere, even without prior expertise!

Best Microcontrollers for Edge AI

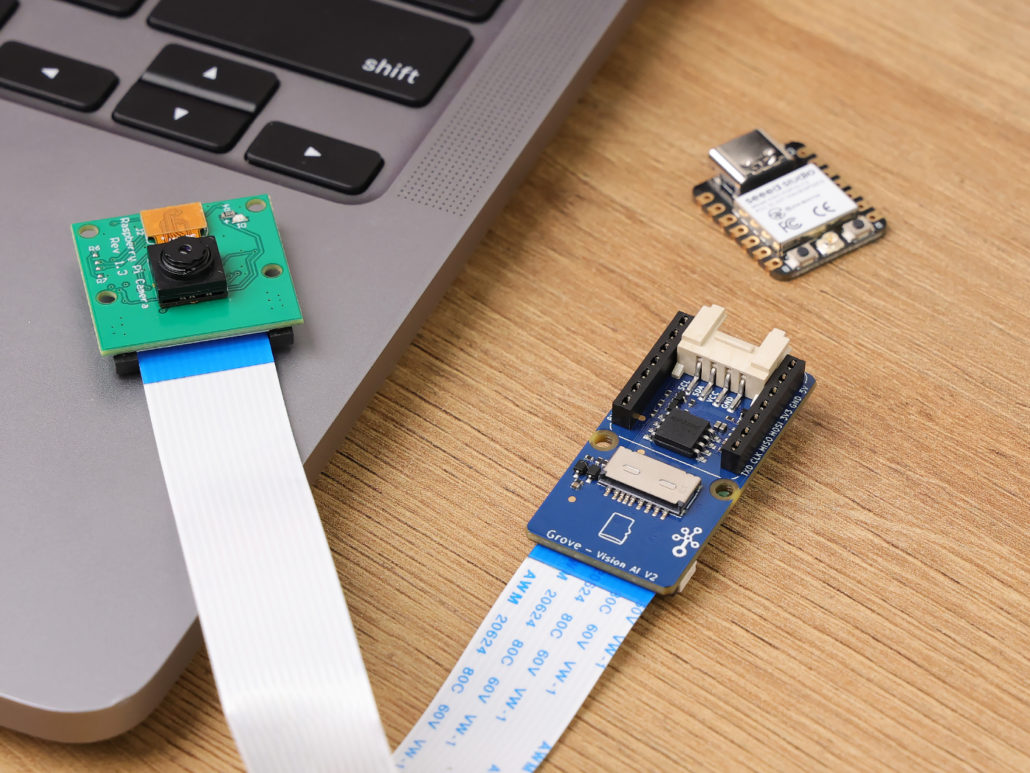

Grove – Vision AI Module V2

It’s an MCU-based vision AI module powered by the Himax WiseEye2 HX6538 processor, featuring Arm Cortex-M55 and Ethos-U55. It integrates Arm Helium technology, which is optimized for vector data processing and enables:

- Award-winning low power consumption

- Significant uplift in DSP and ML capabilities

- Designed for battery-powered endpoint AI applications

With support for the TensorFlow and PyTorch frameworks, it allows users to deploy both off-the-shelf and custom AI models from Seeed Studio SenseCraft AI. Additionally, the module features a range of interfaces, including IIC, UART, SPI, and Type-C, allowing easy integration with popular products like Seeed Studio XIAO, Grove, Raspberry Pi, BeagleBoard, and ESP-based products for further development.

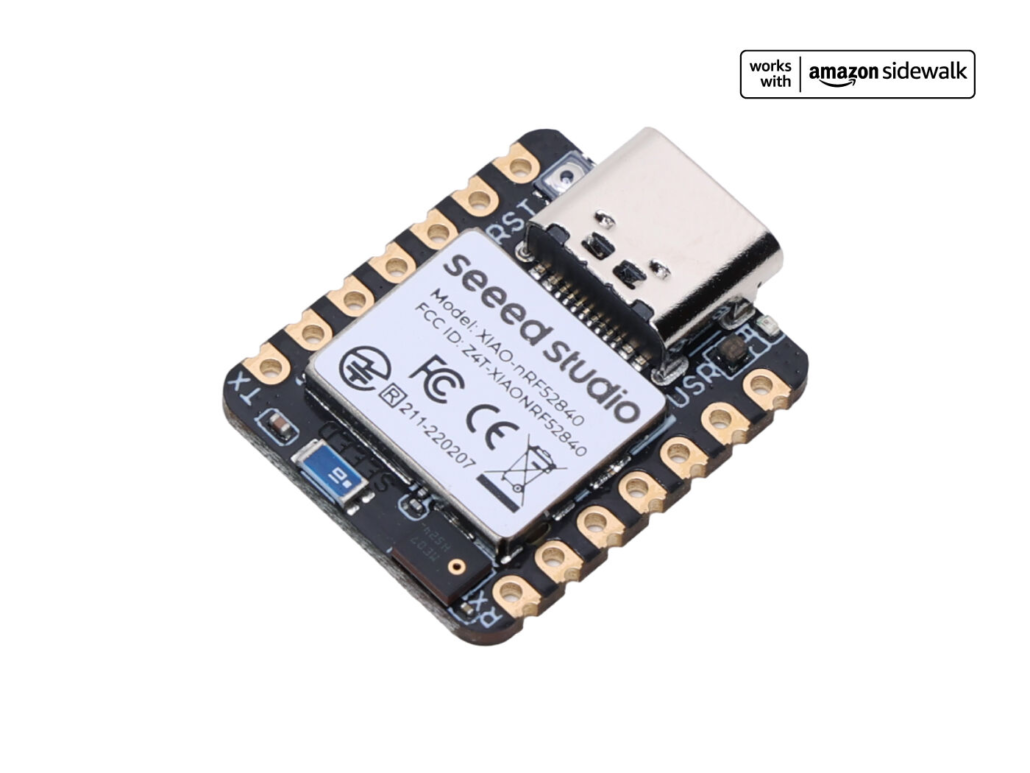

Seeed Studio XIAO ESP32S3 Sense & Seeed Studio XIAO nRF52840 Sense

Seeed Studio XIAO Series boards are diminutive, literally thumb-sized development boards that share a similar hardware structure. The name “XIAO” represents half of the series’ character, “Tiny”; the other half is “Puissant”.

Seeed Studio XIAO ESP32S3 Sense integrates an OV2640 camera sensor, digital microphone, and SD card support. Combining embedded ML computing power with photography capability, this development board is a great tool for getting started with intelligent voice and vision AI.

Seeed Studio XIAO nRF52840 Sense features Bluetooth 5.0 wireless capability and low power consumption. With its onboard IMU and PDM microphone, it is a great tool for embedded machine learning projects.

Click here to learn more about the XIAO family!

Wio Terminal

The Wio Terminal is a complete Arduino development platform based on the ATSAMD51, with wireless connectivity powered by Realtek RTL8720DN. As an all-in-one microcontroller, it has an onboard 2.4” LCD Display, IMU, microphone, buzzer, microSD card slot, light sensor & infrared emitter. The Wio Terminal is officially supported by Edge Impulse, which means that you can easily use it to collect data, train your machine learning model, and finally deploy an optimised ML application!

Edge AI Tools, Projects & Getting Started

Edge AI is still relatively new, with different companies still in the process of developing their own smart solutions. Despite this, there are already many resources to help you get started with building your very own Edge AI project!

Computer Vision and embedded machine learning

- Edge AI No-Code Vision Tool: Seeed’s latest open-source project for deploying AI applications with just 3 nodes.

- NVIDIA DeepStream SDK delivers a complete streaming analytics toolkit for AI-based multi-sensor processing and video and image understanding on Jetson.

- NVIDIA TAO Toolkit, built on TensorFlow and PyTorch, is a low-code version of the NVIDIA TAO framework that accelerates model training.

- alwaysAI: build, train, and deploy computer vision applications directly at the edge on reComputer. Get free access to 100+ pre-trained computer vision models, and train custom AI models in the cloud in a few clicks with an enterprise subscription. Check out our wiki guide to get started with alwaysAI.

- Edge Impulse: the easiest embedded machine learning pipeline for deploying audio, classification, and object detection applications at the edge with zero dependencies on the cloud.

- Roboflow provides tools to convert raw images into a custom-trained computer vision model of object detection and classification and deploy the model for use in applications. See the full documentation for deploying to NVIDIA Jetson with Roboflow.

- YOLOv5 by Ultralytics: use transfer learning to achieve few-shot object detection with YOLOv5, which needs only a small number of training samples. See our step-by-step wiki tutorials.

- Deci: optimize your models on NVIDIA Jetson Nano. Check out Deci’s webinar, “Automatically Benchmark and Optimize Runtime Performance on NVIDIA Jetson Nano and Xavier NX Devices”.

Remote Fleet Management

Enable secure OTA and remote device management with Allxon. Unlock 90 days free trial with code H4U-NMW-CPK.

Robot and ROS Development

- NVIDIA Isaac ROS GEMs are hardware-accelerated packages that make it easier for ROS developers to build high-performance solutions on NVIDIA hardware. Learn more about NVIDIA Developer Tools

- Cogniteam Nimbus is a cloud-based solution that allows developers to manage autonomous robots more effectively. The Nimbus platform supports NVIDIA® Jetson™ and the ISAAC SDK and GEMs out of the box. Check out our webinar, “Connect your ROS Project to the Cloud with Nimbus”.

Jetson AI courses and certifications

NVIDIA’s Deep Learning Institute (DLI) provides developers, educators, students, and lifelong learners with practical, hands-on training and certification in edge computing. It’s a great way to gain the critical AI skills you need to thrive and advance in your career. After completing these free courses, you can even earn a certificate to demonstrate your understanding of Jetson and AI.

Use transfer learning along with Ultralytics YOLOv5 and Roboflow to train a dataset with very few samples.

Check out more details and try it yourself by following our wiki!

In the wiki, we show the difference in training time between a small dataset we collected ourselves and a large publicly available dataset. We also use the trained model to run faster inference, with better accuracy, on an edge device such as the NVIDIA Jetson platform.

Smart Weather Station with TFLite on the Wio Terminal

Weather stations are a popular project amongst the maker community. Why not take it one step further and add Edge AI capabilities to enable local weather predictions? This project by Dimitry Maslov does exactly that – visit the full article here for all the details!

Materials Needed:

This tutorial is also part of our Learn TinyML using Wio Terminal and Arduino IDE series. Be sure to check each of them out!

- Learn TinyML using Wio Terminal and Arduino IDE #1 Intro

- Learn TinyML using Wio Terminal and Arduino IDE #2 Audio Scene Recognition and Mobile Notifications

- Learn TinyML using Wio Terminal and Arduino IDE #3 People Counting and Azure IoT Central Integration

- Learn TinyML using Wio Terminal and Arduino IDE #4 Weather prediction with Tensorflow Lite for Microcontrollers a.k.a. I just like data

Machine Learning Powered Inventory Tracking with Raspberry Pi

This project uses machine learning-powered object detection to count objects in a photo! The inventory numbers are then uploaded to Azure IoT Central so that the inventory can be monitored anytime, anywhere.

Materials Needed:

- Raspberry Pi 4

- Raspberry Pi Camera Module V2, OR

- Any USB Webcam

Keen to try this for yourself? Visit my complete step-by-step tutorial here!
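Once an object detection model has produced labelled detections for a photo, turning them into inventory numbers is a matter of filtering and counting. This minimal sketch assumes a hypothetical detection format (a list of dicts with `label` and `confidence`) and a 0.5 confidence threshold; uploading `payload` to Azure IoT Central is left out, since that depends on the client library you use.

```python
from collections import Counter


def count_inventory(detections, min_confidence=0.5):
    """Count detected objects per label, ignoring low-confidence detections."""
    return Counter(d["label"] for d in detections if d["confidence"] >= min_confidence)


# Hypothetical detector output for one photo of a shelf.
detections = [
    {"label": "bottle", "confidence": 0.91},
    {"label": "bottle", "confidence": 0.84},
    {"label": "can", "confidence": 0.77},
    {"label": "can", "confidence": 0.32},  # too uncertain, not counted
    {"label": "box", "confidence": 0.66},
]

inventory = count_inventory(detections)
payload = dict(inventory)  # plain dict, ready to serialize and upload
print(payload)
```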

Build Handwriting Recognition with Wio Terminal & Edge Impulse

Do you think it’s possible to perform handwriting recognition with just a single distance sensor? The answer to that is, well, sort of! This project uses machine learning on time series data from just one ToF sensor to recognise handwriting gesture patterns! While it’s very much a proof of concept project and far from actual implementation, I hope this inspires you to think of crazy ideas for your own project!

Materials Needed:

As always, you can visit the full step-by-step tutorial here!
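Time-series projects like this one typically slice the sensor stream into overlapping windows and describe each window with features before classification. The sketch below shows that windowing step only, with hypothetical ToF distance readings, a window of 4 samples, and a step of 2; the original project builds its pipeline with Edge Impulse, whose processing may differ.

```python
from statistics import mean, pstdev


def sliding_windows(samples, size, step):
    """Split a 1-D series into overlapping windows of `size` samples."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def window_features(window):
    """Summary statistics describing one window of distance readings."""
    deltas = [b - a for a, b in zip(window, window[1:])]
    return {
        "mean": mean(window),
        "std": pstdev(window),
        "min": min(window),
        "max": max(window),
        "mean_delta": mean(deltas),  # positive means the target moved away on average
    }


# Hypothetical ToF distance readings in millimetres.
readings = [120, 118, 115, 110, 104, 99, 101, 108, 116, 125]
windows = sliding_windows(readings, size=4, step=2)
features = [window_features(w) for w in windows]
for f in features:
    print(f)
```

Each feature dictionary would then become one input row for a classifier trained on labelled gestures.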

Summary & More Resources

Thanks for reading this article! I hope I’ve managed to shed some light on what Edge AI is and what it means for the future of IoT and even humankind. With SBCs and microcontrollers now joining the fray, there’s no better time to explore machine learning applications and build some Edge AI projects for yourself!

To wrap up, here are some more resources which you may find useful:

- What is Industrial IoT? [Case Studies]

- An Introduction to TinyML – towardsdatascience.com

- What is Edge AI and What is Edge AI Used For?