Learn TinyML using Wio Terminal and Arduino IDE #4 Weather prediction with Tensorflow Lite for Microcontrollers a.k.a. I just like data

Updated on Feb 28th, 2024

In today’s article we’re going to use Wio Terminal and Tensorflow Lite for Microcontrollers to create an intelligent weather station, able to predict the weather type and precipitation for the next 24 hours based on local data from a BME280 environmental sensor.

For more details and visuals, watch the corresponding video!

I will show you how to apply model optimization techniques that make it possible not only to run a medium-sized convolutional neural network, but also to keep this sleek GUI and a WiFi connection running at the same time for days and months on end!

This is the end result: the current temperature, humidity and atmospheric pressure values are displayed on the screen, together with the city name, predicted weather type and predicted precipitation chance. At the bottom of the screen there is a log output field, which you can easily re-purpose for displaying extreme weather information, AI jokes or tweets from me. While it looks good and is useful as it is, there are a lot of things you can add yourself, for example the above-mentioned news/tweets output on the screen, or deep sleep mode to conserve energy and make it battery powered.

This project expands on an article about weather prediction from my colleague, Jonathan Tan. Most notably, we will improve a few things over the basic implementation in that article:

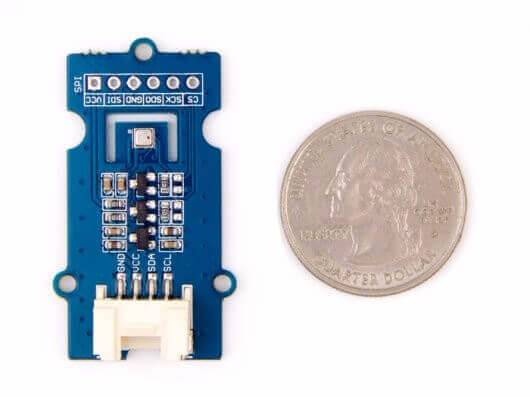

- We’ll use a BME280 sensor, which will allow us to get atmospheric pressure information together with temperature and humidity.

- The neural network model in the original project is trained to predict the weather for the next half an hour, based on data points from the previous 3 hours, with one measurement every half an hour. So it is really more of a weather descriptor than a weather predictor. We will use more advanced data processing and model architecture to predict weather type and precipitation chance for the next 24 hours, based on measurements from the previous 24 hours.

- We will also apply model optimizations, which will give us a smaller model and let us fit more things into Wio Terminal memory, for example a web server and a pretty LVGL interface with dark/light material themes.

Data processing and model training

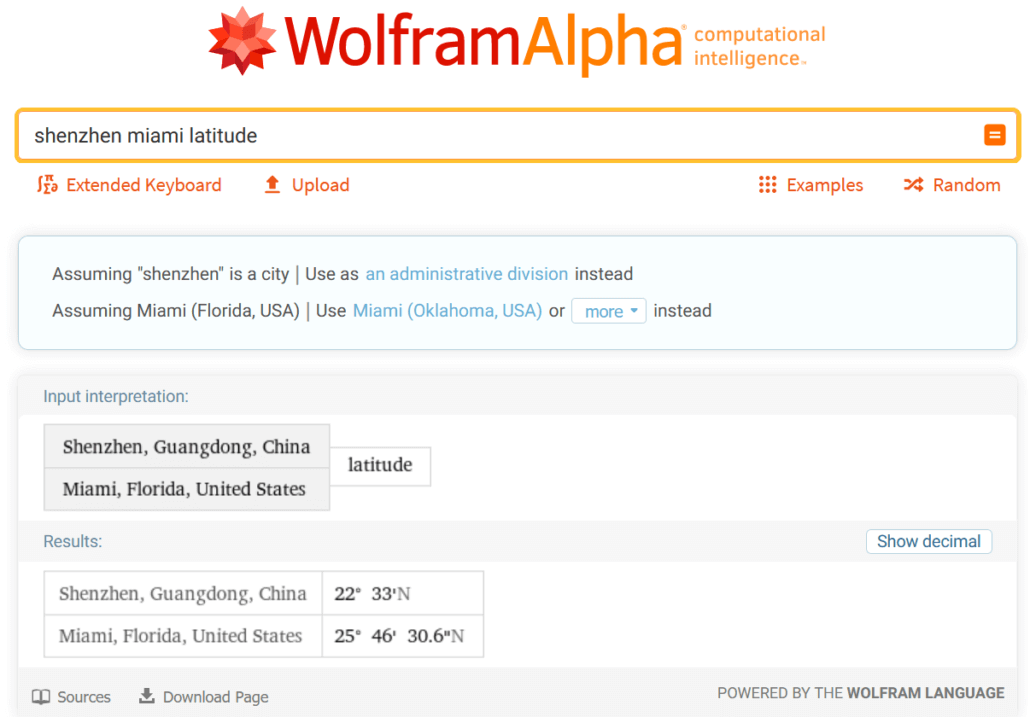

So, where shall we begin? It all starts with data, of course. For this tutorial we will use a readily available weather dataset from Kaggle, Historical Hourly Weather Data 2012-2017. I live in Shenzhen, a city in Southern China, and that city is absent from the dataset, so I picked a city that is located at a similar latitude and also has a subtropical climate: Miami.

You’ll need to pick a city that at least resembles the climate where you live – it goes without saying that the model trained on data from Miami and then deployed in Chicago in winter is going to be Confused Beyond All Reason.

For the data processing and model training step, let’s open the Colab Notebook I prepared and shared in the Github repository for this project. Jupyter Notebooks are a great way to explore and present data, since they allow having both text and executable code in the same environment. The general workflow is explained in the video and in the Colab Notebook text sections.

Prepare the environment

Once you get the trained model it is time to deploy it to Wio Terminal. As I mentioned before, we are going to use Tensorflow Lite for Microcontrollers, which I will call Tensorflow Micro, since Tensorflow Lite for Microcontrollers is a mouthful.

Tensorflow Micro is a relatively young framework, so we’ll have to jump through quite a few hoops to deploy our model to Wio Terminal. It has gotten much better since it was unveiled, however. Just look at this video from a few years ago of Pete Warden, a Tensorflow maintainer, doing a first live demo on stage and being noticeably nervous.

If you are making this project on Windows, the first thing you’ll need to do is download a nightly version of the Arduino IDE, since the current stable version 1.8.13 will not compile sketches with a lot of library dependencies (the issue is that the linker command during compilation exceeds the maximum command length on Windows).

Second, you need to replace the content of cmsis_gcc.h file with a newer version in order to avoid `__SXTB16_RORn` not being defined. You will find the newer version of the file in Github repository for this project. Then just copy it to C:\Users\[your_user_name]\AppData\Local\Arduino15\packages\Seeeduino\tools\CMSIS\5.4.0\CMSIS\Core\Include on Windows and /home/[your_user_name]/.arduino15/packages/Seeeduino/tools/CMSIS/5.4.0/CMSIS/Core/Include on Linux.

Finally, since we’re using a convolutional neural network built with the Keras API, it contains an operation not supported by the current stable version of Tensorflow Micro. Browsing Tensorflow issues on Github, I found that there is a pull request adding this op (EXPAND_DIMS) to the list of available ops, but it had not been merged into master at the time of making this video. You can git clone the Tensorflow repository, switch to the PR branch and compile the Arduino library by executing ./tensorflow/lite/micro/tools/ci_build/test_arduino.sh on a Linux machine. The resulting library can be found in tensorflow/lite/micro/tools/make/gen/arduino_x86_64/prj/tensorflow_lite.zip. Alternatively, you can download the already compiled library from this project’s Github repository and place it into your Arduino sketches libraries folder. Just make sure you only have one Tensorflow Lite library installed at a time!

Test with dummy data

Once this is all done, create an empty sketch and save it. Then copy the model you trained to the sketch folder and re-open the sketch. Rename the model array and model length variables to something shorter (the test code uses Conv1D_tflite for the array). Then use the code from wio_terminal_tfmicro_weather_prediction_static.ino for testing.
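As a rough illustration of the renaming step, the exported model file might end up looking like the sketch below. The file name model_Conv1D.h and the array name Conv1D_tflite come from the test sketch used in this article; the length variable name Conv1D_tflite_len, the include guard, the alignas and const qualifiers, and the eight placeholder bytes are assumptions for illustration only. A real file holds the full flatbuffer exported from the notebook.

```cpp
// model_Conv1D.h: sketch of a renamed model header (placeholder bytes only).
#ifndef MODEL_CONV1D_H_
#define MODEL_CONV1D_H_

// Assumption: const keeps the array out of RAM where the toolchain allows it,
// and alignas guards against unaligned reads of the flatbuffer.
alignas(16) const unsigned char Conv1D_tflite[] = {
    // Placeholder root offset, followed by the "TFL3" file identifier that
    // TensorFlow Lite flatbuffers carry at bytes 4 to 7.
    0x1c, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33
    // ... the remaining bytes of the real model would follow here ...
};

// Hypothetical name for the length variable, shortened to match the array.
const unsigned int Conv1D_tflite_len = sizeof(Conv1D_tflite);

#endif  // MODEL_CONV1D_H_
```

Whatever names you choose, they have to match the ones passed to tflite::GetModel() in the sketch.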

Let’s go over the main steps we have in the C++ code.

We include the headers for the Tensorflow library and the file with the model flatbuffer:

#include <TensorFlowLite.h>

//#include "tensorflow/lite/micro/micro_mutable_op_resolver.h"

#include "tensorflow/lite/micro/all_ops_resolver.h"

#include "tensorflow/lite/micro/micro_error_reporter.h"

#include "tensorflow/lite/micro/system_setup.h"

#include "tensorflow/lite/micro/micro_interpreter.h"

#include "tensorflow/lite/schema/schema_generated.h"

#include "model_Conv1D.h"

Notice how I have micro_mutable_op_resolver.h commented out and all_ops_resolver.h enabled. The all_ops_resolver.h header compiles in all the operations currently present in Tensorflow Micro, which is convenient for testing, but once you have finished testing it is much better to switch to micro_mutable_op_resolver.h to save device memory. It does make a big difference.

Next we define the pointers for error reporter, model, input and output tensors and interpreter. Notice how our model has two outputs – one for precipitation amount and another one for weather type. We also define tensor arena, which you can think of as a scratch board, holding input, output, and intermediate arrays – size required will depend on the model you are using, and may need to be determined by experimentation.

// Globals, used for compatibility with Arduino-style sketches.

namespace {

tflite::ErrorReporter* error_reporter = nullptr;

const tflite::Model* model = nullptr;

tflite::MicroInterpreter* interpreter = nullptr;

TfLiteTensor* input = nullptr;

TfLiteTensor* output_type = nullptr;

TfLiteTensor* output_precip = nullptr;

constexpr int kTensorArenaSize = 1024*25;

uint8_t tensor_arena[kTensorArenaSize];

} // namespace

Then in the setup function there is more boilerplate: instantiating the error reporter, op resolver and interpreter, mapping the model, allocating tensors and finally checking the tensor shapes after allocation. This is where the code might report an error at runtime if some of the model’s operations are not supported by the current version of the Tensorflow Micro library. If you have unsupported operations, you can either change the model architecture or add support for the operator yourself, usually by porting it from Tensorflow Lite.

void setup() {

Serial.begin(115200);

while (!Serial) {delay(10);}

// Set up logging. Google style is to avoid globals or statics because of

// lifetime uncertainty, but since this has a trivial destructor it's okay.

// NOLINTNEXTLINE(runtime-global-variables)

static tflite::MicroErrorReporter micro_error_reporter;

error_reporter = &micro_error_reporter;

// Map the model into a usable data structure. This doesn't involve any

// copying or parsing, it's a very lightweight operation.

model = tflite::GetModel(Conv1D_tflite);

if (model->version() != TFLITE_SCHEMA_VERSION) {

TF_LITE_REPORT_ERROR(error_reporter,

"Model provided is schema version %d not equal "

"to supported version %d.",

model->version(), TFLITE_SCHEMA_VERSION);

return;

}

// This pulls in all the operation implementations we need.

// NOLINTNEXTLINE(runtime-global-variables)

//static tflite::MicroMutableOpResolver<1> resolver;

static tflite::AllOpsResolver resolver;

// Build an interpreter to run the model with.

static tflite::MicroInterpreter static_interpreter(model, resolver, tensor_arena, kTensorArenaSize, error_reporter);

interpreter = &static_interpreter;

// Allocate memory from the tensor_arena for the model's tensors.

TfLiteStatus allocate_status = interpreter->AllocateTensors();

if (allocate_status != kTfLiteOk) {

TF_LITE_REPORT_ERROR(error_reporter, "AllocateTensors() failed");

return;

}

// Obtain pointers to the model's input and output tensors.

input = interpreter->input(0);

output_type = interpreter->output(1);

output_precip = interpreter->output(0);

Serial.println(input->dims->size);

Serial.println(input->dims->data[1]);

Serial.println(input->dims->data[2]);

Serial.println(input->type);

Serial.println(output_type->dims->size);

Serial.println(output_type->dims->data[1]);

Serial.println(output_type->type);

Serial.println(output_precip->dims->size);

Serial.println(output_precip->dims->data[1]);

Serial.println(output_precip->type);

}

Finally, in the loop function we define a placeholder for quantized INT8 values and an array of float values, which you can copy and paste from the Colab notebook to compare model inference on the device against inference in Colab.

void loop() {

int8_t x_quantized[72];

float x[72] = {0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0};

We quantize the float values to INT8 in a for loop and place them in the input tensor one by one:

for (byte i = 0; i < 72; i = i + 1) {

input->data.int8[i] = x[i] / input->params.scale + input->params.zero_point;

}

Then inference is performed by the Tensorflow Micro interpreter, and if no errors are reported, the results are placed in the output tensors.

// Run inference, and report any error

TfLiteStatus invoke_status = interpreter->Invoke();

if (invoke_status != kTfLiteOk) {

TF_LITE_REPORT_ERROR(error_reporter, "Invoke failed");

return;

}

Similar to the input, the output of the model is also quantized, so we need to perform the reverse operation and convert it from INT8 to float.

// Obtain the quantized output from model's output tensor

float y_type[4];

// Dequantize the output from integer to floating-point

int8_t y_precip_q = output_precip->data.int8[0];

Serial.println(y_precip_q);

float y_precip = (y_precip_q - output_precip->params.zero_point) * output_precip->params.scale;

Serial.print("Precip: ");

Serial.print(y_precip);

Serial.print("\t");

Serial.print("Type: ");

for (byte i = 0; i < 4; i = i + 1) {

y_type[i] = (output_type->data.int8[i] - output_type->params.zero_point) * output_type->params.scale;

Serial.print(y_type[i]);

Serial.print(" ");

}

Serial.print("\n");

}

Check and compare the values for the same data point. They should be the same for the quantized Tensorflow Lite model in the Colab notebook and the Tensorflow Micro model running on your Wio Terminal.
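If the numbers differ slightly, the conversion between float and INT8 is a good place to look. The helpers below are a minimal sketch of that conversion in plain C++, not code from the project repository; the function names are hypothetical. Unlike the implicit float-to-int8 assignment in the test sketch, which truncates and does not guard against overflow, this variant rounds to nearest and saturates, which is the usual convention for affine quantization. The last helper picks the most likely weather type directly from the quantized scores, which works because dequantization with a positive scale preserves ordering.

```cpp
#include <math.h>
#include <stddef.h>
#include <stdint.h>

// Affine quantization: q = round(x / scale) + zero_point, saturated to int8.
inline int8_t quantize_int8(float x, float scale, int zero_point) {
  long q = lroundf(x / scale) + zero_point;
  if (q < -128) q = -128;
  if (q > 127) q = 127;
  return static_cast<int8_t>(q);
}

// Reverse operation: x = (q - zero_point) * scale.
inline float dequantize_int8(int8_t q, float scale, int zero_point) {
  return (static_cast<int>(q) - zero_point) * scale;
}

// Index of the largest quantized score; ties resolve to the lowest index.
inline size_t argmax_int8(const int8_t* scores, size_t n) {
  size_t best = 0;
  for (size_t i = 1; i < n; ++i) {
    if (scores[i] > scores[best]) best = i;
  }
  return best;
}
```

In the sketch, scale and zero_point would come from input->params or output_type->params, and the index returned by argmax_int8(output_type->data.int8, 4) would be mapped to whatever class order the notebook used during training.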

Explore and try full version of the sketch

Cool! So it does work. The next step is to turn it from a demo into an actually useful project. Open the sketch from the Seeed Arduino sketchbook repository and have a look at its content.

I have divided the code into the main sketch, get_historical_data and GUI parts. Since our model requires data for the past 24 hours, we would need to wait 24 hours to perform the first inference, which is a lot. To solve this problem, we get the weather for the past 24 hours from the OpenWeatherMap API, so the first inference can be performed immediately after the device boots up. After that, the values in the circular buffer are replaced with temperature, humidity and pressure readings from the BME280 sensor connected to the Wio Terminal I2C Grove socket. For the GUI I used LVGL, the Light and Versatile Graphics Library. It is also a rapidly developing project, and using it is not super easy, but the functionality is well worth it!
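The circular buffer mentioned above could look roughly like the sketch below. It is an illustration in plain C++, not the code from the repository: the Reading struct, the ReadingRing class and the feature order are assumptions, and the time-major layout of 24 hours times 3 features is inferred from the 72-element input array in the test sketch. Make sure the order matches the one used when training your model.

```cpp
#include <array>
#include <cstddef>

// One hourly reading. The field order is an assumption and must match the
// feature order used during training.
struct Reading {
  float temperature;
  float humidity;
  float pressure;
};

constexpr std::size_t kHours = 24;    // window length used by the model
constexpr std::size_t kFeatures = 3;  // temperature, humidity, pressure

class ReadingRing {
 public:
  // Stores a reading, overwriting the oldest one once the buffer is full.
  void push(const Reading& r) {
    data_[head_] = r;
    head_ = (head_ + 1) % kHours;
    if (count_ < kHours) ++count_;
  }

  bool full() const { return count_ == kHours; }

  // Copies the window, oldest reading first, into a flat array of
  // kHours * kFeatures floats. Returns false until 24 readings are stored.
  bool flatten(float* out) const {
    if (!full()) return false;
    for (std::size_t i = 0; i < kHours; ++i) {
      const Reading& r = data_[(head_ + i) % kHours];
      out[i * kFeatures + 0] = r.temperature;
      out[i * kFeatures + 1] = r.humidity;
      out[i * kFeatures + 2] = r.pressure;
    }
    return true;
  }

 private:
  std::array<Reading, kHours> data_{};
  std::size_t head_ = 0;   // next slot to write; the oldest slot when full
  std::size_t count_ = 0;  // number of valid readings stored so far
};
```

At boot the buffer would be filled with the 24 historical values from the weather API, and every hour a new BME280 reading would be pushed in, so the flattened window always covers the most recent 24 hours.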

Follow the instructions in Github repository to install the necessary libraries and configure LVGL to run the demo.

We made it! We trained and deployed a medium-sized convolutional neural network on Wio Terminal with all the bells and whistles, plus the optimizations that allow the device to run stably for long periods of time and look sharp. Do try making this project yourself and possibly improving it! It’s always a pleasure to see that my videos and tutorials are helpful and inspiring for other people.

Until the next time!

Choose the best tool for your TinyML project

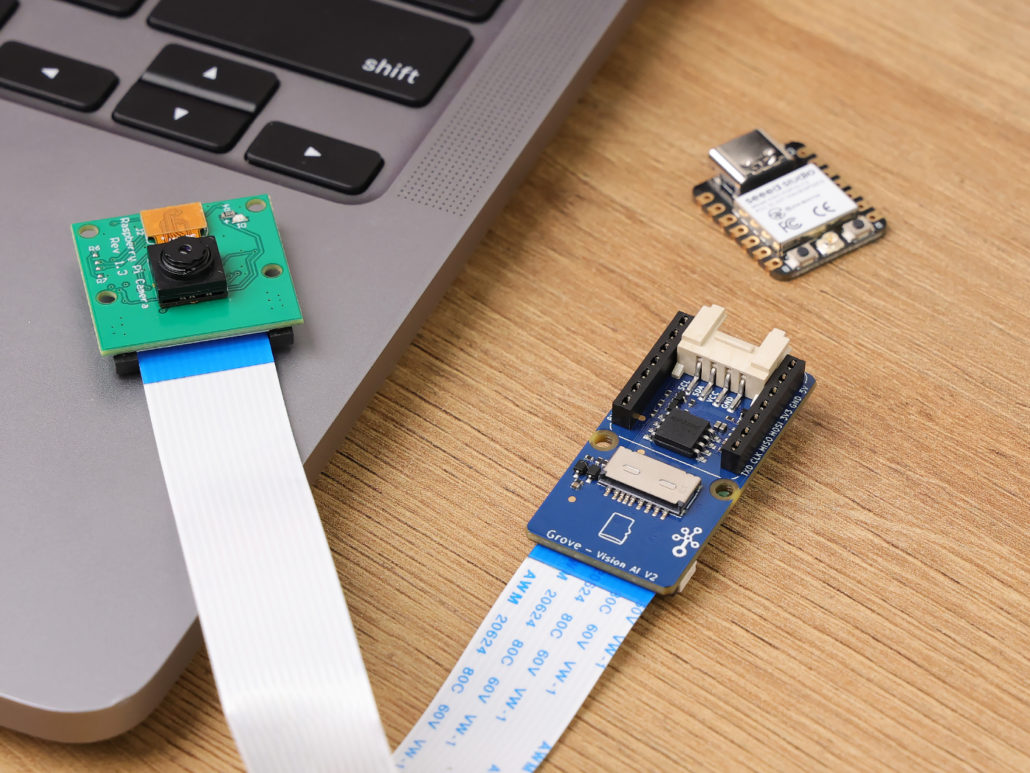

Grove – Vision AI Module V2

It’s an MCU-based vision AI module powered by the Himax WiseEye2 HX6538 processor, featuring Arm Cortex-M55 and Ethos-U55. It integrates Arm Helium technology, which is finely optimized for vector data processing and enables:

- Award-winning low power consumption

- Significant uplift in DSP and ML capabilities

- Designed for battery-powered endpoint AI applications

With support for Tensorflow and Pytorch frameworks, it allows users to deploy both off-the-shelf and custom AI models from Seeed Studio SenseCraft AI. Additionally, the module features a range of interfaces, including IIC, UART, SPI, and Type-C, allowing easy integration with popular products like Seeed Studio XIAO, Grove, Raspberry Pi, BeagleBoard, and ESP-based products for further development.

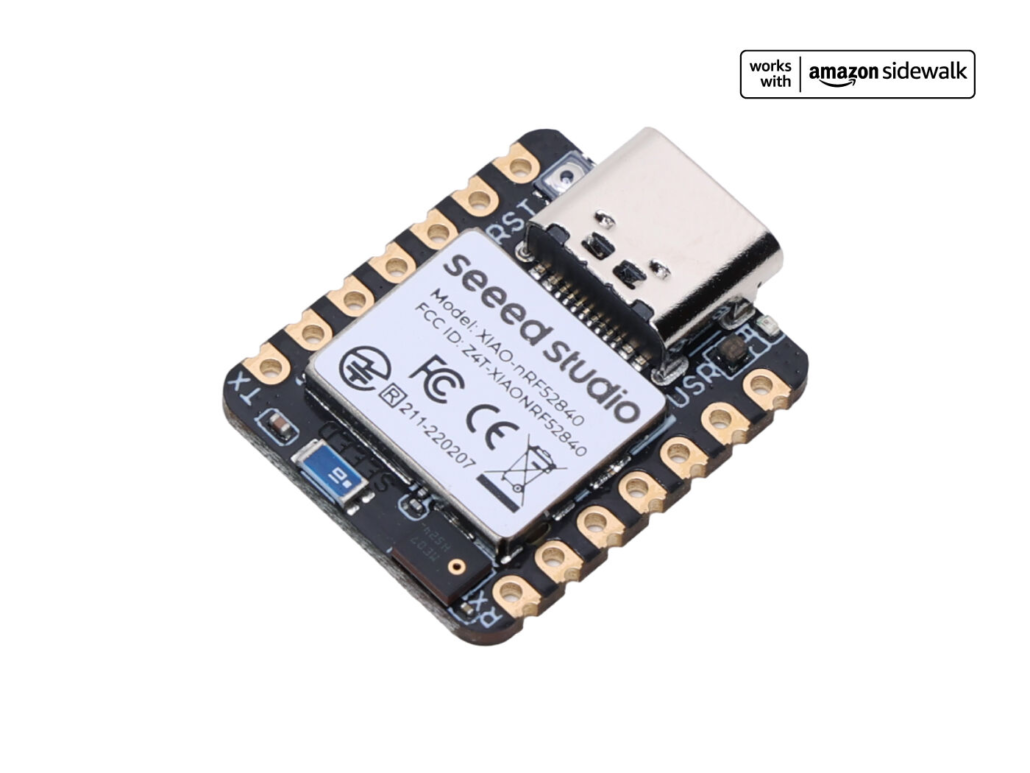

Seeed Studio XIAO ESP32S3 Sense & Seeed Studio XIAO nRF52840 Sense

Seeed Studio XIAO Series boards are diminutive development boards that share a similar hardware structure and are literally thumb-sized. The name “XIAO” stands for one half of their character, “Tiny”, while the other half is “Puissant”.

Seeed Studio XIAO ESP32S3 Sense integrates an OV2640 camera sensor, a digital microphone and SD card support. Combining embedded ML computing power and photography capability, this development board can be a great tool for getting started with intelligent voice and vision AI.

Seeed Studio XIAO nRF52840 Sense carries Bluetooth 5.0 wireless capability and is able to operate with low power consumption. Featuring an onboard IMU and PDM microphone, it can be a great tool for embedded machine learning projects.


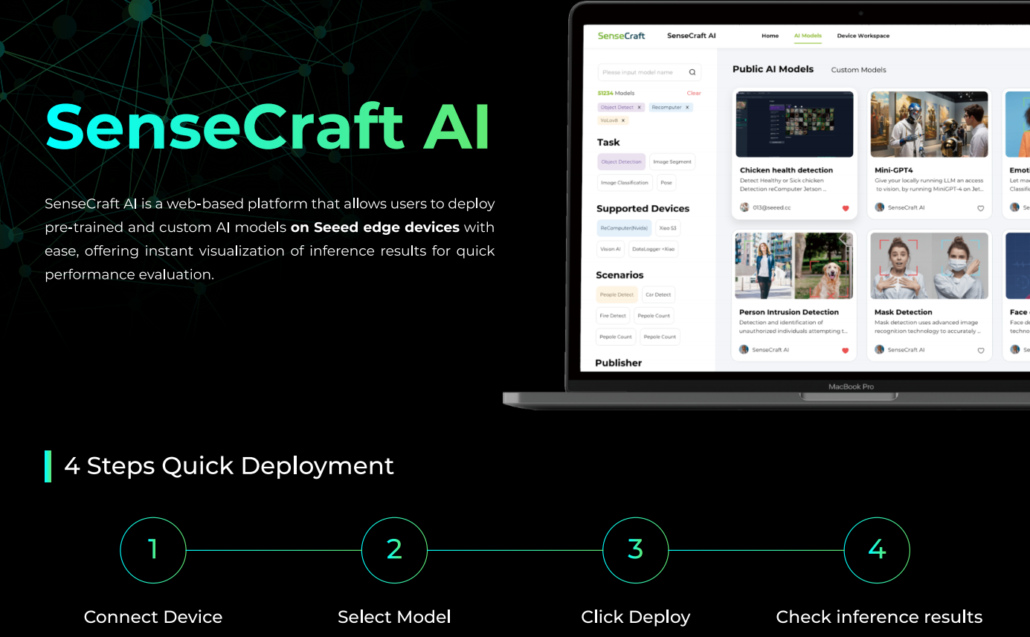

SenseCraft AI

SenseCraft AI is a platform that enables easy AI model training and deployment with no-code/low-code. It supports Seeed products natively, ensuring complete adaptability of the trained models to Seeed products. Moreover, deploying models through this platform offers immediate visualization of identification results on the website, enabling prompt assessment of model performance.

Ideal for tinyML applications, it allows you to effortlessly deploy off-the-shelf or custom AI models by connecting the device, selecting a model, and viewing identification results.