RPLIDAR and ROS Programming: The Best Way to Build a Robot

As LIDAR becomes more and more popular in areas such as self-driving cars, robotics research, obstacle detection and avoidance, environment scanning and 3D modeling, we have received many inquiries about RPLIDAR recently. Why is it a good product for research in SLAM (not the NBA news magazine, of course!)? What software is recommended for RPLIDAR and robotic applications?

Here we go! We’ve prepared a guide on how to quickly learn the software and hardware for RPLIDAR and apply SLAM in robotic research!

A Brief Background of ROS and Lidar

As a robot software platform, ROS provides operating-system-like functions for heterogeneous computer clusters and plays an important role in research on robot motion. Lidar, as the core sensor for robot positioning and navigation, plays an equally important role in autonomous motion. RPLIDAR is a cost-effective sensor for robotics and hardware researchers and hobbyists. Combining ROS and RPLIDAR makes it easier to build autonomous positioning and navigation for a robot.

The ROS tutorials explain ROS well: it is an open-source software library that is widely used by robotics researchers and companies.

1. What is RPLIDAR?

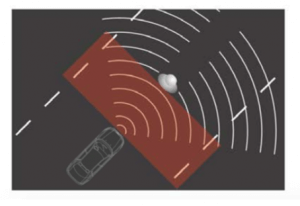

RPLIDAR is a low-cost LIDAR sensor suitable for indoor robotic SLAM applications. The 2D point cloud data it produces can be used in mapping, localization and object/environment modeling. RPLIDAR is a great tool for research in SLAM (simultaneous localization and mapping).

Right now, there are three kinds of RPLIDAR with different features.

RPLIDAR A1M8 is based on the laser triangulation ranging principle and uses high-speed vision acquisition and processing hardware developed by SLAMTEC. The system takes more than 8000 distance measurements per second.

RPLIDAR A2M5/A2M6 is an enhanced version of the 2D laser range scanner (LIDAR). The system can perform a 2D 360-degree scan within an 18-meter range. The generated 2D point cloud data can be used in mapping, localization and object/environment modeling.

RPLIDAR A3 can take up to 16000 laser ranging samples per second at a high rotation speed. Equipped with SLAMTEC’s patented OPTMAG technology, it overcomes the lifespan limitation of traditional LIDAR systems and can work stably for a long time.
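To get a feel for what these sample rates mean in practice, the short Python sketch below converts a sample rate into points per revolution and the angular spacing between neighbouring points. The 8000 and 16000 samples per second come from the descriptions above; the 10 Hz scan rate is only an assumed value for illustration, so check the datasheet of your own unit.

```python
def scan_geometry(samples_per_second, scan_rate_hz):
    """Return (points per revolution, degrees between neighbouring points)."""
    if samples_per_second <= 0 or scan_rate_hz <= 0:
        raise ValueError("rates must be positive")
    points_per_rev = samples_per_second / scan_rate_hz
    angular_step_deg = 360.0 / points_per_rev
    return points_per_rev, angular_step_deg


# Hypothetical scan rate, chosen only to make the arithmetic concrete.
ASSUMED_SCAN_RATE_HZ = 10.0

for name, rate in (("A1M8", 8000), ("A3", 16000)):
    points, step = scan_geometry(rate, ASSUMED_SCAN_RATE_HZ)
    print(f"{name}: {points:.0f} points/rev, {step:.3f} deg between points")
```

At a fixed scan rate, doubling the sample rate halves the angular spacing, which is why a higher sample rate gives a denser 2D point cloud.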

RPLIDAR Application Scenarios

2. Overview of ROS Package for RPLIDAR

This package provides basic device handling for the 2D laser scanners RPLIDAR A1, A2 and A3.

On the wiki, you can find the description and usage flow of the corresponding RPLIDAR products, their interfaces and parameters, and the latest version information on current ROS support.

For any package in the ROS ecosystem, you only need to find the corresponding wiki and GitHub pages to understand its data interface and internal implementation.

The GitHub repository of rplidar_ros mainly contains the package source code, its version history, and the developer discussion of problems with the package.

If you have a problem with the package, you can ask questions and search for solutions under Issues.

Pull requests that improve the original code or add functionality are welcome!

3. ROS Nodes

Basically, the communication interface consists of one topic, scan, which publishes the scan data from the laser, and two services, start_motor and stop_motor, which start and stop the RPLIDAR motor. Other ROS packages subscribe to the /scan topic to perform mapping or obstacle avoidance.

rplidar_ros provides rplidar.launch. In actual use, you adjust its parameters to your setup: the serial port (serial_port), the coordinate frame name (frame_id) and whether the scan is inverted (inverted).
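As an illustration of how a package consuming /scan might use the data for obstacle avoidance, here is a minimal Python sketch. The field names (angle_min, angle_increment, range_min, range_max, ranges) mirror the standard sensor_msgs/LaserScan message; the Scan class is only a stand-in so that the example runs without ROS, and nearest_obstacle is a hypothetical helper, not part of rplidar_ros.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Scan:
    """Stand-in for sensor_msgs/LaserScan (only the fields used here)."""
    angle_min: float        # angle of the first reading, radians
    angle_increment: float  # angle between consecutive readings, radians
    range_min: float        # readings below this are invalid, metres
    range_max: float        # readings above this are invalid, metres
    ranges: List[float]     # one distance per beam, metres


def nearest_obstacle(scan: Scan) -> Optional[Tuple[float, float]]:
    """Return (distance, angle) of the closest valid reading, or None."""
    best = None
    for i, r in enumerate(scan.ranges):
        # Skip inf/NaN and out-of-range values, which mark missing returns.
        if not math.isfinite(r) or not (scan.range_min <= r <= scan.range_max):
            continue
        if best is None or r < best[0]:
            best = (r, scan.angle_min + i * scan.angle_increment)
    return best


scan = Scan(angle_min=-math.pi, angle_increment=math.pi / 2,
            range_min=0.15, range_max=12.0,
            ranges=[2.0, float("inf"), 0.8, 0.05])
print(nearest_obstacle(scan))
```

In a real node, the same function could be called from the callback of a subscriber to /scan, with the incoming message taking the place of the Scan stand-in.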

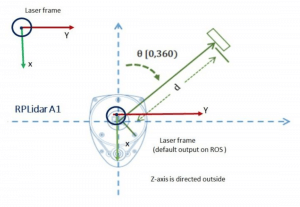

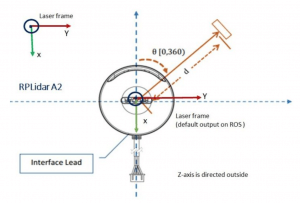

4. RPLIDAR Frame

RPLIDAR rotates clockwise. The SDK outputs distance and angle data in a left-handed coordinate system, and rplidar_ros converts it to a right-handed coordinate system before publishing.
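The handedness change can be sketched in a few lines of Python. This assumes the SDK reports the angle in degrees, increasing clockwise, and that 0 degrees lies along the same axis in both conventions. The exact axis alignment and any angular offset applied by rplidar_ros are not covered here, so treat this as an illustration of the flip only, with a hypothetical function name.

```python
import math


def clockwise_polar_to_right_handed(angle_cw_deg, distance):
    """Convert a clockwise (left-handed) polar reading to right-handed x, y.

    Assumption: 0 degrees points along +x in both conventions.
    """
    # Negating the angle turns a clockwise angle into a counter-clockwise one.
    angle_ccw = math.radians(-angle_cw_deg)
    return distance * math.cos(angle_ccw), distance * math.sin(angle_ccw)


x, y = clockwise_polar_to_right_handed(90.0, 2.0)
print(round(x, 6), round(y, 6))
```

A reading taken 90 degrees clockwise from the zero direction ends up on the negative y side in the right-handed frame, where positive angles run counter-clockwise.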

After reading the documentation, build a robot system with RPLIDAR

Now that we have gone through the rplidar_ros package, let’s build a robot system that performs autonomous positioning and navigation.

If I want to use LIDAR on a robot body, how do I set it up?

In fact, in ROS you can use existing packages to build a system with basic functions. You only need to make sure that the topics/services and TF frames are consistent. TF is a core concept in ROS; it maintains the pose transformations between the coordinate frames of all the data.

To build a robot ROS system with RPLIDAR, you need to define the transformation between the RPLIDAR coordinate frame and the base coordinate frame of the robot body according to how the sensor is actually mounted. There are three main ways to implement this transformation:

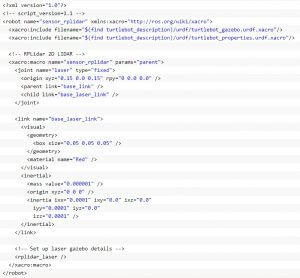

4.1 Add rplidar to the URDF file

In the URDF of an existing robot model, add joint and link elements that attach the LIDAR to the robot body. The picture below shows the RPLIDAR model added to the TurtleBot simulation model.

4.2 TF static_transform_publisher:

Add the static TF transformation to the launch file used to start the robot.

<node name="base2laser" pkg="tf" type="static_transform_publisher"
      args="0.07 0 0 0 0 0 1 /base_link /laser 50"/>
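To see what this static transform means numerically, the Python sketch below applies the same translation and rotation to a point measured in the laser frame and expresses it in base_link. It reads the arguments in the order x y z qx qy qz qw, the quaternion form accepted by static_transform_publisher. The function names are hypothetical; in a running system TF performs this computation for you.

```python
def rotate_by_quaternion(q, v):
    """Rotate vector v by the unit quaternion q = (qx, qy, qz, qw)."""
    qx, qy, qz, qw = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2.0 * (qy * vz - qz * vy)
    ty = 2.0 * (qz * vx - qx * vz)
    tz = 2.0 * (qx * vy - qy * vx)
    # v' = v + qw * t + cross(q_xyz, t)
    return (vx + qw * tx + (qy * tz - qz * ty),
            vy + qw * ty + (qz * tx - qx * tz),
            vz + qw * tz + (qx * ty - qy * tx))


def laser_to_base(point, translation, quaternion):
    """Express a point given in the laser frame in the base_link frame."""
    rx, ry, rz = rotate_by_quaternion(quaternion, point)
    tx, ty, tz = translation
    return (rx + tx, ry + ty, rz + tz)


# Values taken from the launch snippet: 0.07 0 0 (metres), 0 0 0 1 (identity).
TRANSLATION = (0.07, 0.0, 0.0)
QUATERNION = (0.0, 0.0, 0.0, 1.0)

# A point 1 m straight ahead of the laser.
print(laser_to_base((1.0, 0.0, 0.0), TRANSLATION, QUATERNION))
```

With the identity quaternion the laser axes are parallel to those of base_link, so the only effect is the 0.07 m offset along x. If the sensor is mounted rotated, the quaternion in the launch file has to change accordingly.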

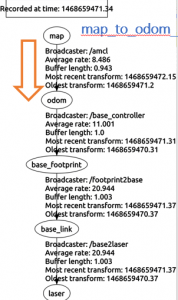

4.3 View the TF tree with rqt/tf_echo:

5. ROS packages for current open-source 2D Lidar

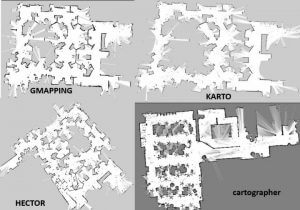

5.1 The current open-source 2D Lidar SLAM ROS packages mainly include:

Gmapping

ros-perception/slam_gmapping, ros-perception/openslam_gmapping

Hector

tu-darmstadt-ros-pkg/hector_slam

Karto

ros-perception/slam_karto, ros-perception/open_karto, skasperski/navigation_2d

Cartographer

googlecartographer/cartographer, googlecartographer/cartographer_ros

5.2 TF Tree

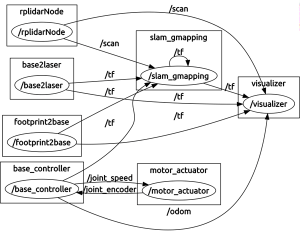

5.3 rqt_graph

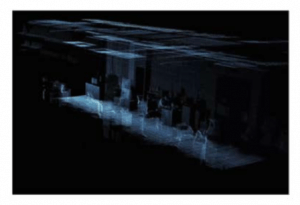

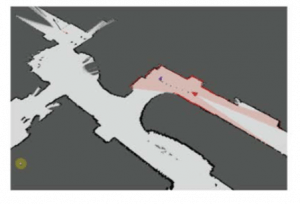

5.4 SLAM Graph Construction

Setting up the system depends on unifying the interface names of the topics/services and TF frames. How the system then runs depends on the algorithm implemented inside each package.
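One way to picture this unification is to check that the frame name carried by the scan data can be traced up the TF tree to the frame the SLAM package works in. The Python sketch below does this on a plain dictionary. The frame names map, odom, base_link and laser are common conventions used here for illustration, and the helper functions are hypothetical, not a TF API.

```python
def chain_to_root(frame, parent_of):
    """Return the list of frames from `frame` up to the root of the tree."""
    chain = [frame]
    while chain[-1] in parent_of:
        nxt = parent_of[chain[-1]]
        if nxt in chain:
            raise ValueError(f"cycle in TF tree at {nxt!r}")
        chain.append(nxt)
    return chain


def is_connected(child, ancestor, parent_of):
    """True if `ancestor` lies on the path from `child` to the root."""
    return ancestor in chain_to_root(child, parent_of)


# child frame -> parent frame, as a TF tree viewer would display it
parent_of = {"laser": "base_link", "base_link": "odom", "odom": "map"}

print(chain_to_root("laser", parent_of))
print(is_connected("laser", "map", parent_of))
# A mismatched frame_id (for example a differently named laser frame)
# is not part of the tree, so it cannot be related to the map frame.
print(is_connected("laser_frame", "map", parent_of))
```

A mismatch like the last case is a typical reason why a SLAM package receives scans but cannot use them: the names on both sides have to agree.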

I know this is not a quick read (thank you for your patience & precious time!), and hopefully it is informative for you, in providing both hardware and software knowledge.

If you have any questions, suggestions or complaints, feel free to comment on this blog!

Stay tuned for our next post about RPLIDAR and SLAM.

Related Links:

More RPLIDAR product information